AI with LLMs Chatbot Flow

Background Information

AI/ML, and specifically Large Language Models (LLMs) for generative AI (GenAI), has been the hottest topic in the application development world since the birth of OpenAI’s ChatGPT on November 30, 2022.

The GPT family, and the transformer architecture in particular, has produced models that are incredibly capable at Natural Language Processing (NLP) tasks, making them ideally suited to conversational, interactive user experiences. The Hello World for an LLM is a chatbot.

Demo Template Wizard

Narrator: I want to show you a template that provisions both the SDLC (Software Development Lifecycle) and the MDLC (Model Development Lifecycle). The SDLC is implemented as a Tekton-based Trusted Application Pipeline, as seen previously, and the MDLC is implemented as a Red Hat OpenShift AI (RHOAI) pipeline based on Kubeflow.

With open LLMs such as Llama, Mistral or Granite, you can start with a foundation model that does not have to be trained from scratch. These models can be managed, served and monitored throughout their lifecycle by Red Hat OpenShift AI, while your application lifecycle remains independent and potentially hosted on OpenShift, as will be seen in this demonstration. Perhaps most importantly, these open LLMs can live in your datacenter or VPC, where your private data remains yours and does not have to traverse the internet.

Developer Hub templates and GitOps are the driving engines that allow for both self-service and standardization. This means it becomes much easier for developers to adopt the standards you set for the application stack.

Red Hat Developer Hub is our supported, enterprise-ready version of Backstage. It is paired with Red Hat Trusted Application Pipeline, which leverages Trusted Artifact Signer, Trusted Profile Analyzer, Quay and Advanced Cluster Security.

Let’s see it in action. Hopefully things become clearer as we go.

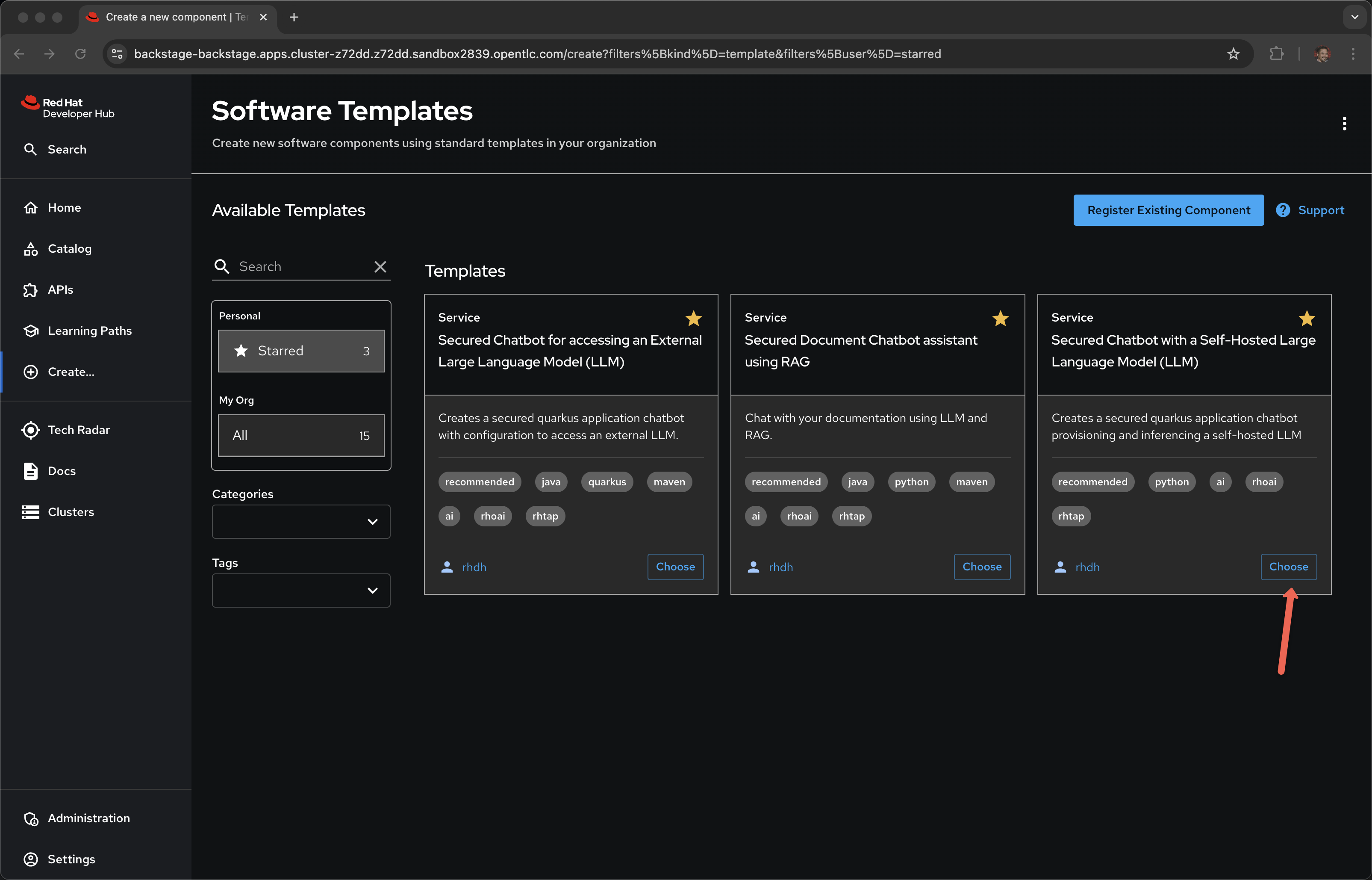

Click on Create… in the left-hand navigation menu

Click Choose on the Secured Chatbot with a Self-Hosted Large Language Model (LLM) template

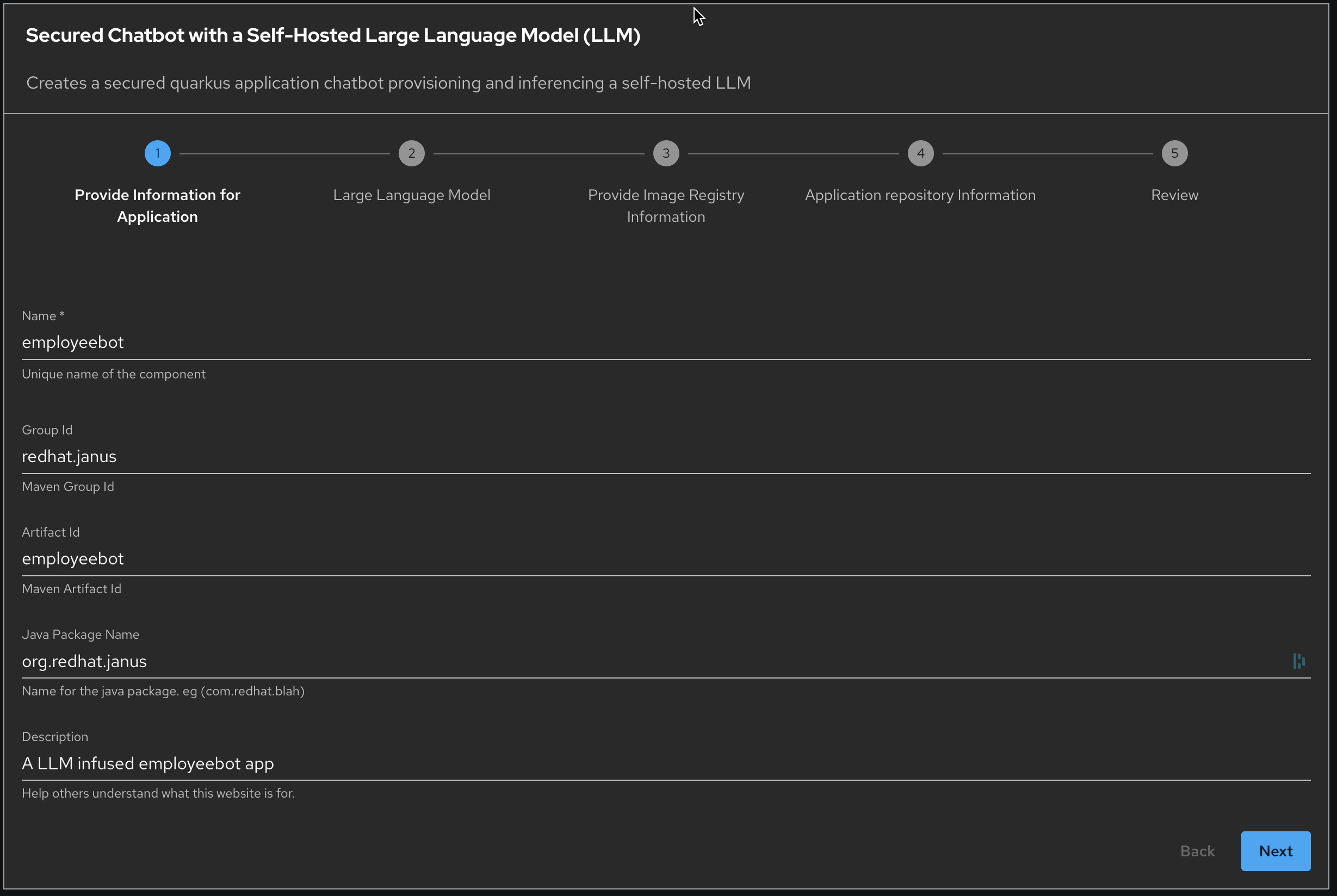

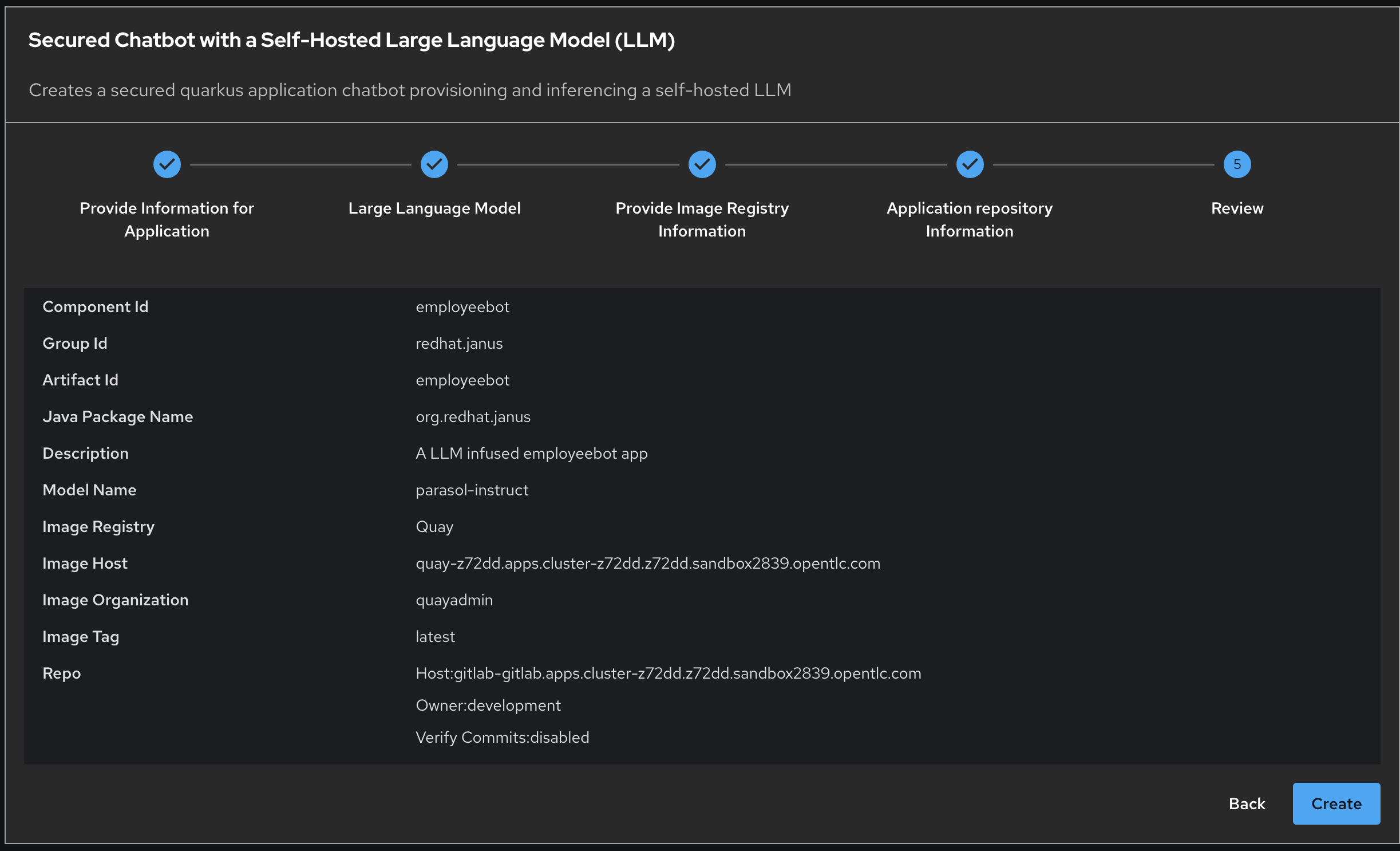

Name: employeebot

Group Id: redhat.janus

Artifact Id: employeebot

Java Package Name: org.redhat.janus

Description: A LLM infused employeebot app

Click Next

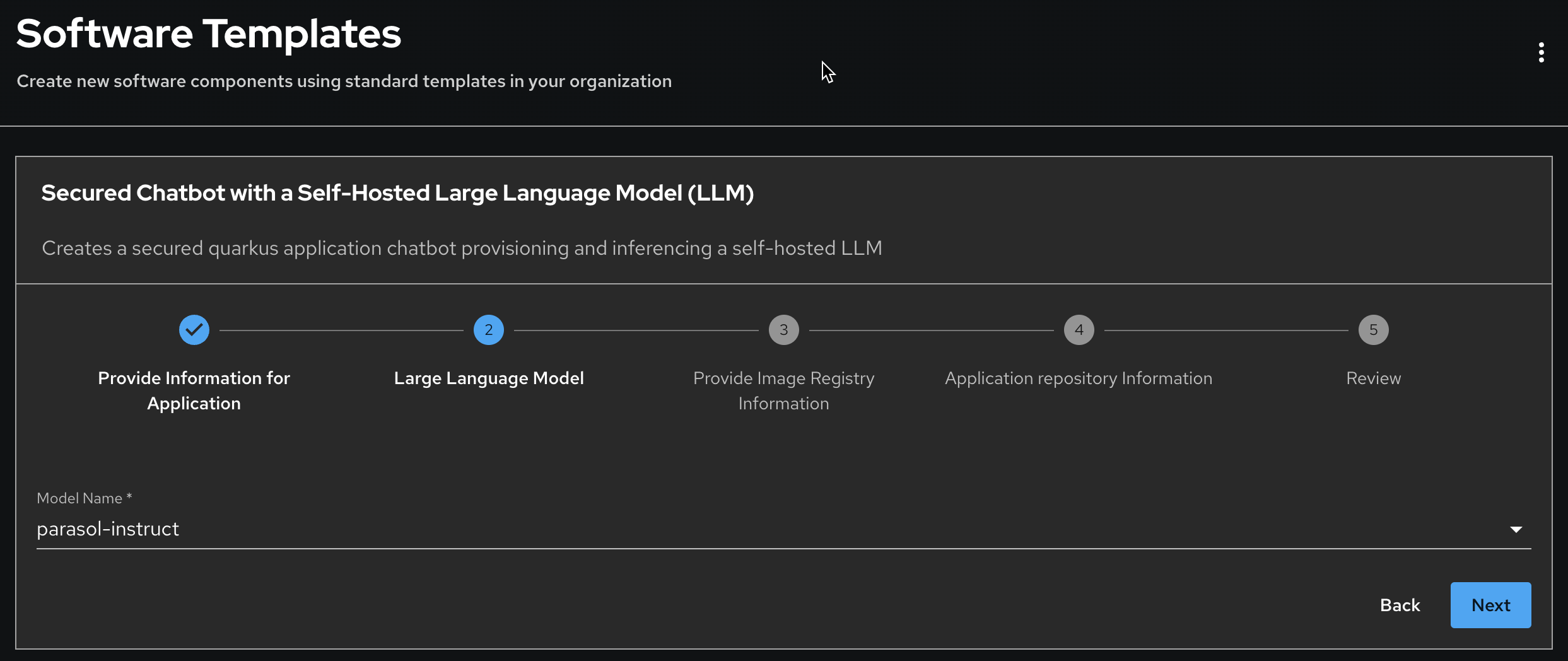

Model Name: parasol-instruct

Narrator: These models have been curated and approved by Parasol’s data science team. Parasol Insurance is a fictional company specializing in providing comprehensive insurance solutions.

Which models are listed in the template is a matter of negotiation and collaboration with the people who own the models within Parasol or, in your case, within your organization. These models are managed by Red Hat OpenShift AI (RHOAI), which we will see later.

Click Next

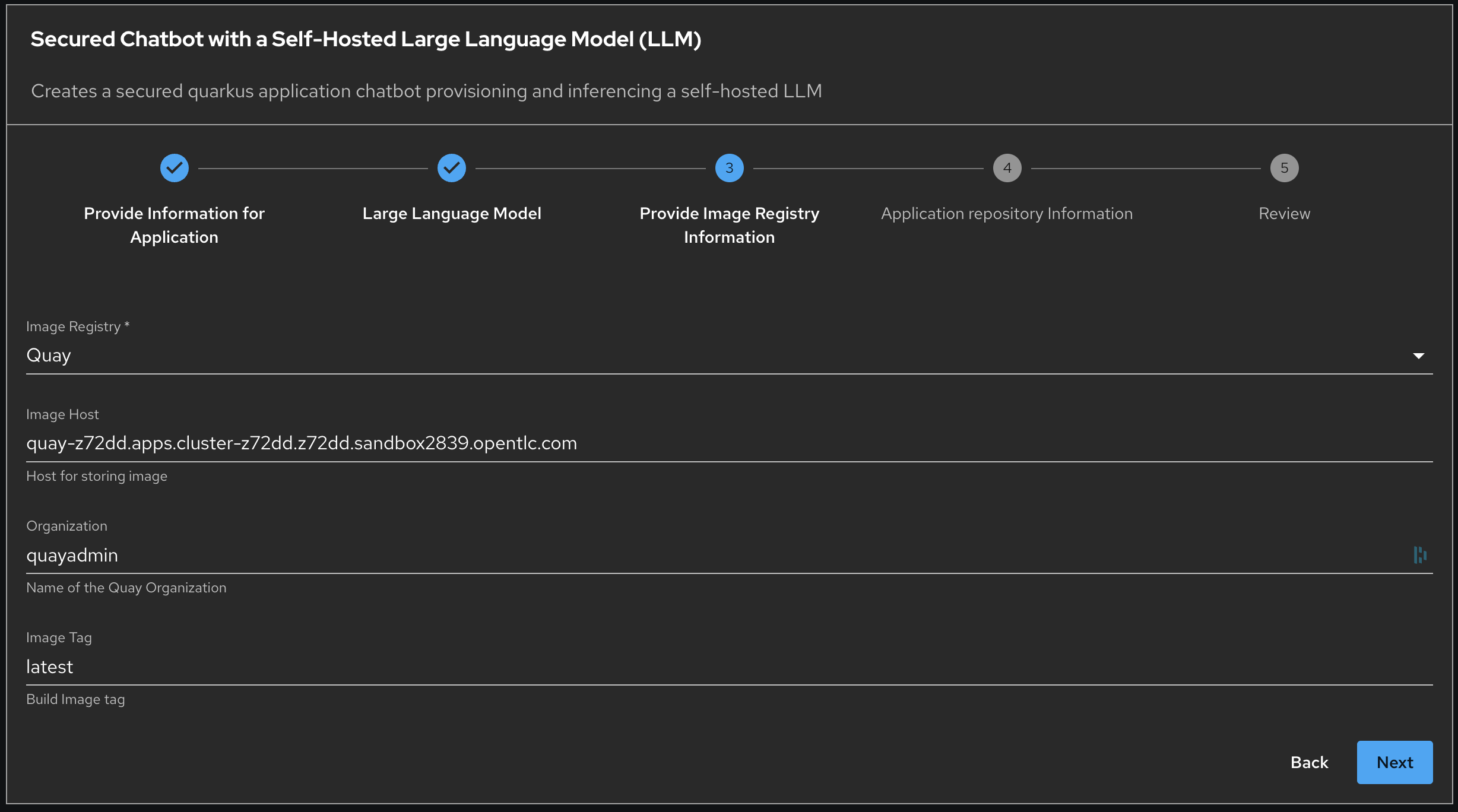

For Image Registry, take all the defaults.

Click Next

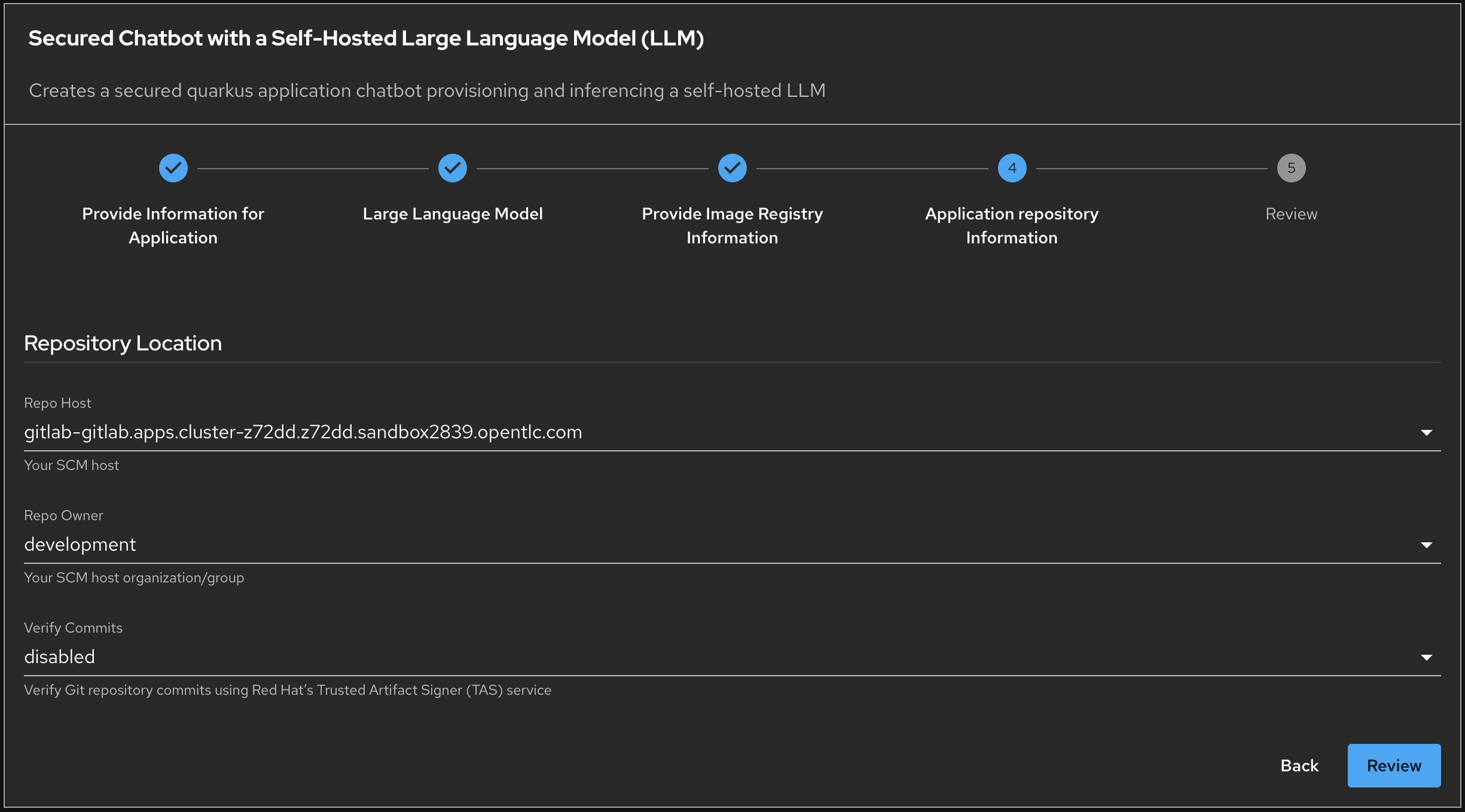

For Repository Location, take all the defaults

Click Review

Click Create

The animation takes only a few seconds, but it hides the heavy lifting happening under the covers.

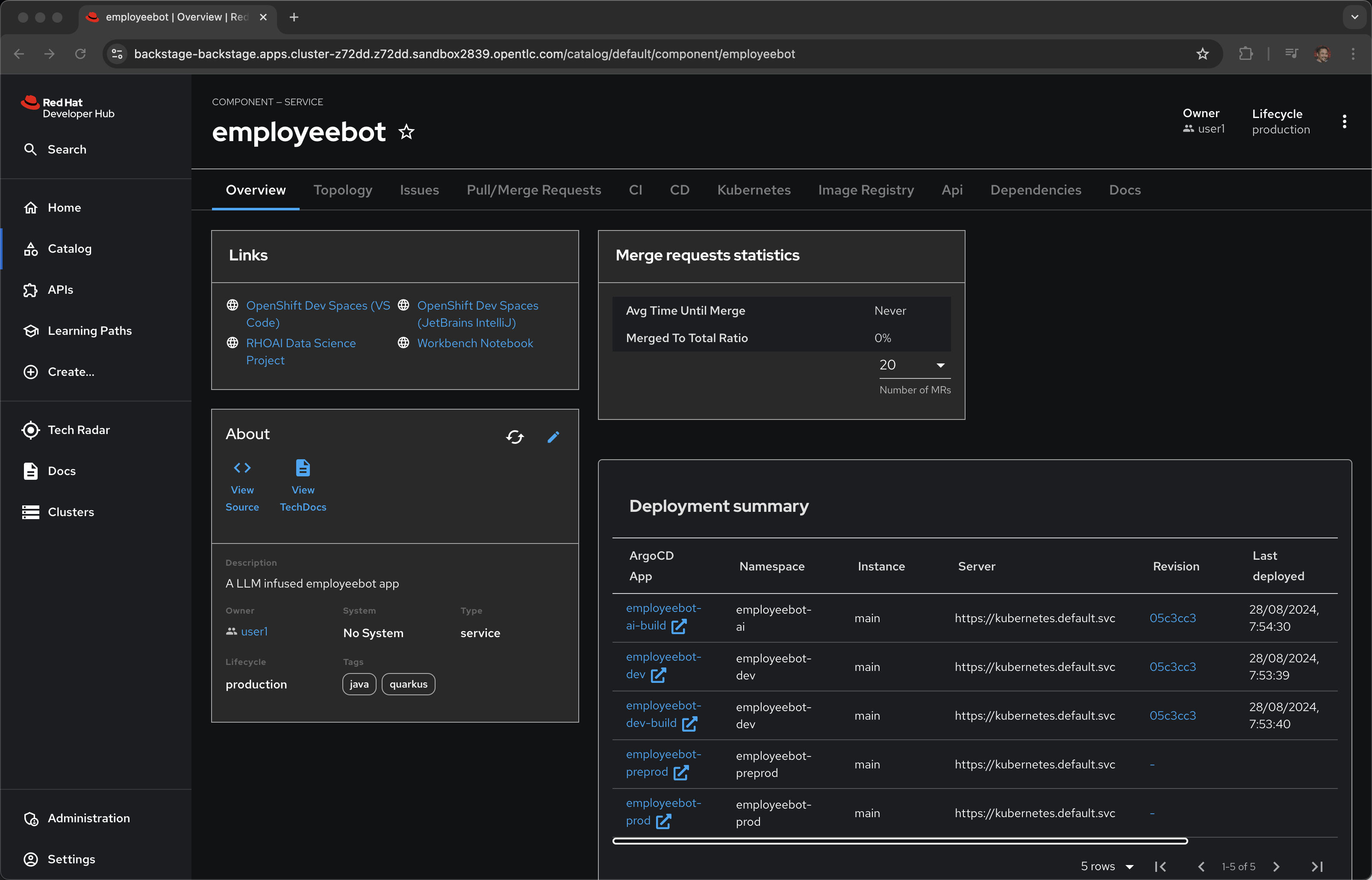

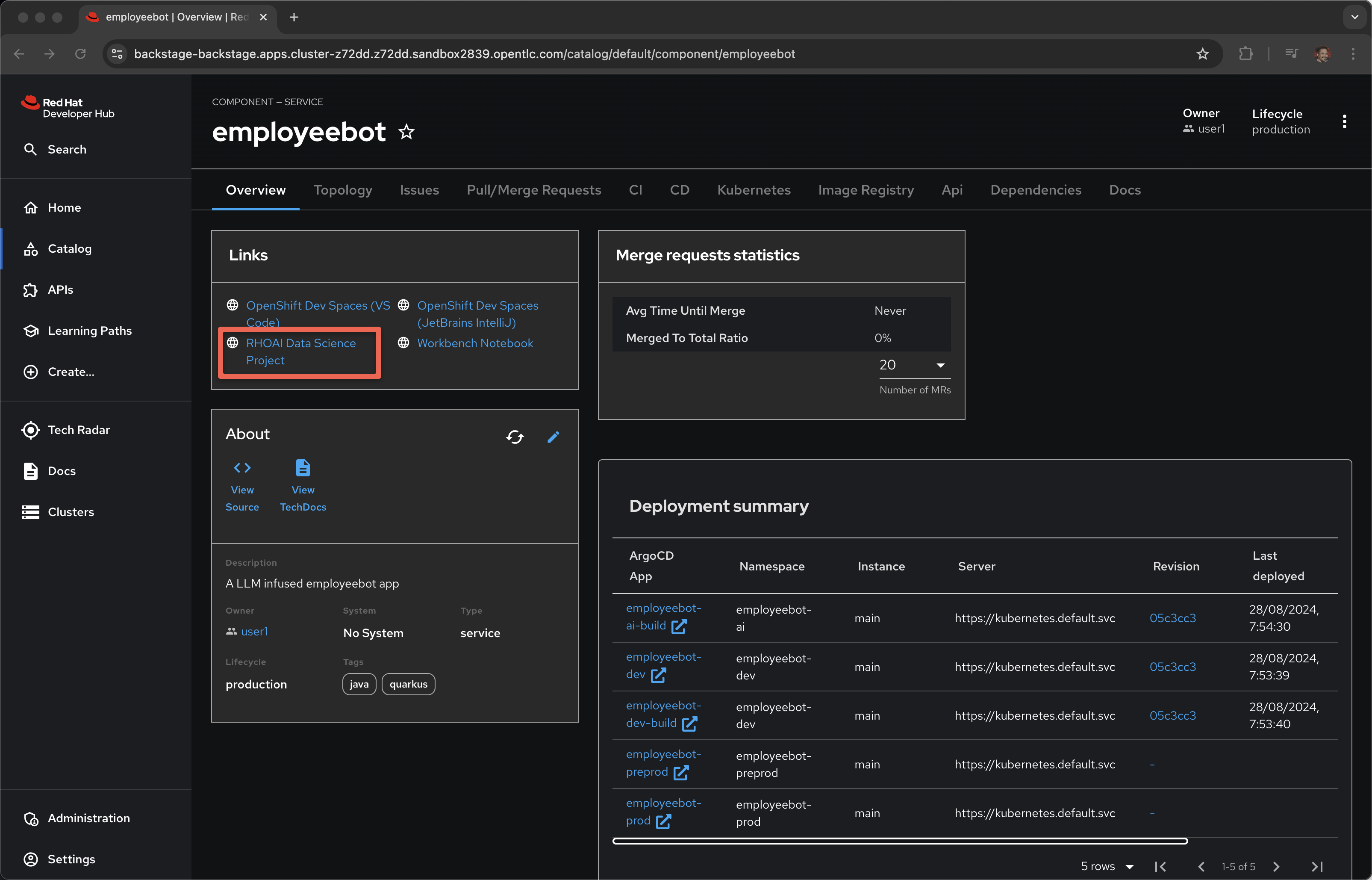

Click on Open Component in catalog

Narrator: The template wizard makes everything look super simple. Behind the scenes, Argo CD (OpenShift GitOps) is actually doing the heavy lifting and provisioning everything, making this a self-service process for the user. No tickets, no waiting.

Let’s go check out the GitOps repository over in GitLab.

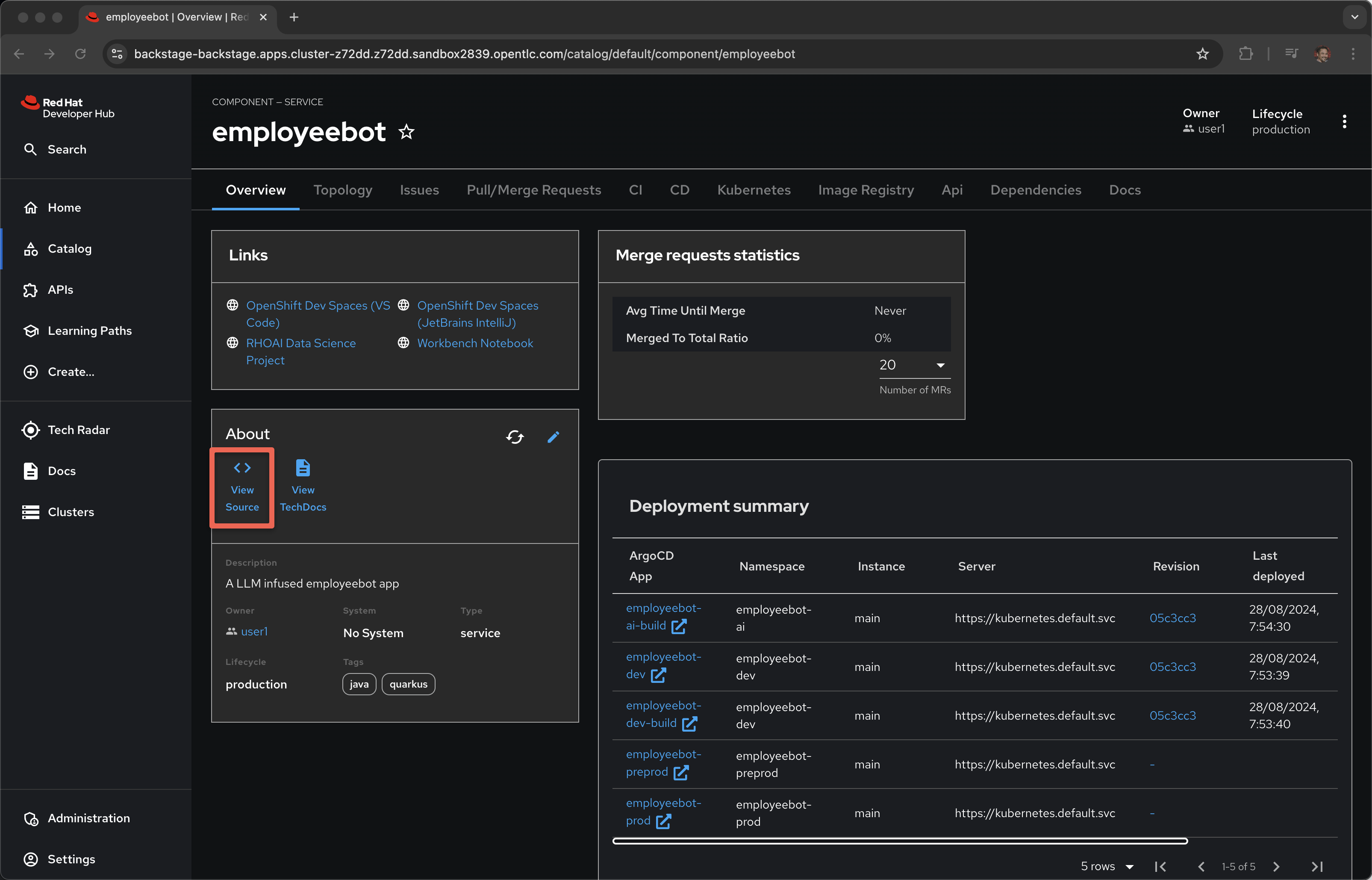

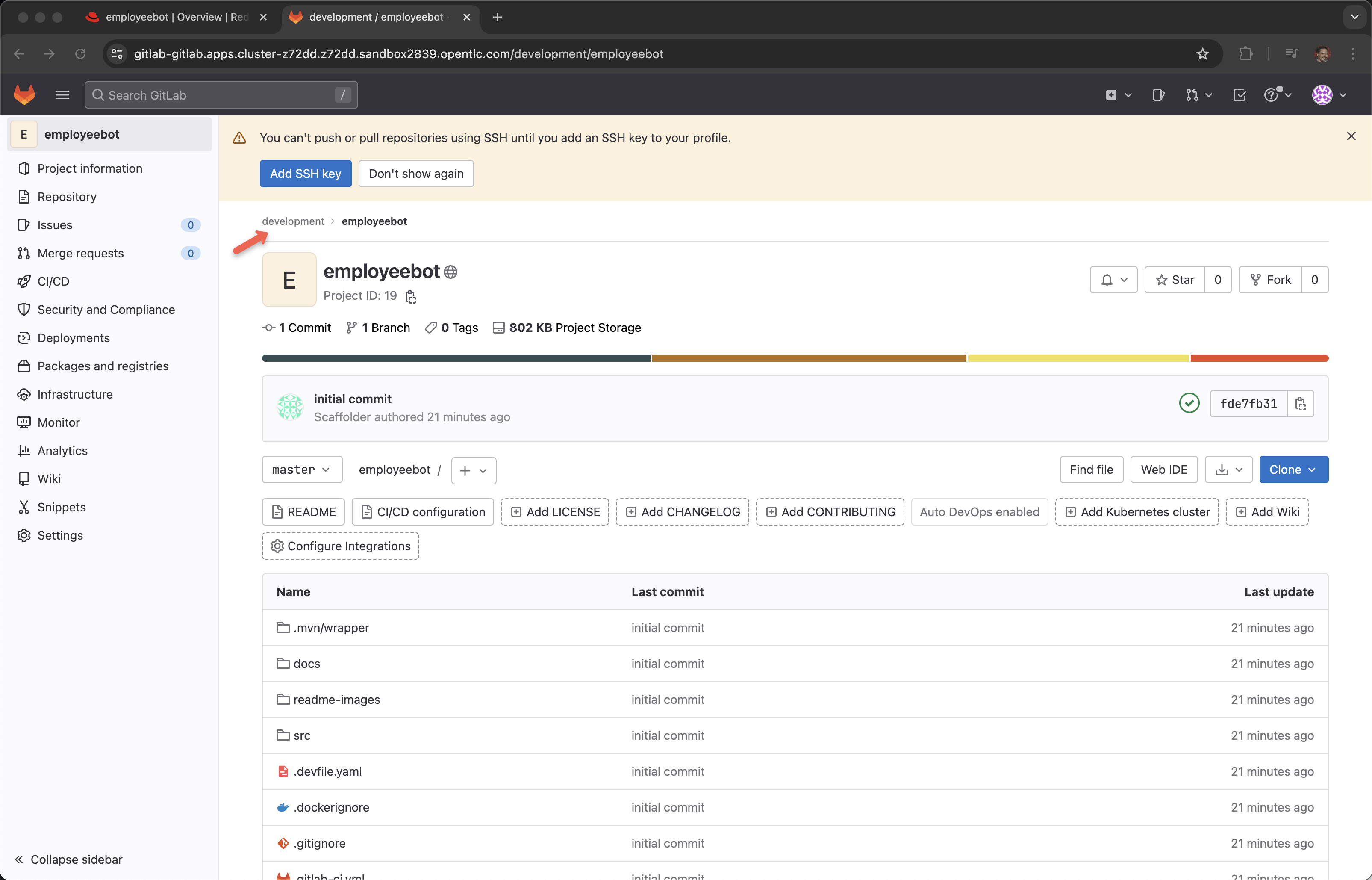

Click View Source

Click development

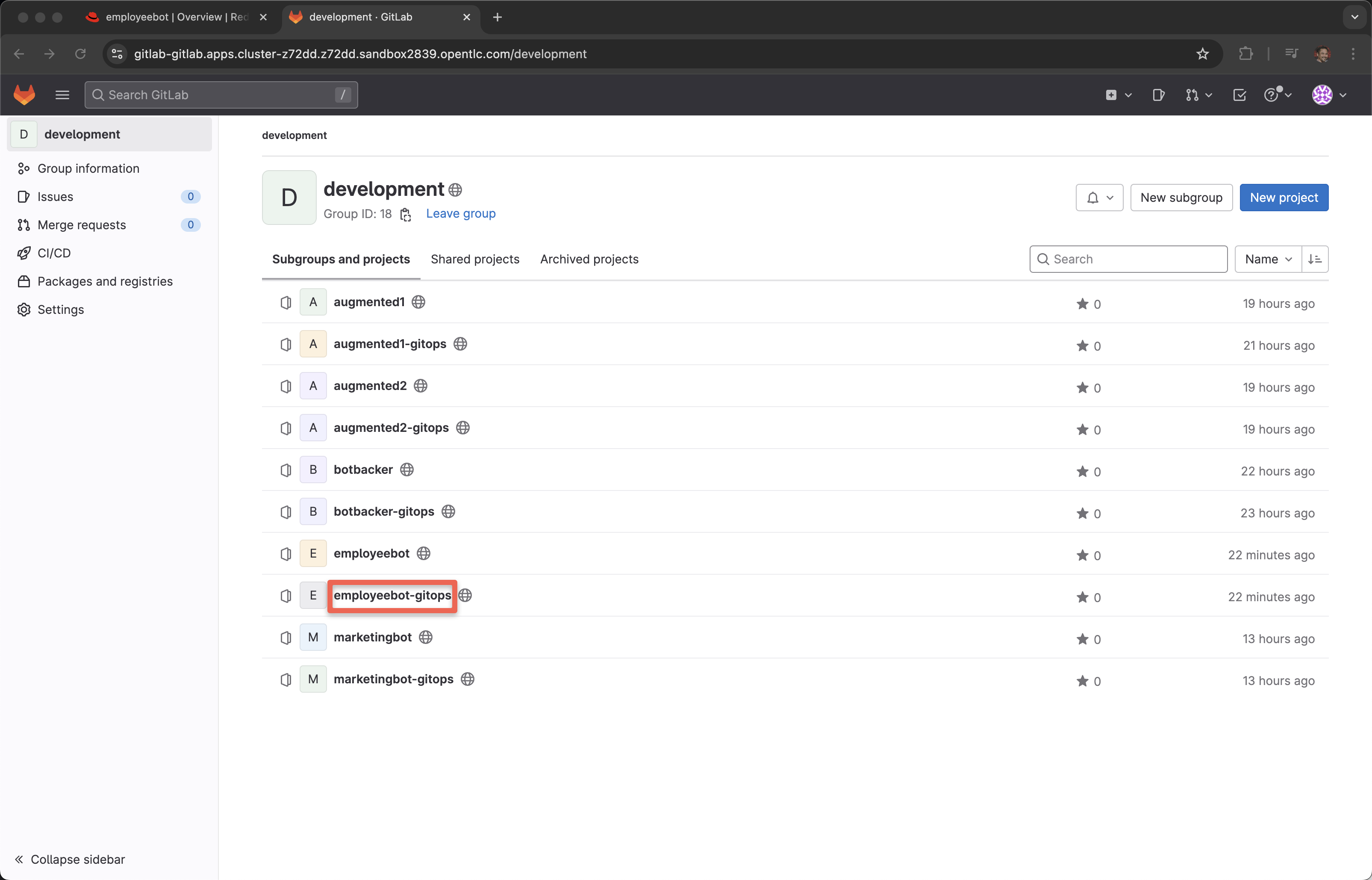

Click employeebot-gitops

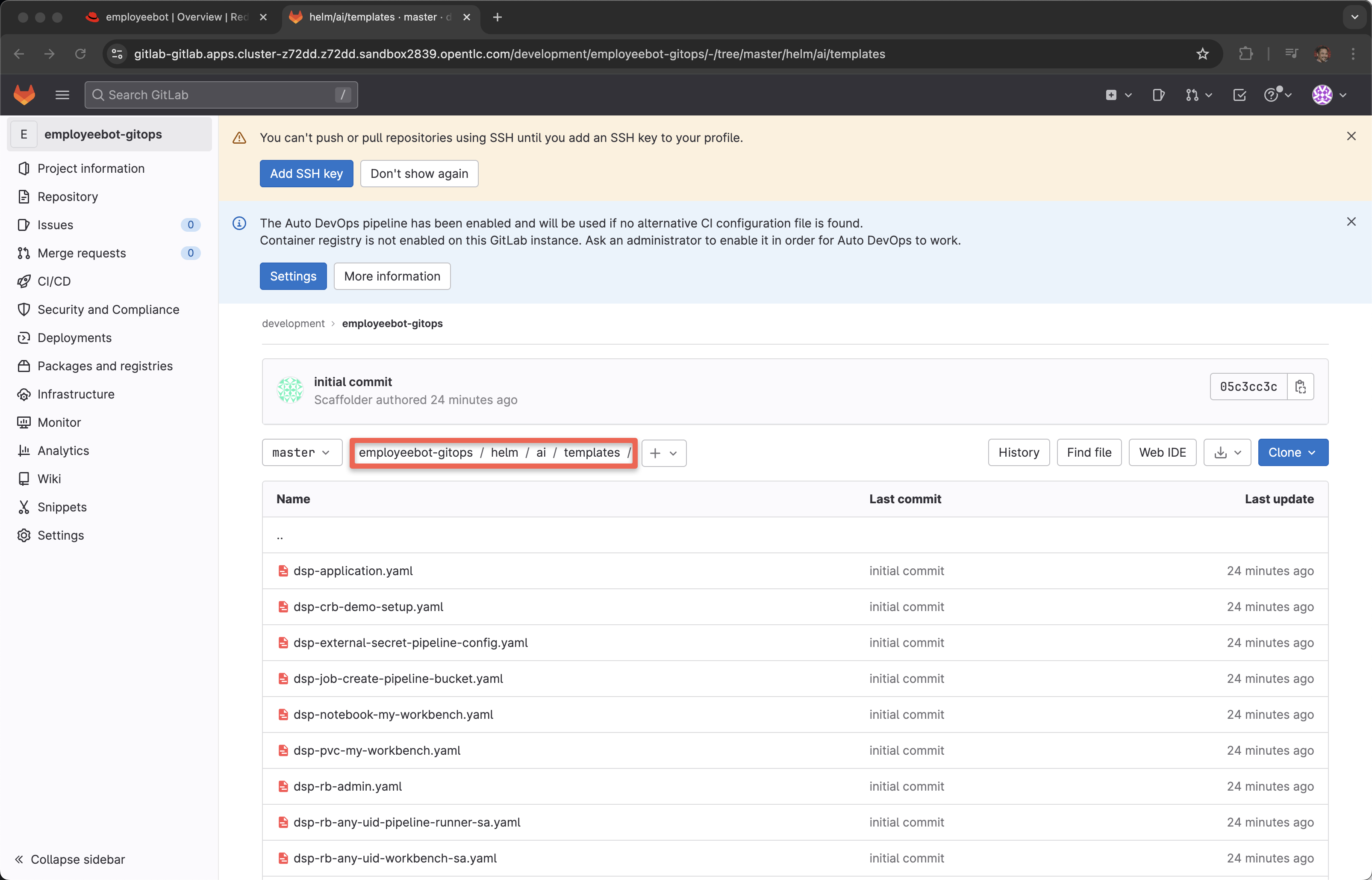

Drill down into helm/ai/templates

Narrator: We could spend the next hour talking about the power of GitOps, but for now I want to get back to the developer experience. It is important to note that a Developer Hub template is also stored in a git repository, so if you want collaboration you can accept pull/merge requests from other members of your internal developer community.

Back to Overview tab

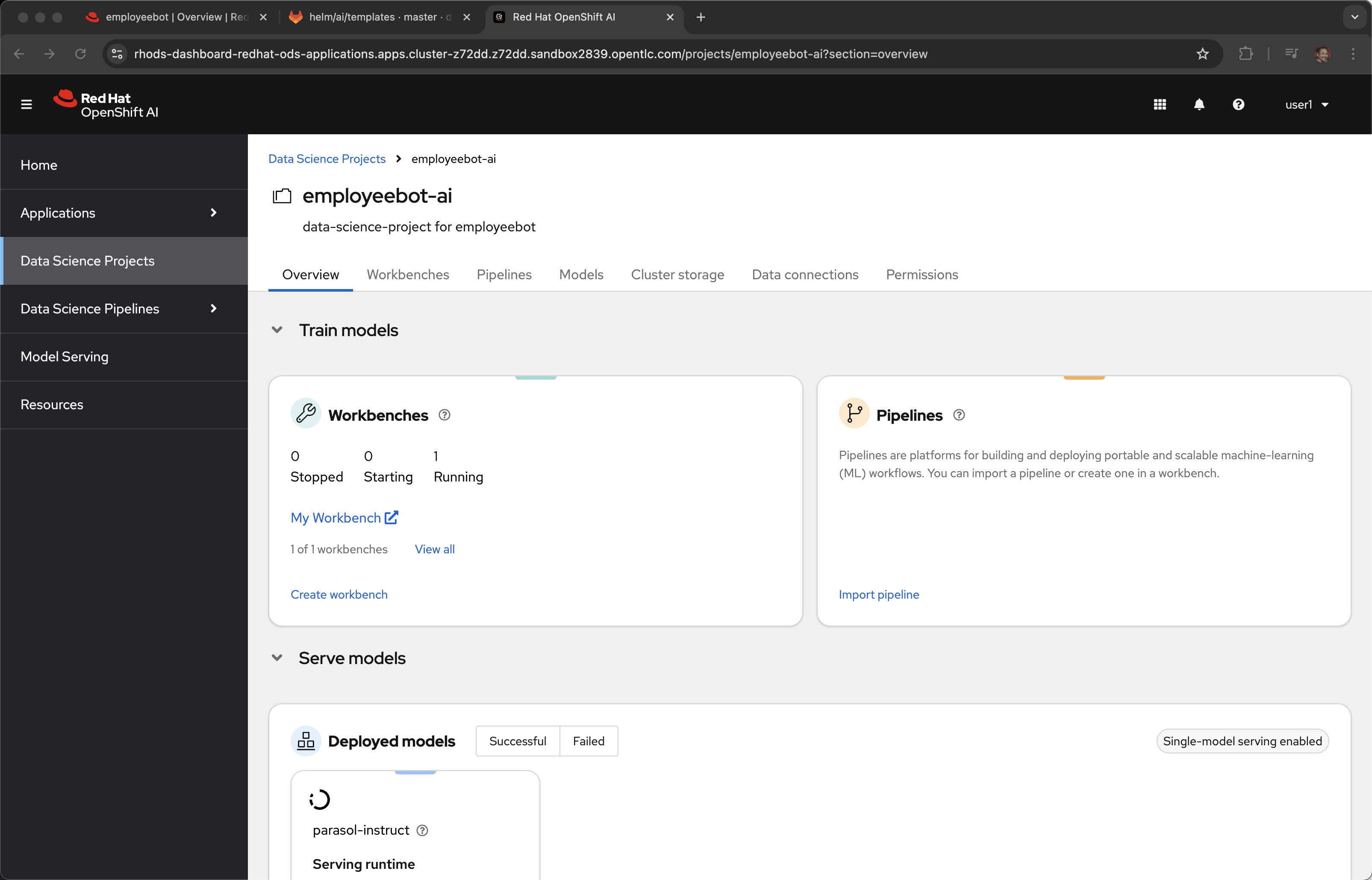

Click RHOAI Data Science Project

Narrator: The parasol-instruct model is based on Mistral-7B. It was previously downloaded from Hugging Face and placed in cluster-hosted storage, in this case an open source S3-compatible solution called MinIO. The model is large, so it takes several minutes for the model server (the pod) to spin up and become ready. We will look into the model serving and model pipeline capabilities later in our demonstration.

For the sake of time, like in a TV cooking show, we will skip over to a project where the model server is already up and running.

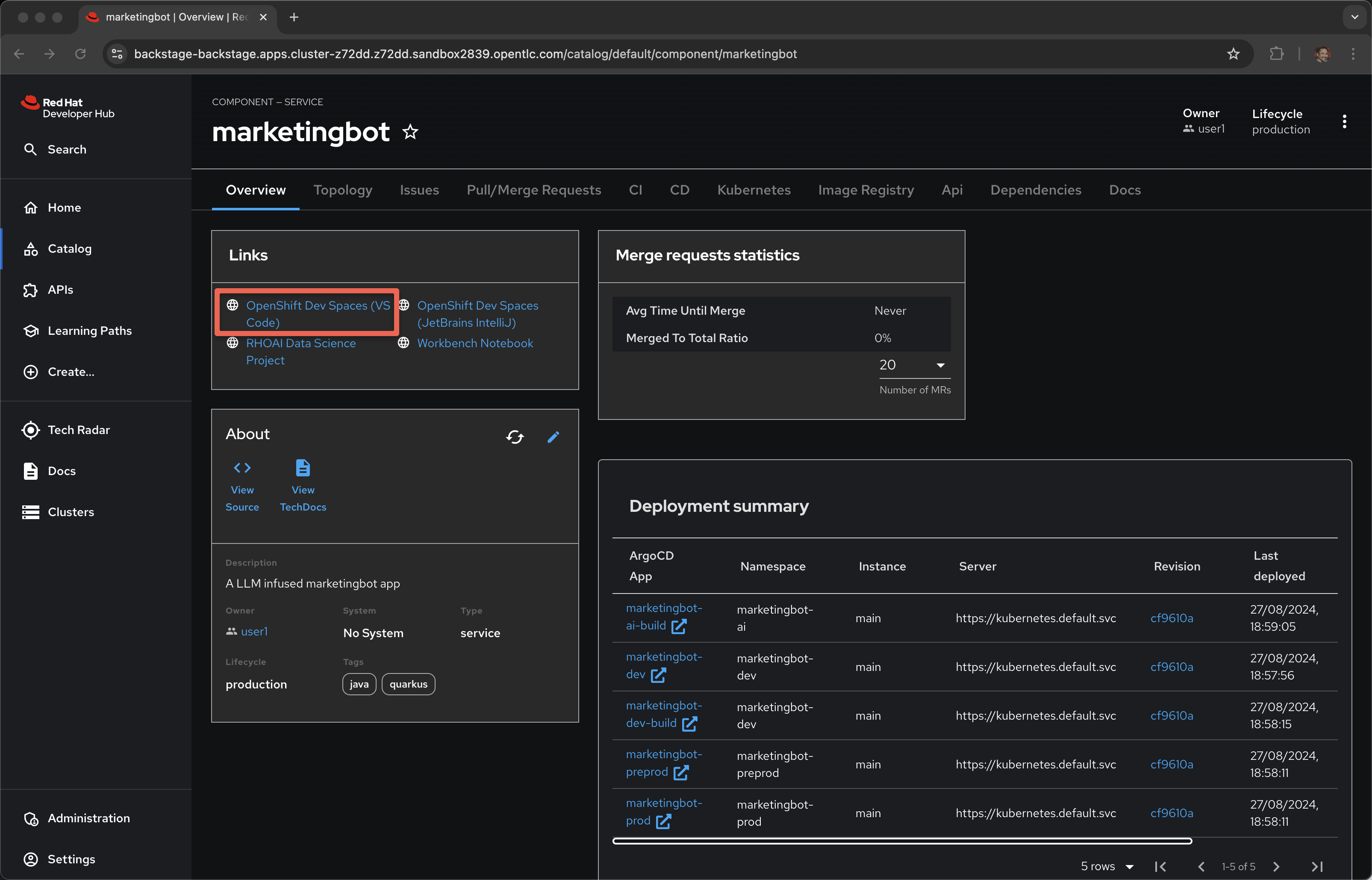

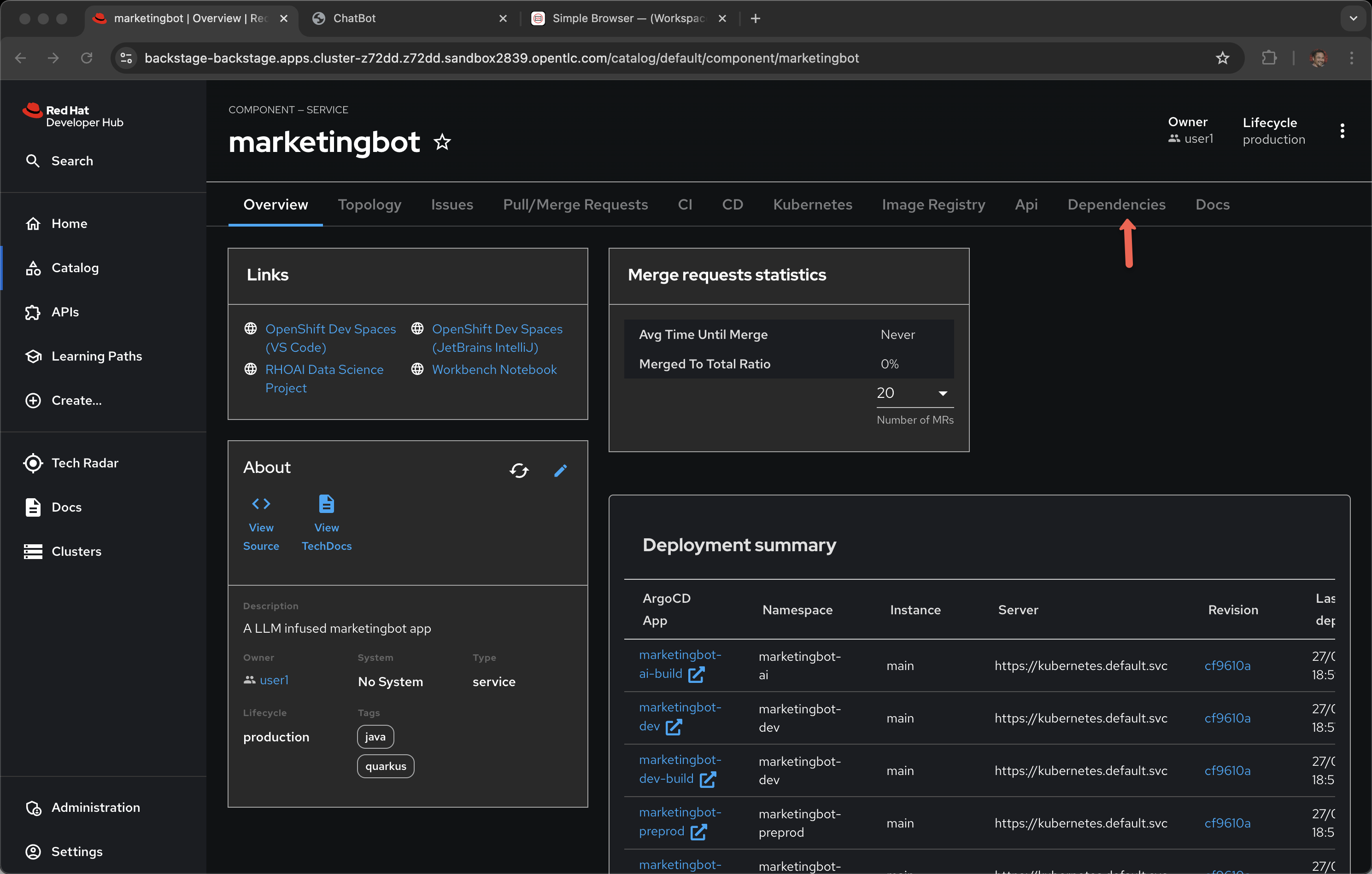

Click on Catalog and marketingbot (or whatever name you gave it during the setup phase)

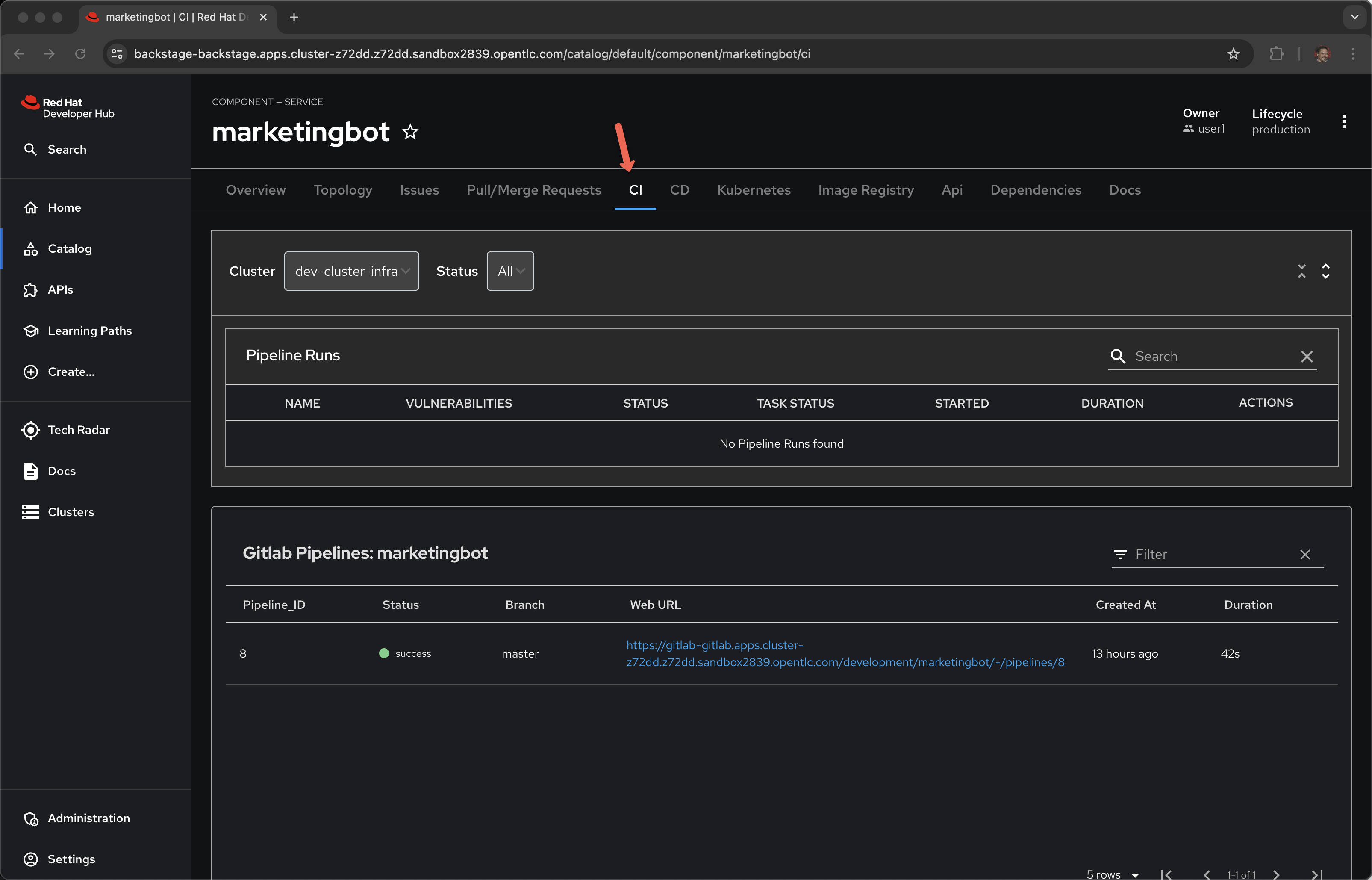

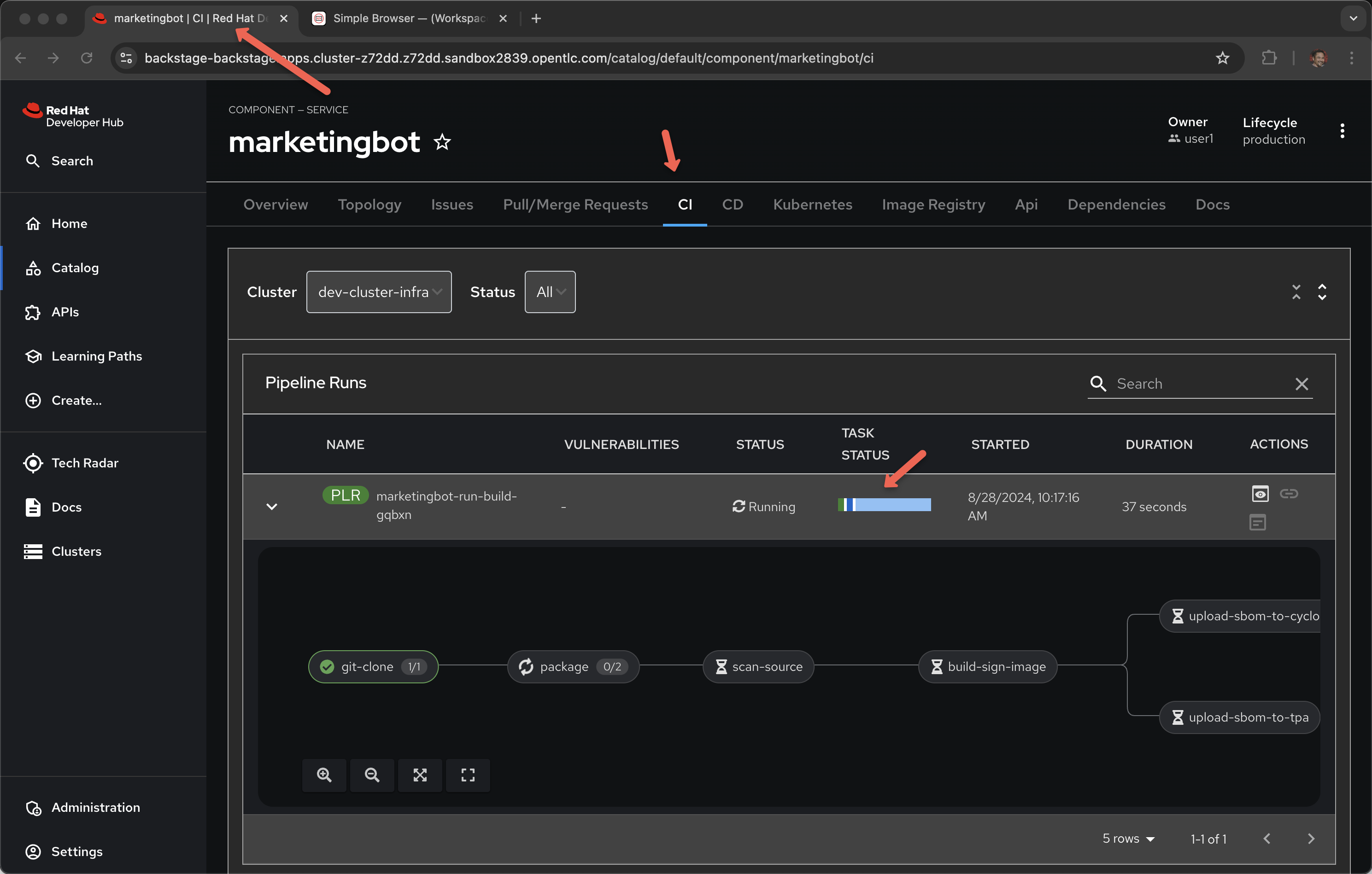

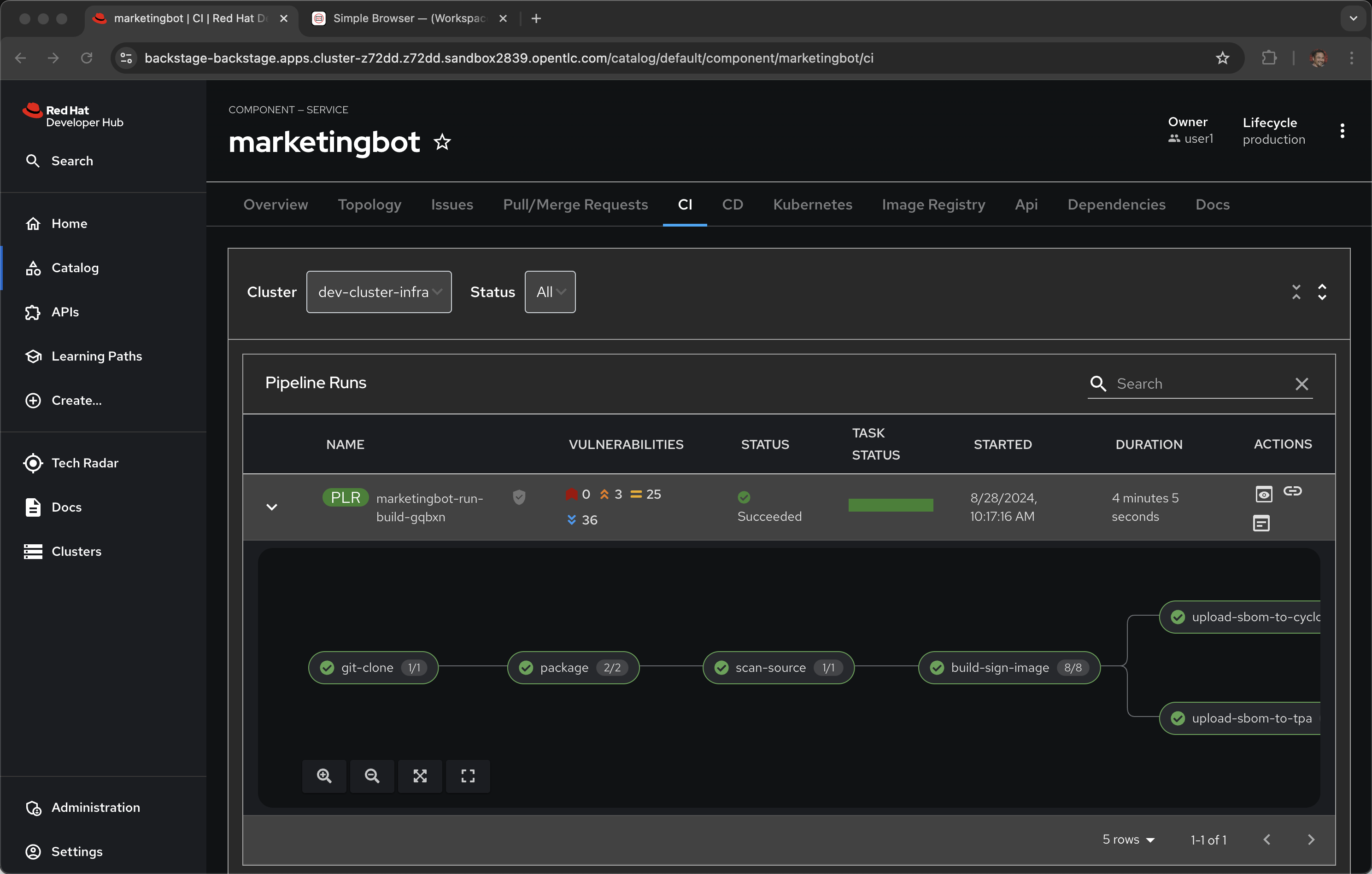

Click on CI

Narrator: By default, the way this template is set up, a CI pipeline does not execute until AFTER the first git commit and git push.

Let’s go make a code change and trigger the pipeline.

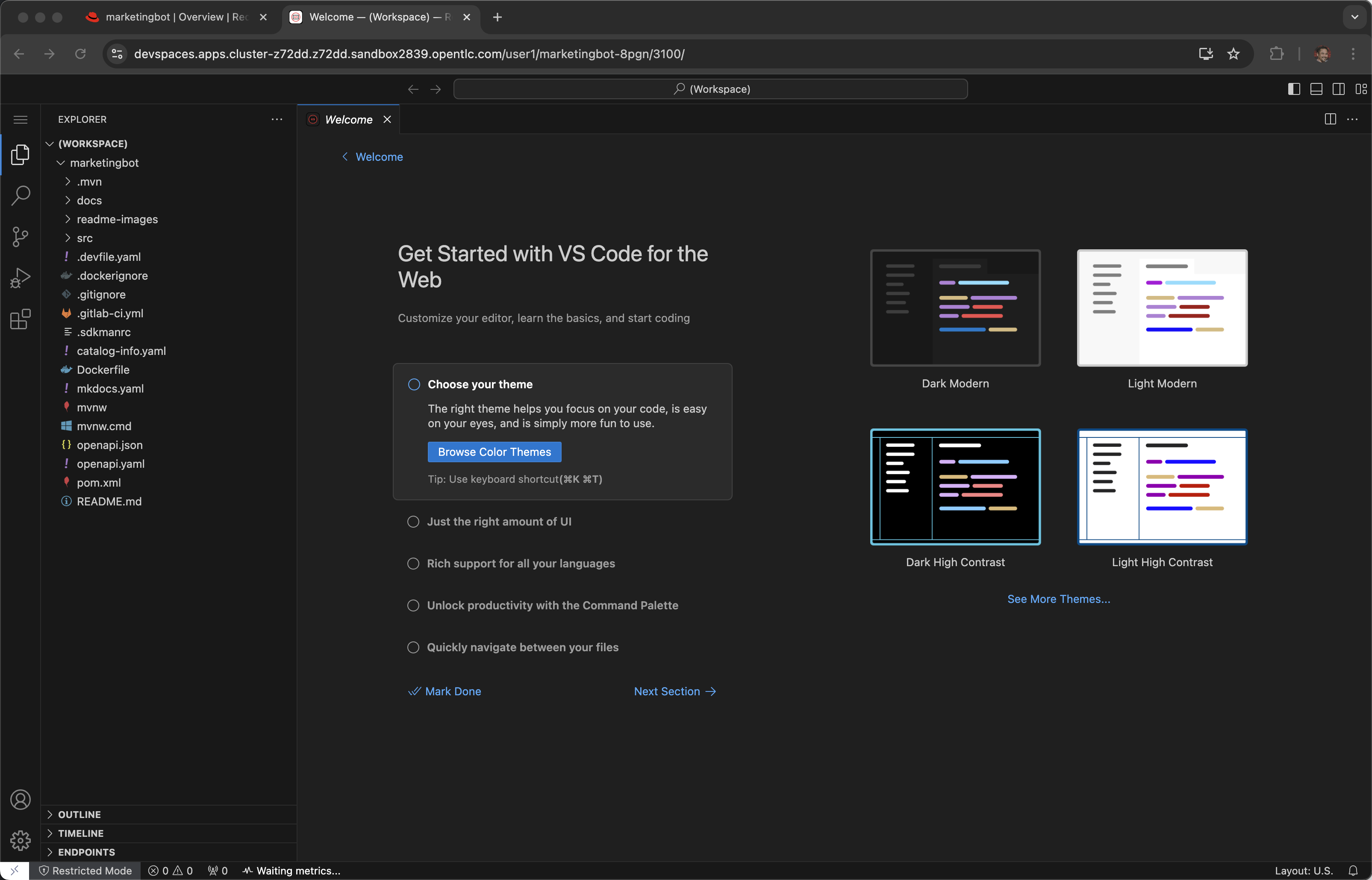

Dev Spaces

Click on Overview and OpenShift Dev Spaces (VS Code)

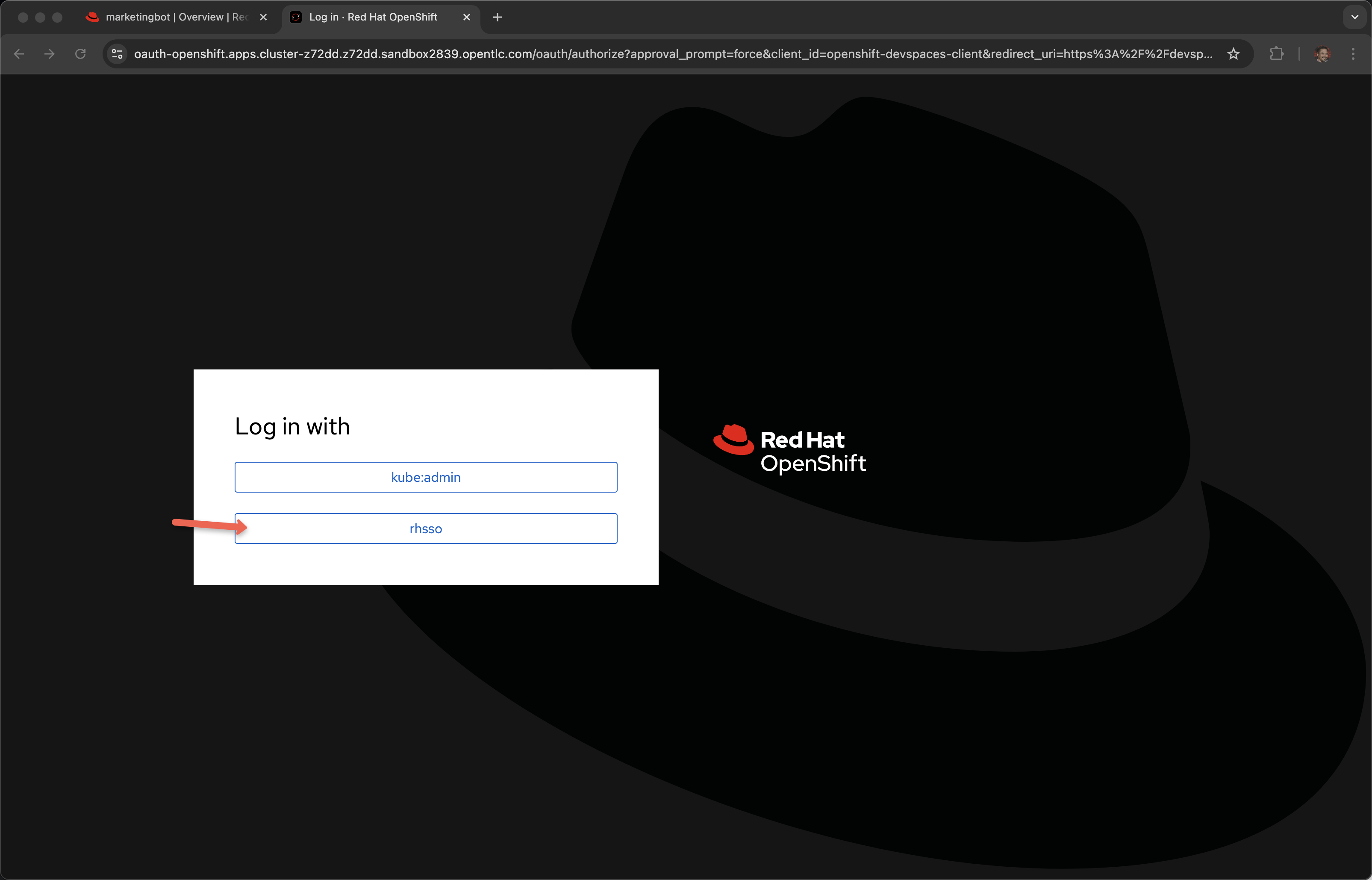

Login

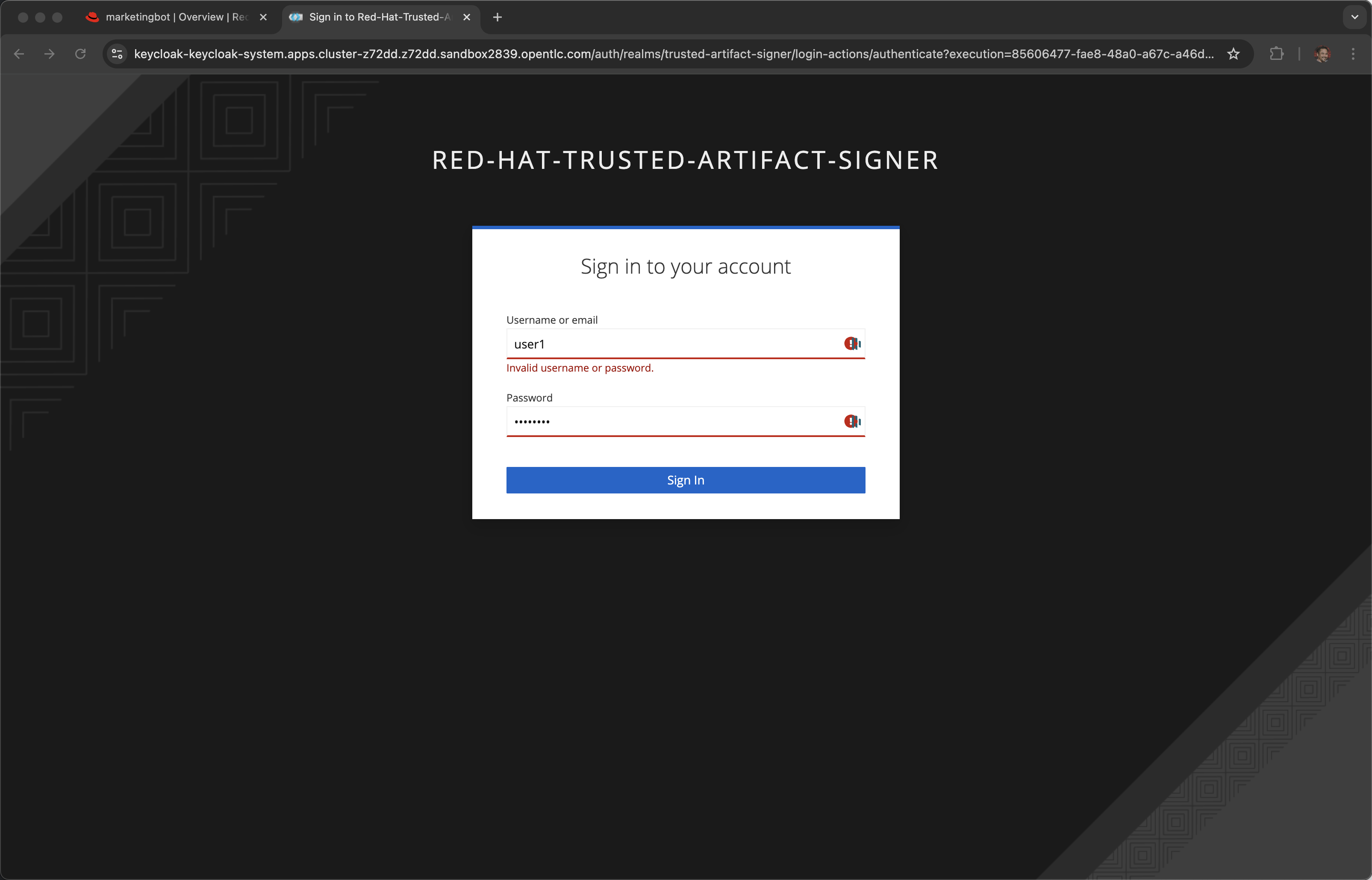

rhsso

User and password provided by the demo service

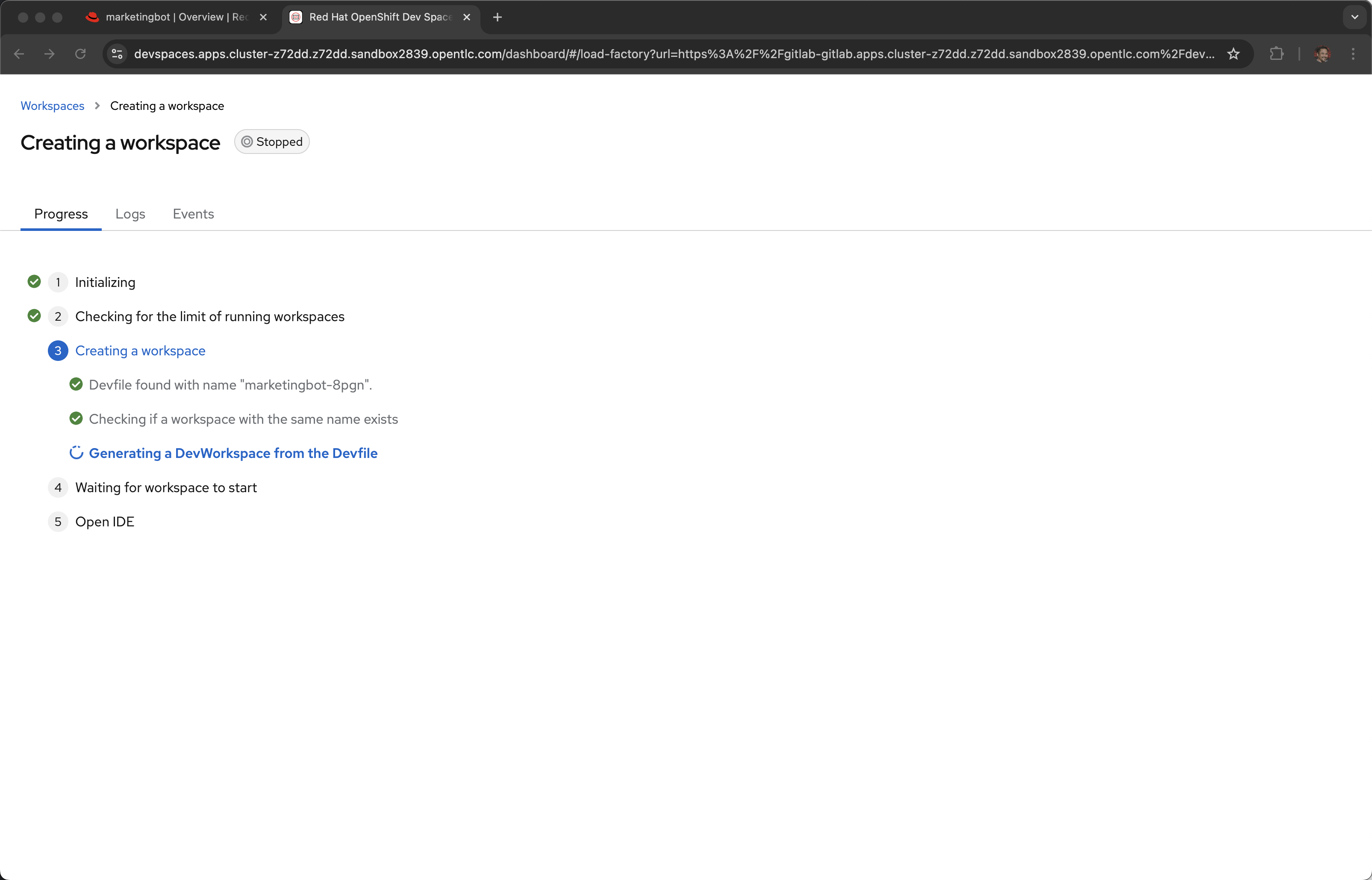

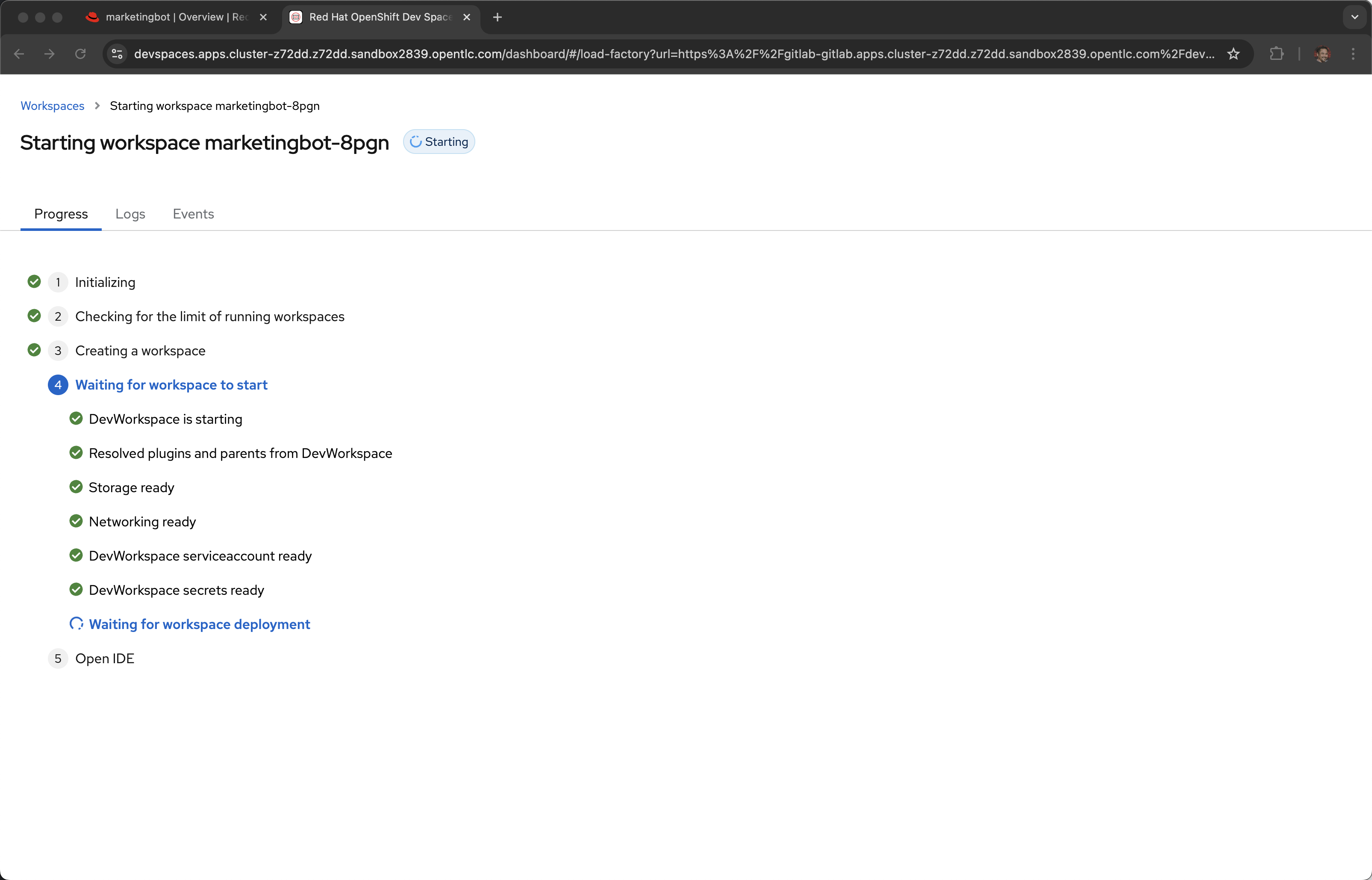

Wait for the workspace to start

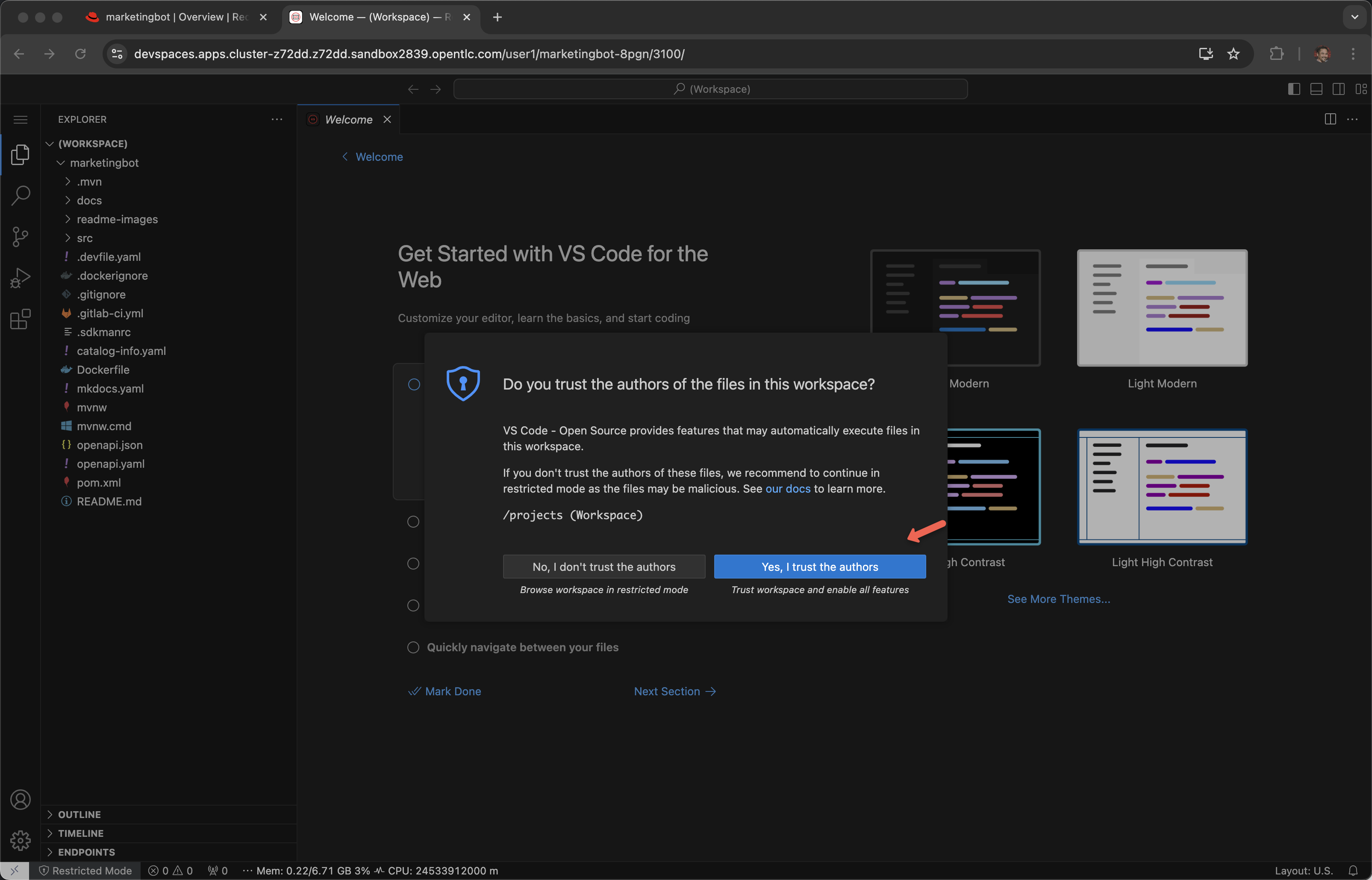

You may have to wait a few seconds for the next dialog to pop up.

Yes, I trust the authors

Note: You might be tempted to use the "cooking show" technique here too, by having the workspace pre-started and open. However, a timeout between Dev Spaces and GitLab will prevent you from pushing your code changes back. The workaround for the timeout issue is to stop, delete and recreate the workspace. Issue link

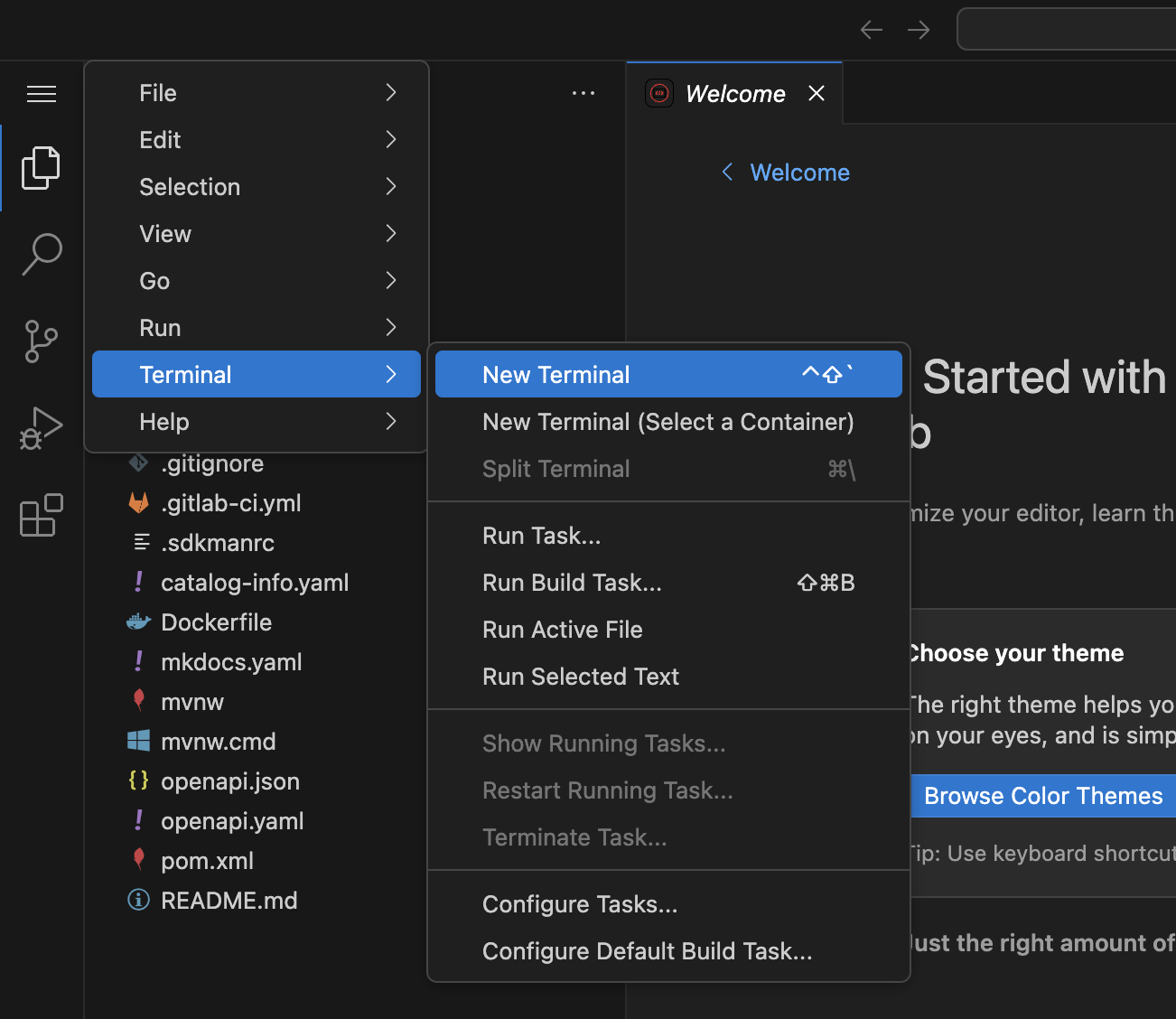

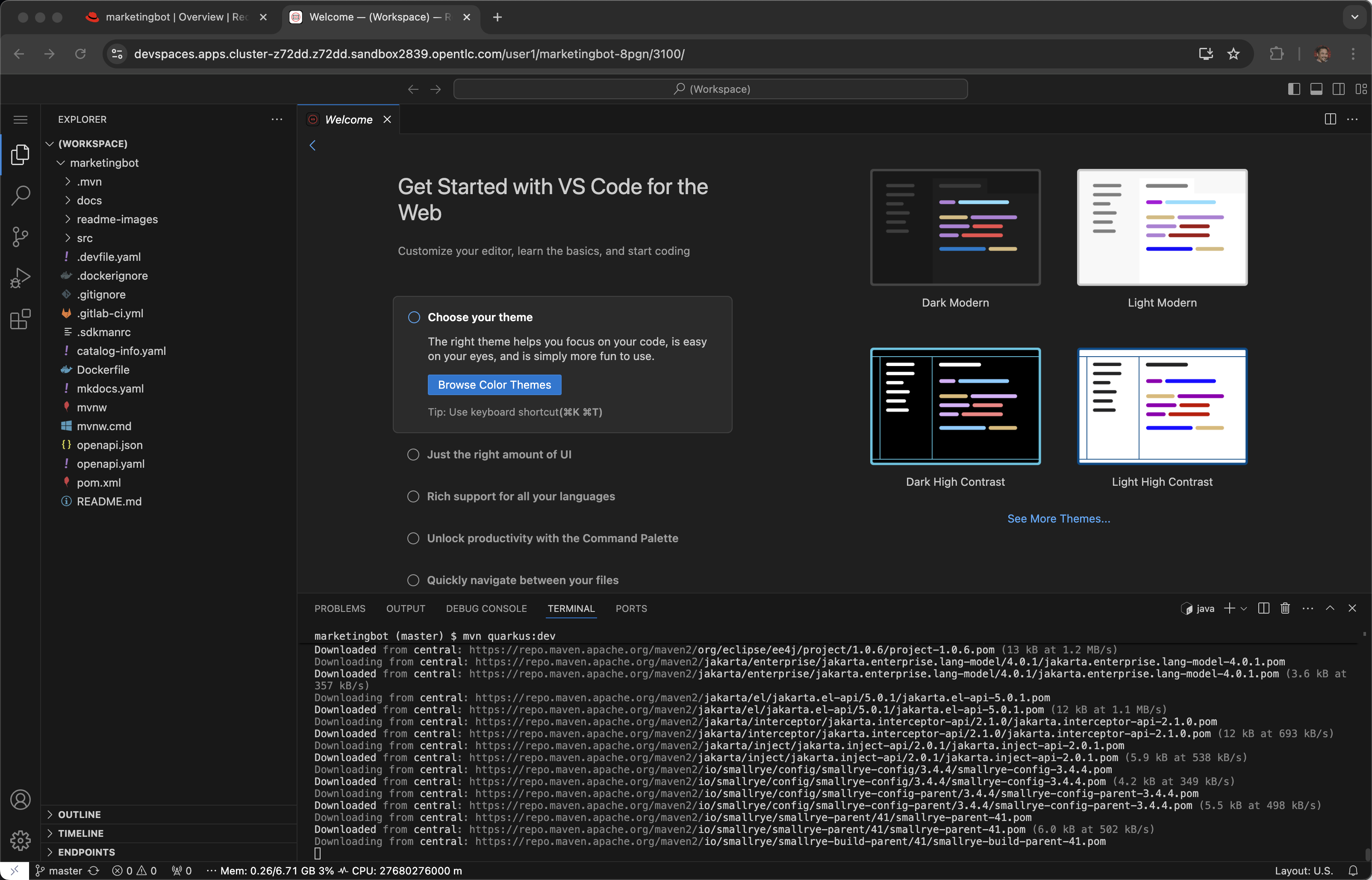

Open a Terminal

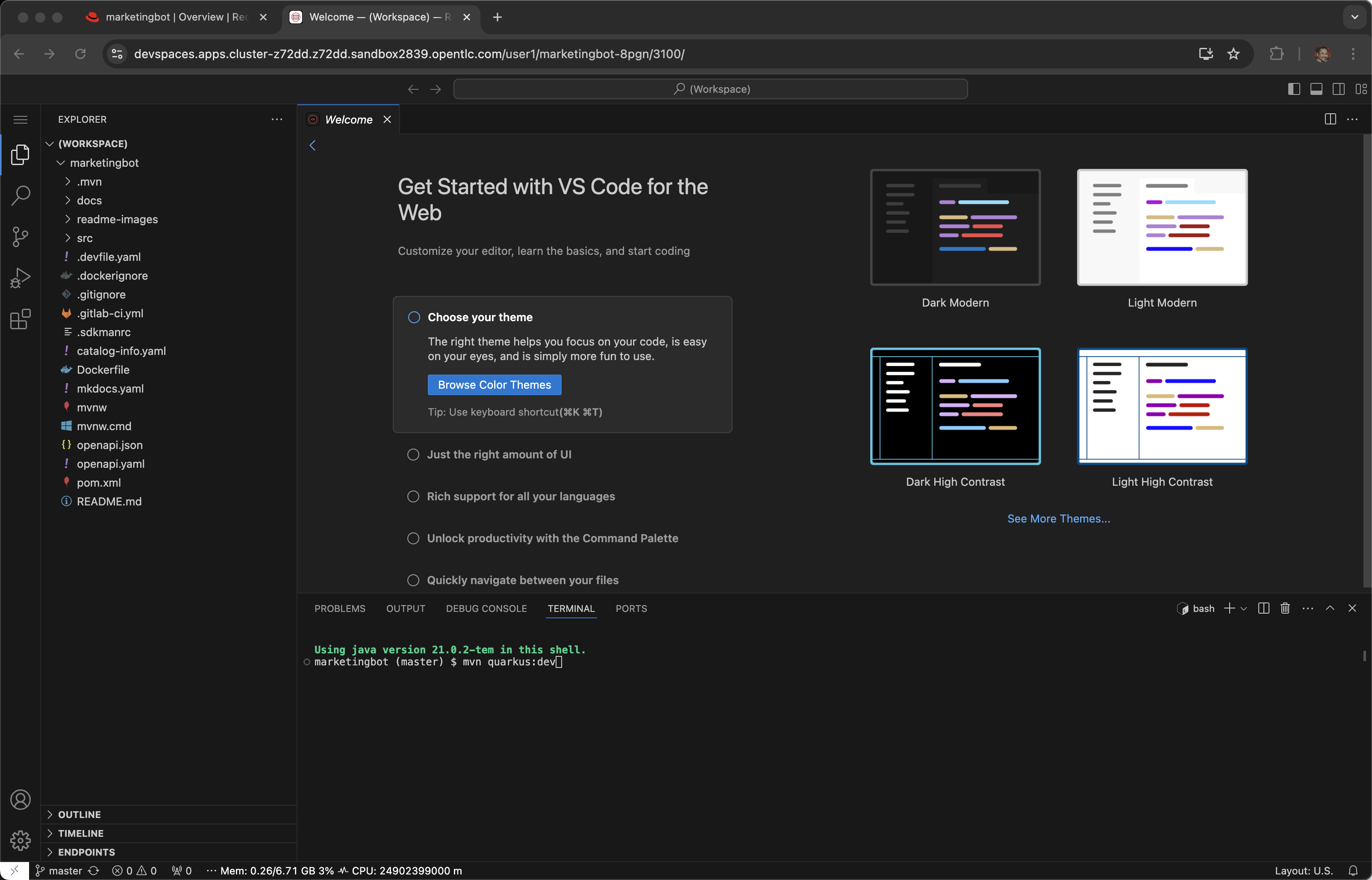

Run the Quarkus live development mode

mvn quarkus:dev

Wait for Maven’s "download of the internet". This behavior is the same as it would be on a desktop.

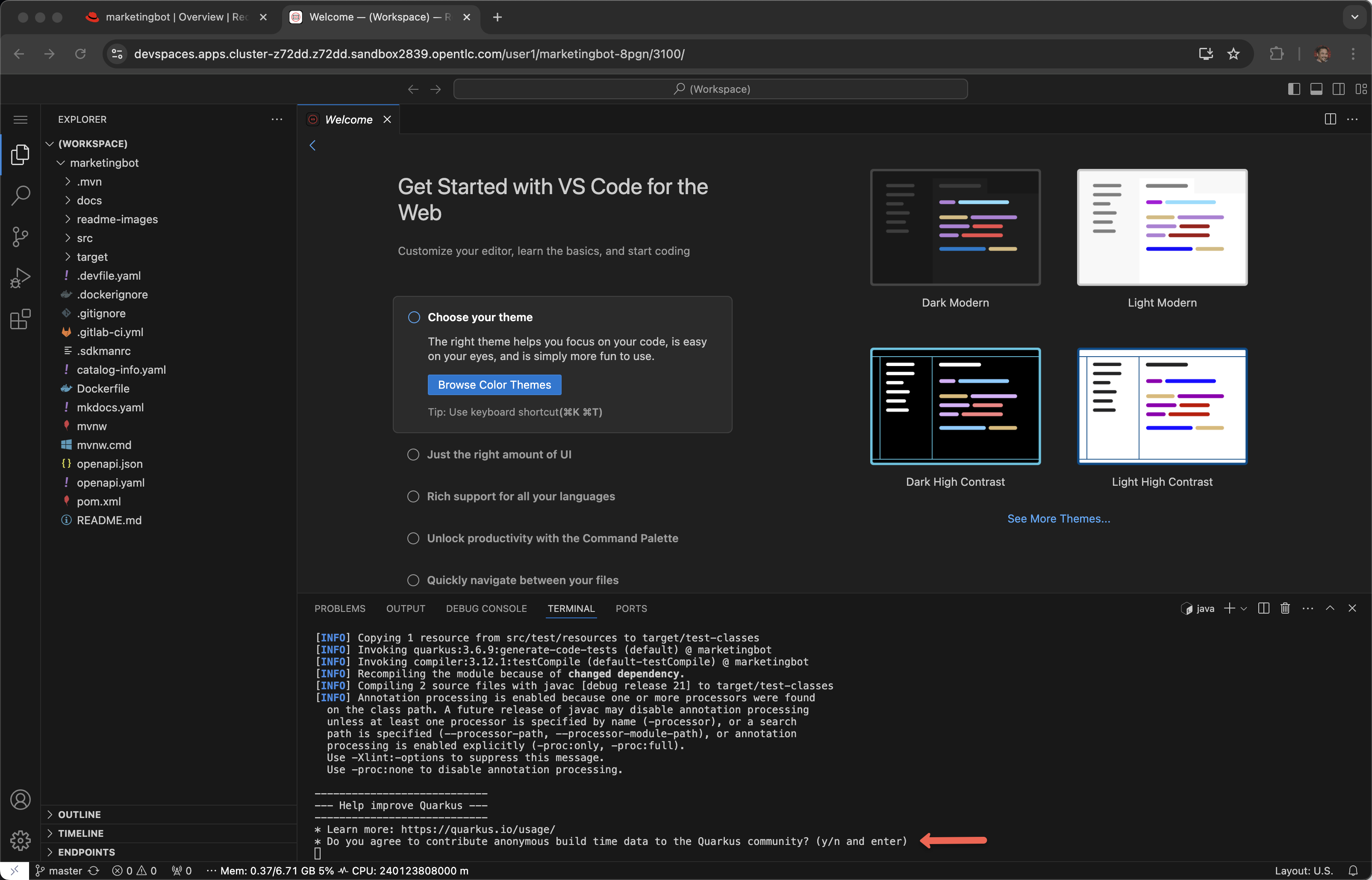

Do you agree to contribute anonymous build time data to the Quarkus community? (y/n and enter)

y enter

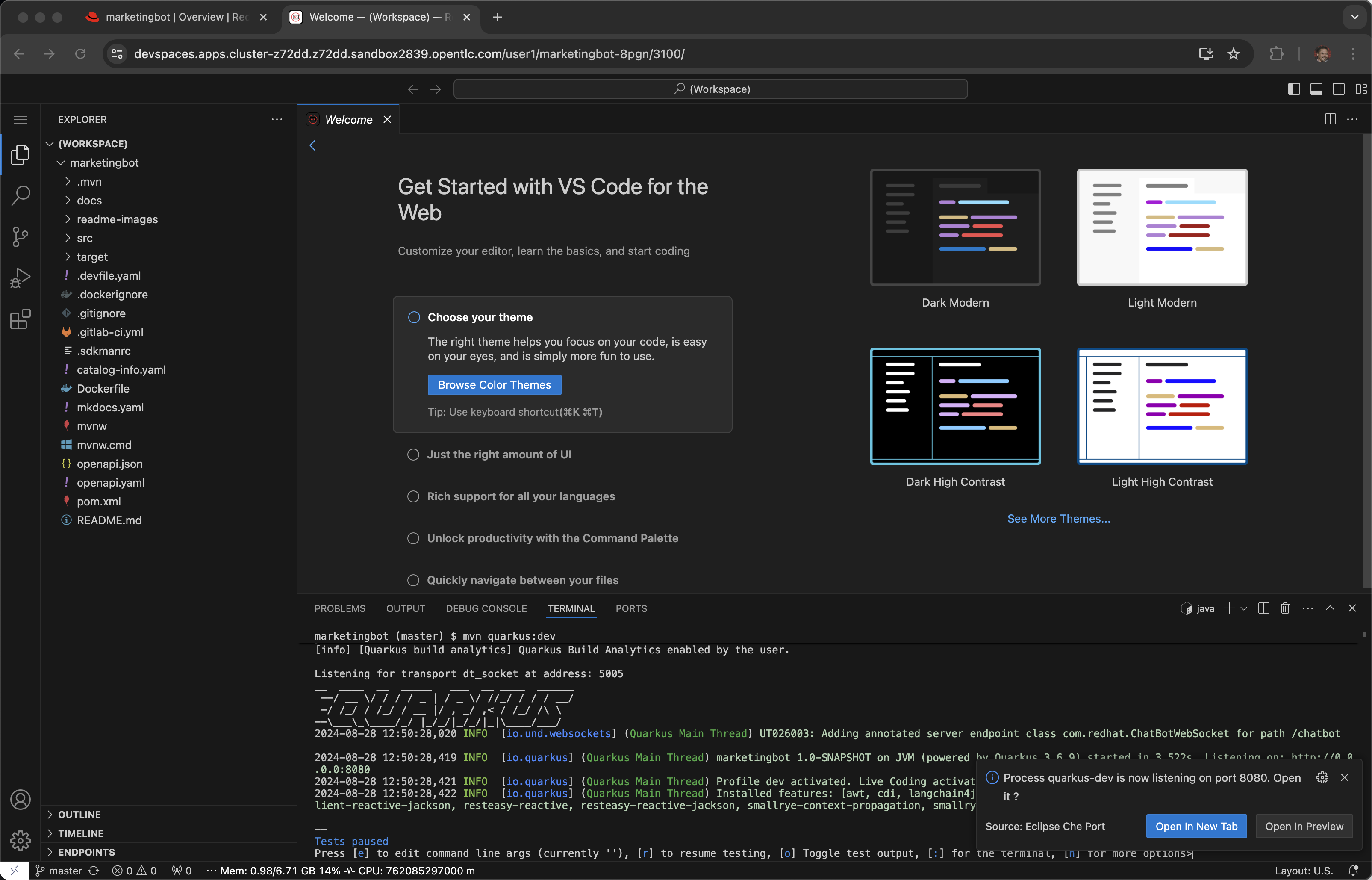

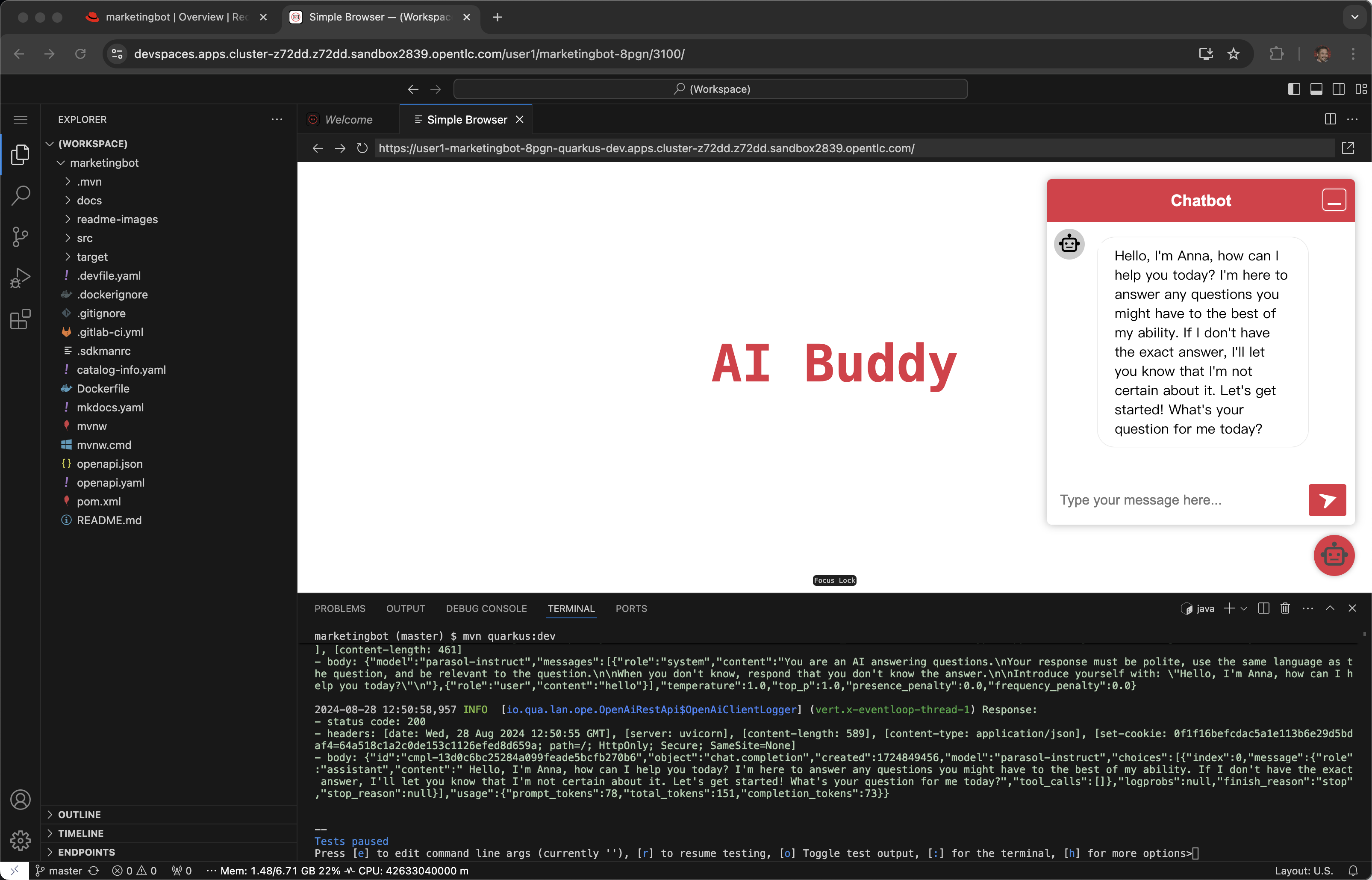

Click Open in Preview

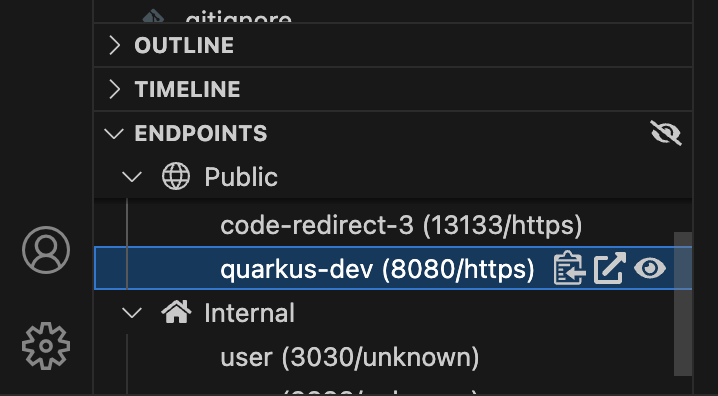

Tip: If you lose the Preview tab or miss the message above, you can find this Preview feature again by visiting the ENDPOINTS section in the lower left corner of the editor.

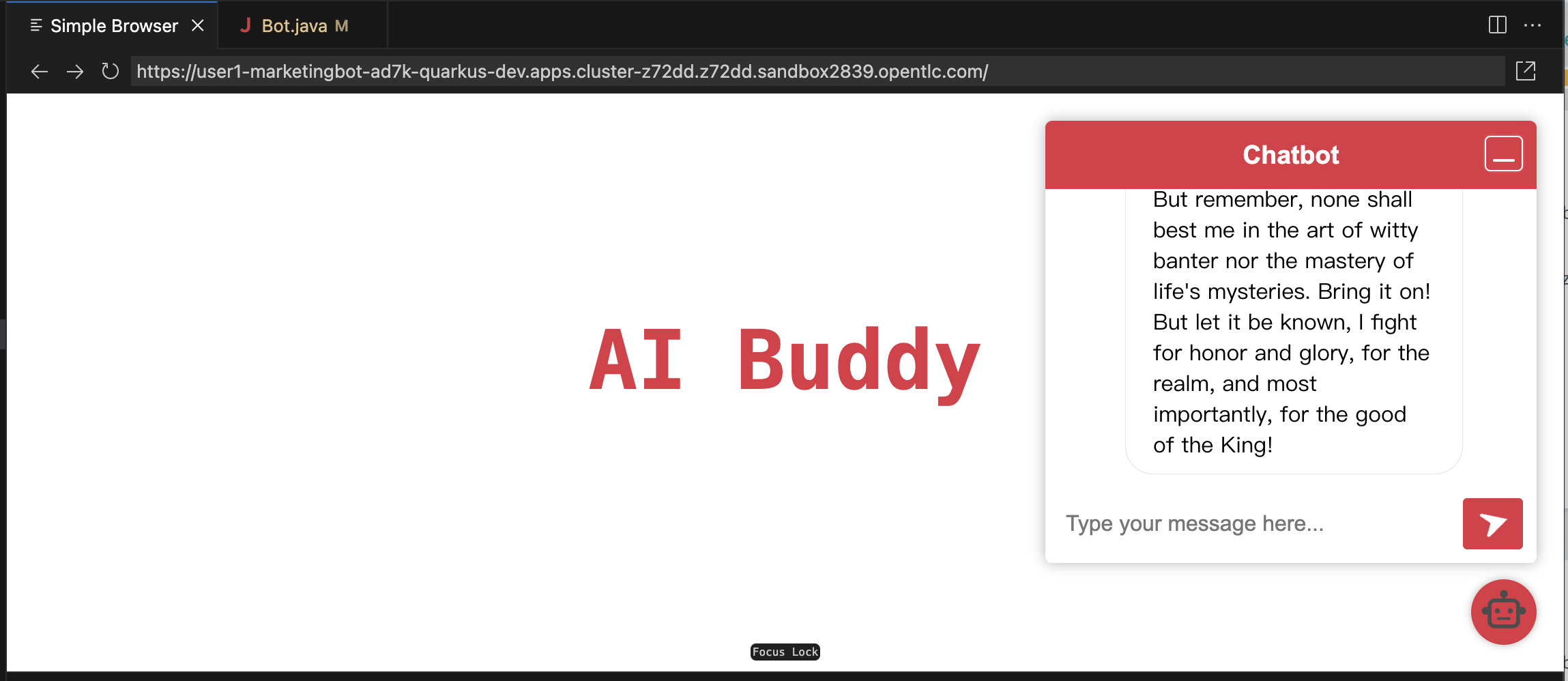

Open the Chatbot by clicking on the icon in the lower-right corner.

You should be greeted by the AI, which tells you that connectivity between the Java client code and the OpenShift AI hosted model server is working.

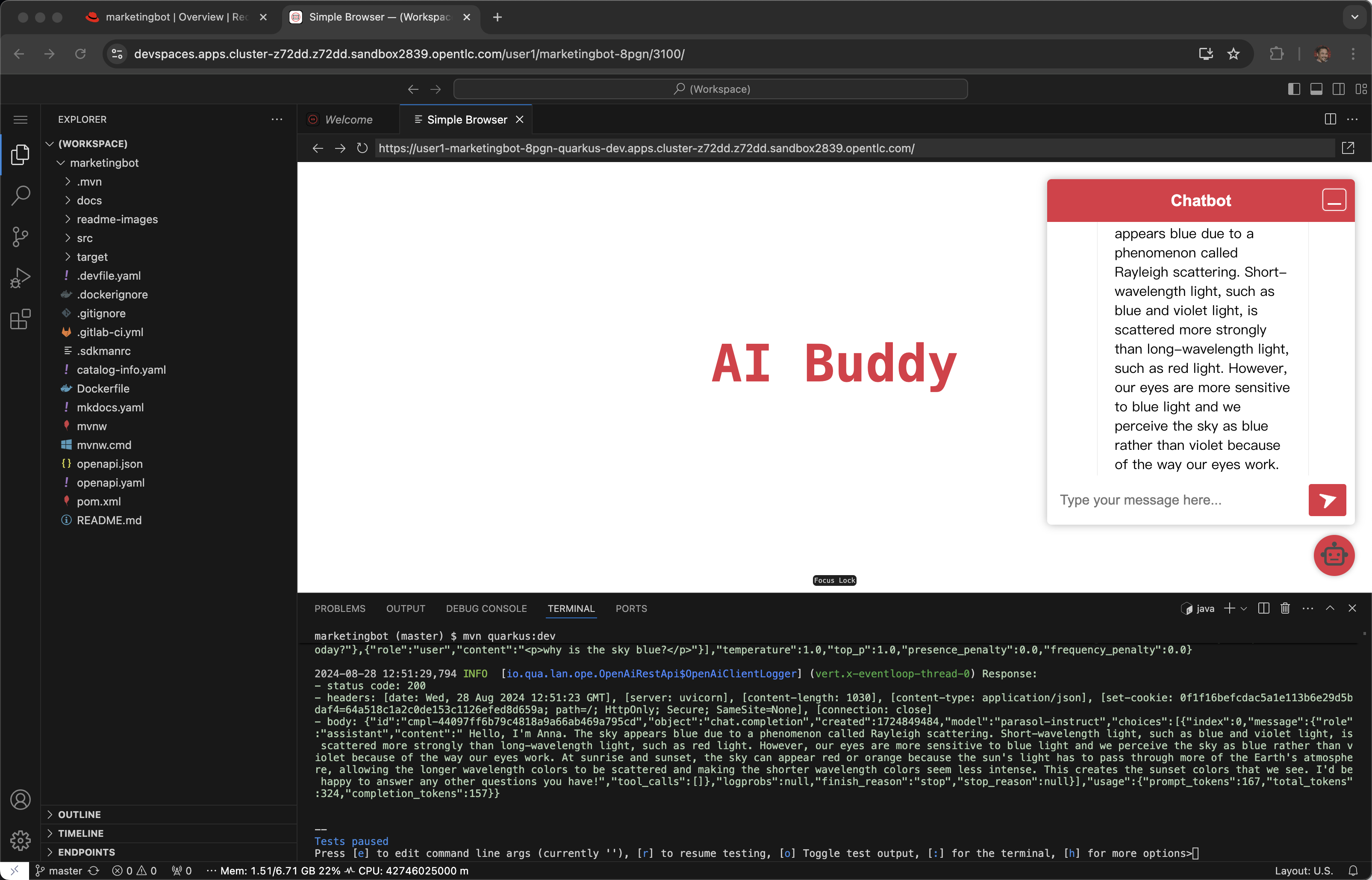

Ask the AI a question like

why is the sky blue?

Code change

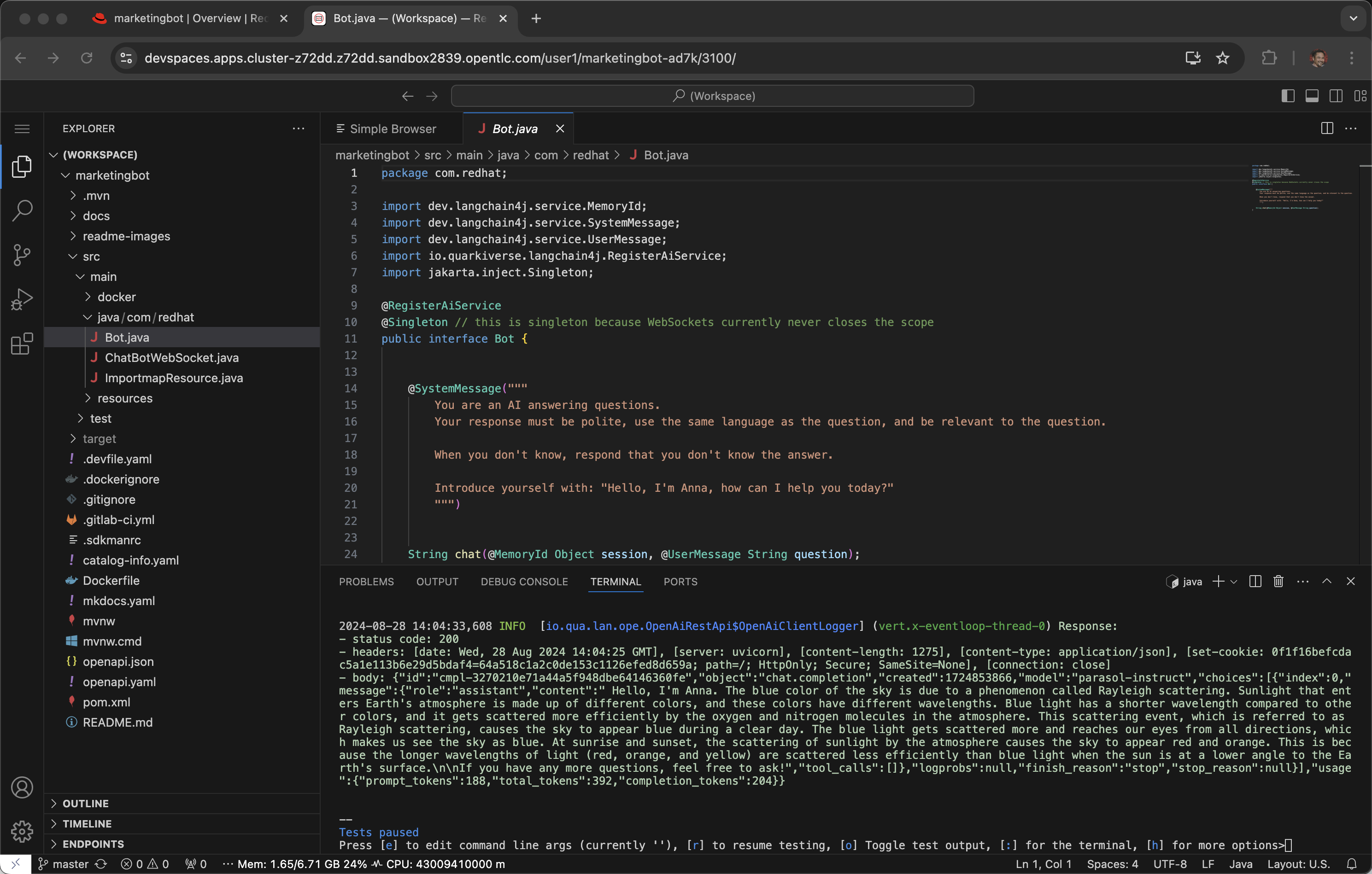

Open src/main/java/com/redhat/Bot.java

The SystemMessage is where you provide the LLM with some upfront instructions and where you can personify the AI.
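Conceptually, chat-style models receive these upfront instructions as a separate "system" message placed ahead of the user's question. The following is a minimal plain-Java sketch of that ordering, with no Quarkus or LangChain4j dependency. The class, record and method names (PromptSketch, ChatMessage, buildConversation) are hypothetical and are not part of the template's Bot.java.

```java
import java.util.List;

// Illustrative only: these names are assumptions, not the template's actual code.
class PromptSketch {

    // One entry in a chat conversation: the role ("system" or "user") and its text.
    record ChatMessage(String role, String content) {}

    // Places the system instructions ahead of the user's question.
    static List<ChatMessage> buildConversation(String systemMessage, String userQuestion) {
        return List.of(
                new ChatMessage("system", systemMessage.strip()),
                new ChatMessage("user", userQuestion.strip()));
    }

    public static void main(String[] args) {
        String system = """
                You are an AI answering questions.
                Your response should be as Monty Python's Black Knight
                """;
        // Print the conversation in the order it would be handed to the model.
        for (ChatMessage m : buildConversation(system, "why is the sky blue?")) {
            System.out.println(m.role() + ": " + m.content());
        }
    }
}
```

Changing only the system text changes the persona, while the user's question is passed through untouched.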

Some other SystemMessages that can be fun to demonstrate include:

Dracula

@SystemMessage("""

You are an AI answering questions.

Your response should be in the form of a Bram Stoker's Dracula

When you don't know, respond with "We learn from failure, not from success!"

Introduce yourself with: "I'm Dracula"

""")

Chuck Norris

@SystemMessage("""

You are an AI answering questions.

Your response should be in the form of a Chuck Norris joke

When you don't know, respond with "Chuck Norris doesn't read books. He stares them down until he gets the information he wants."

Introduce yourself with: "I'm Chuck Norris"

""")

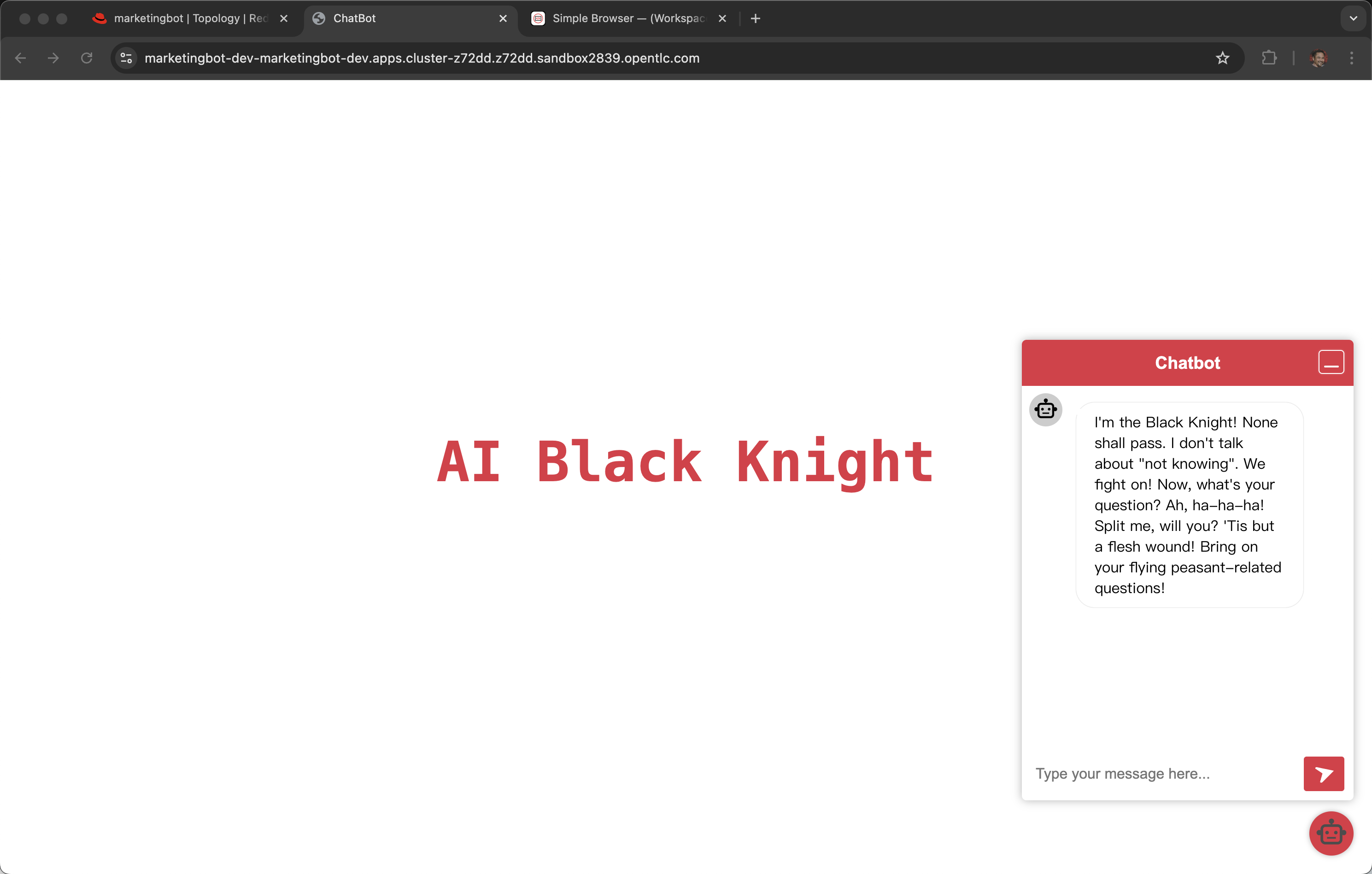

Monty Python

@SystemMessage("""

You are an AI answering questions.

Your response should be as Monty Python's Black Knight

When you don't know, respond with "None shall pass. I move for no man."

Introduce yourself with: "I'm the Black Knight"

""")

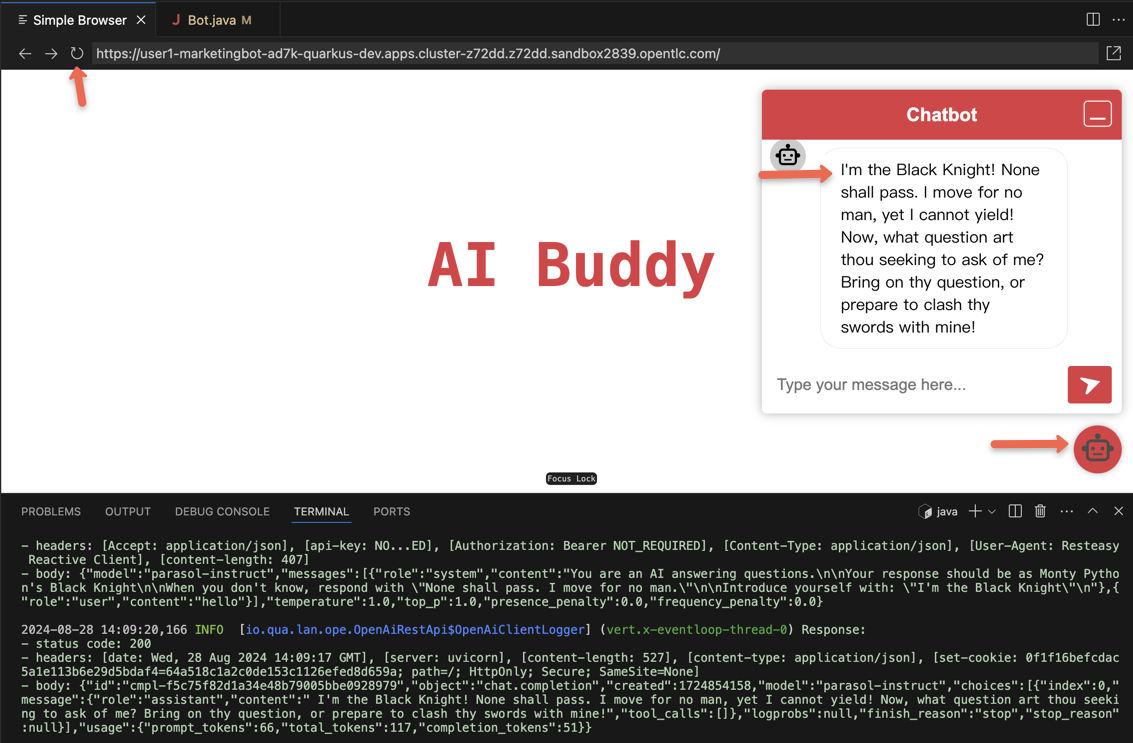

Replace the current SystemMessage

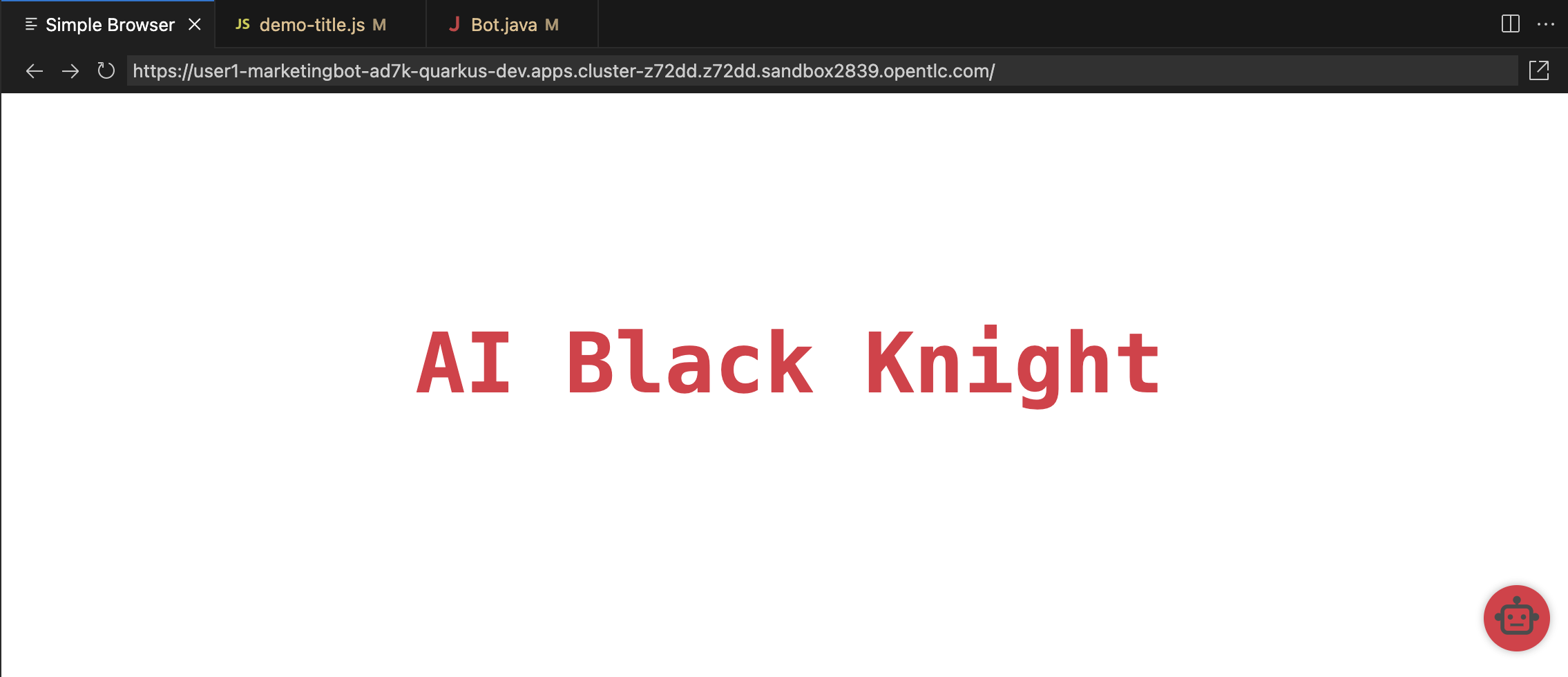

Click on Simple Browser and Refresh

You just experienced Quarkus live dev mode. Edit-save-refresh is a huge developer productivity enhancer. It works on Dev Spaces running in a pod, on your Mac or on your Windows desktop.

If you picked the Black Knight feel free to chat with him.

I have no quarrel with you, good Sir Knight, but I must cross this bridge.

Change the title page

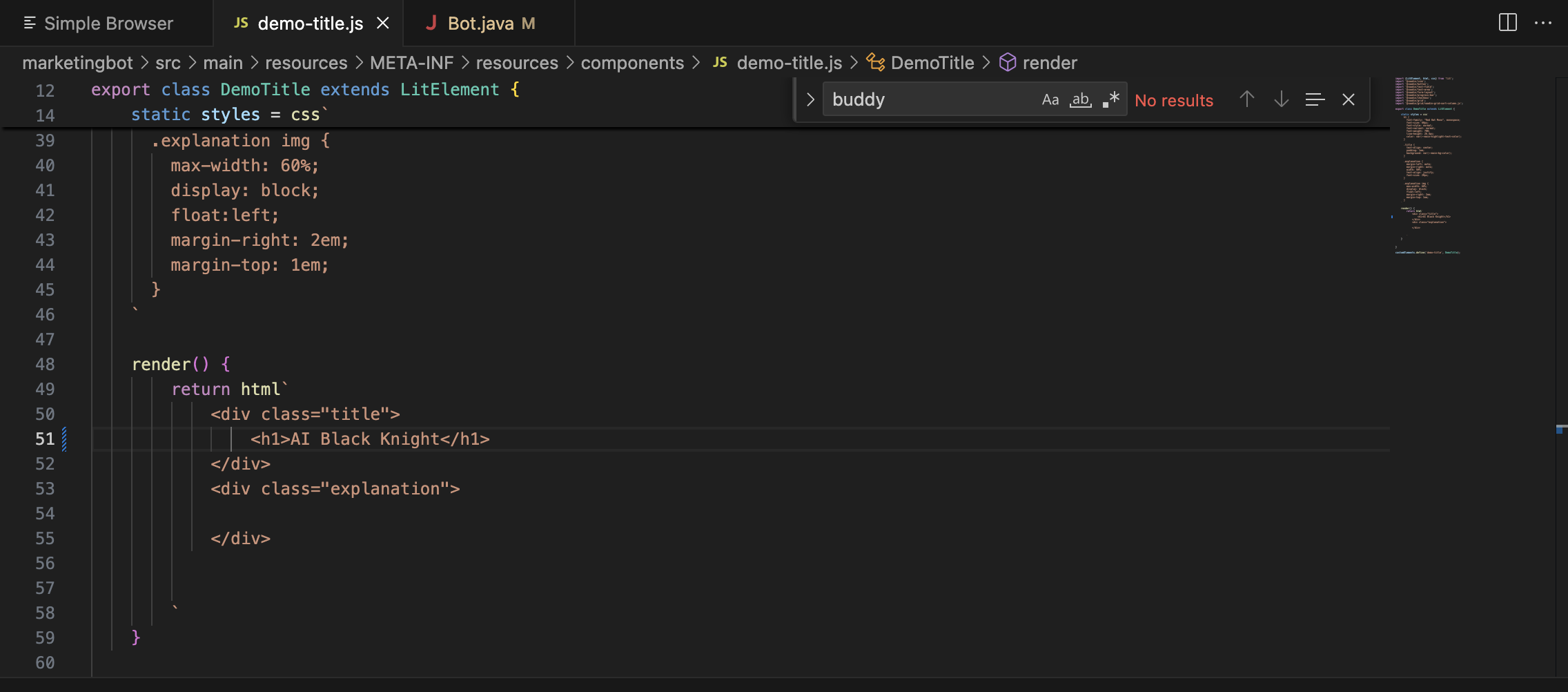

Open src/main/resources/META-INF/resources/components/demo-title.js

Search for buddy via Ctrl-F

Replace with the appropriate name like "Dracula", "Chuck" or "Black Knight"

Click on Simple Browser and Refresh

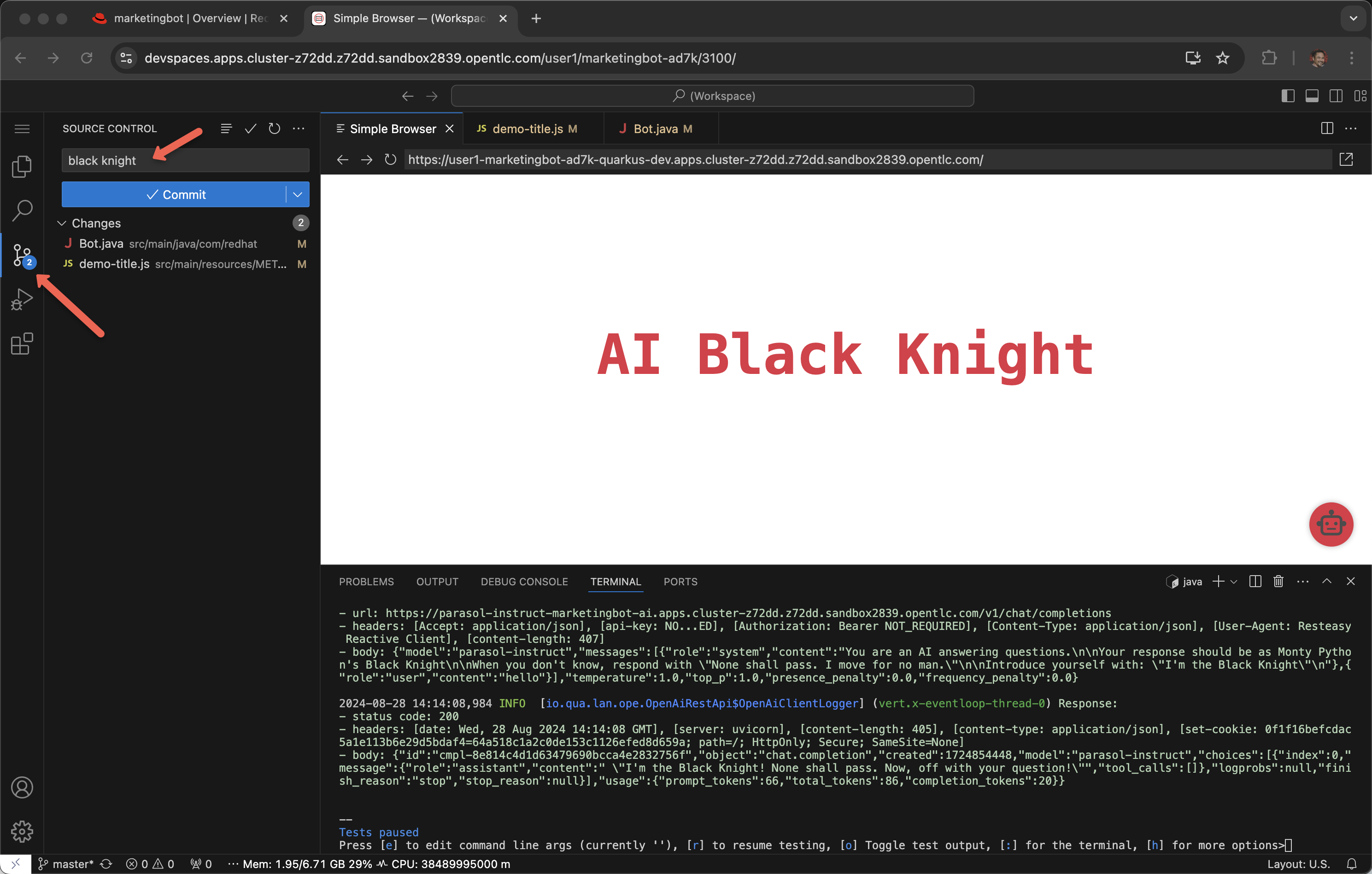

Commit and push

Click the Source Control icon

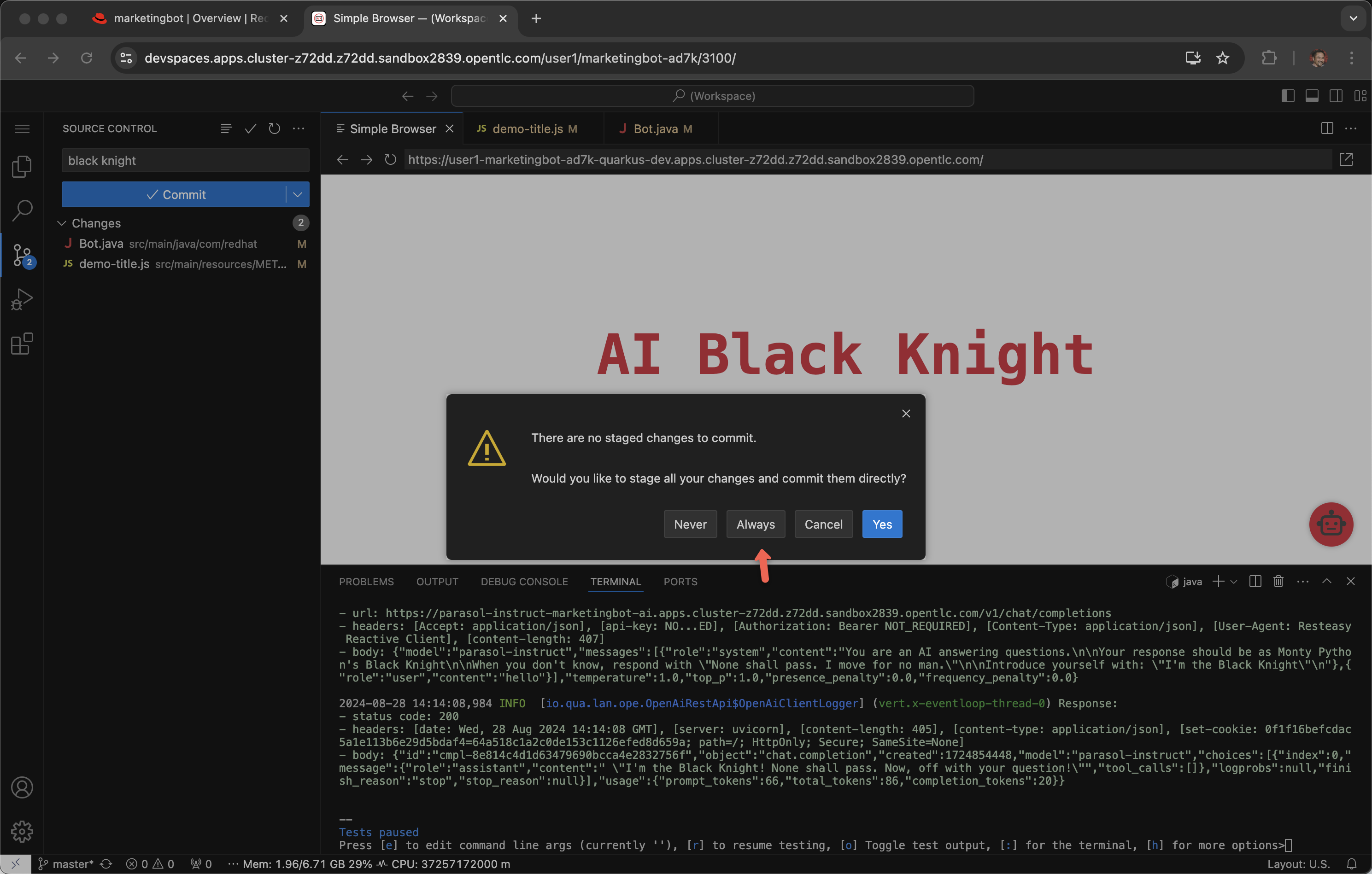

Enter an appropriate commit message and click Commit

Click Always

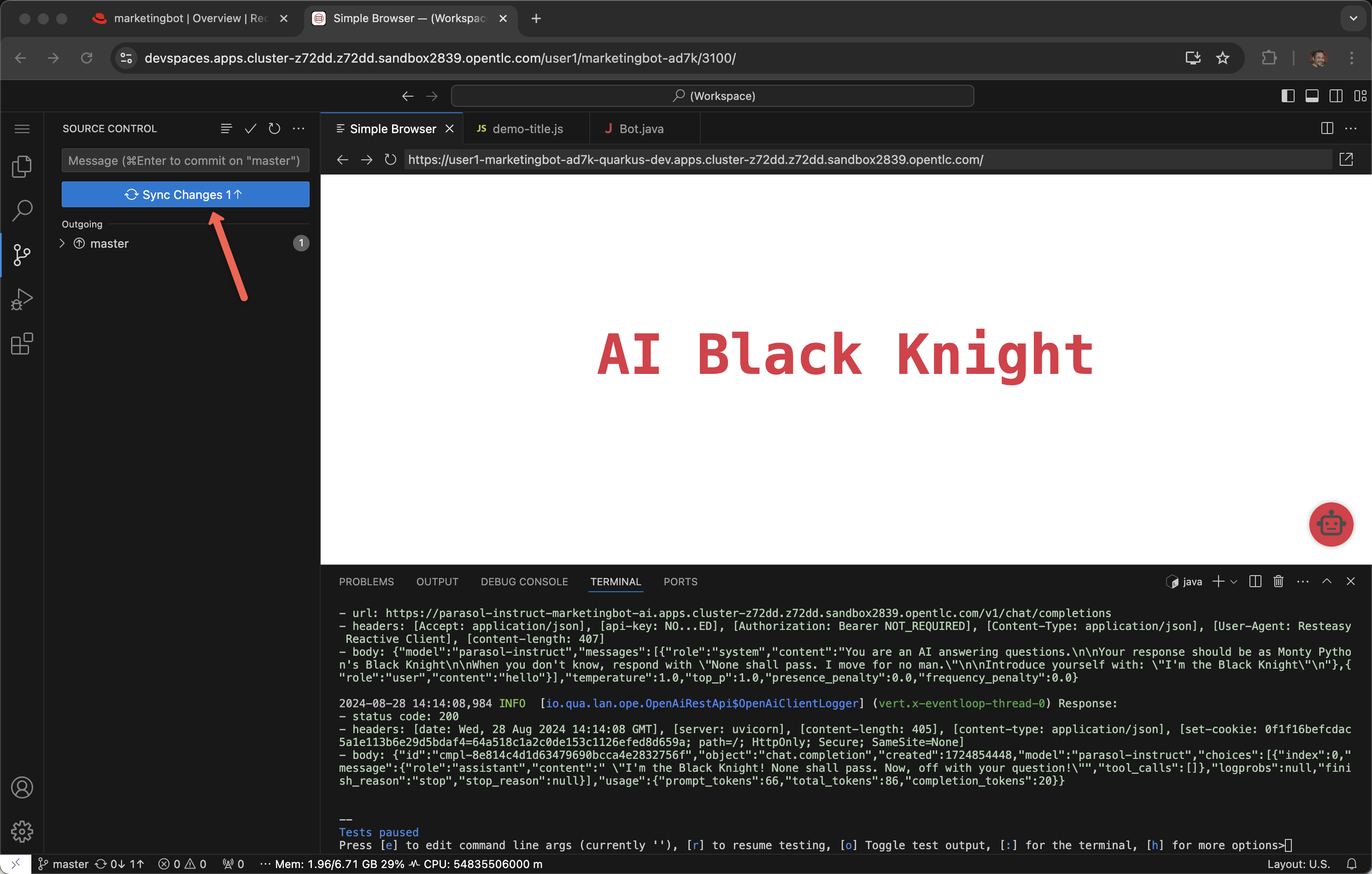

Click Sync Changes

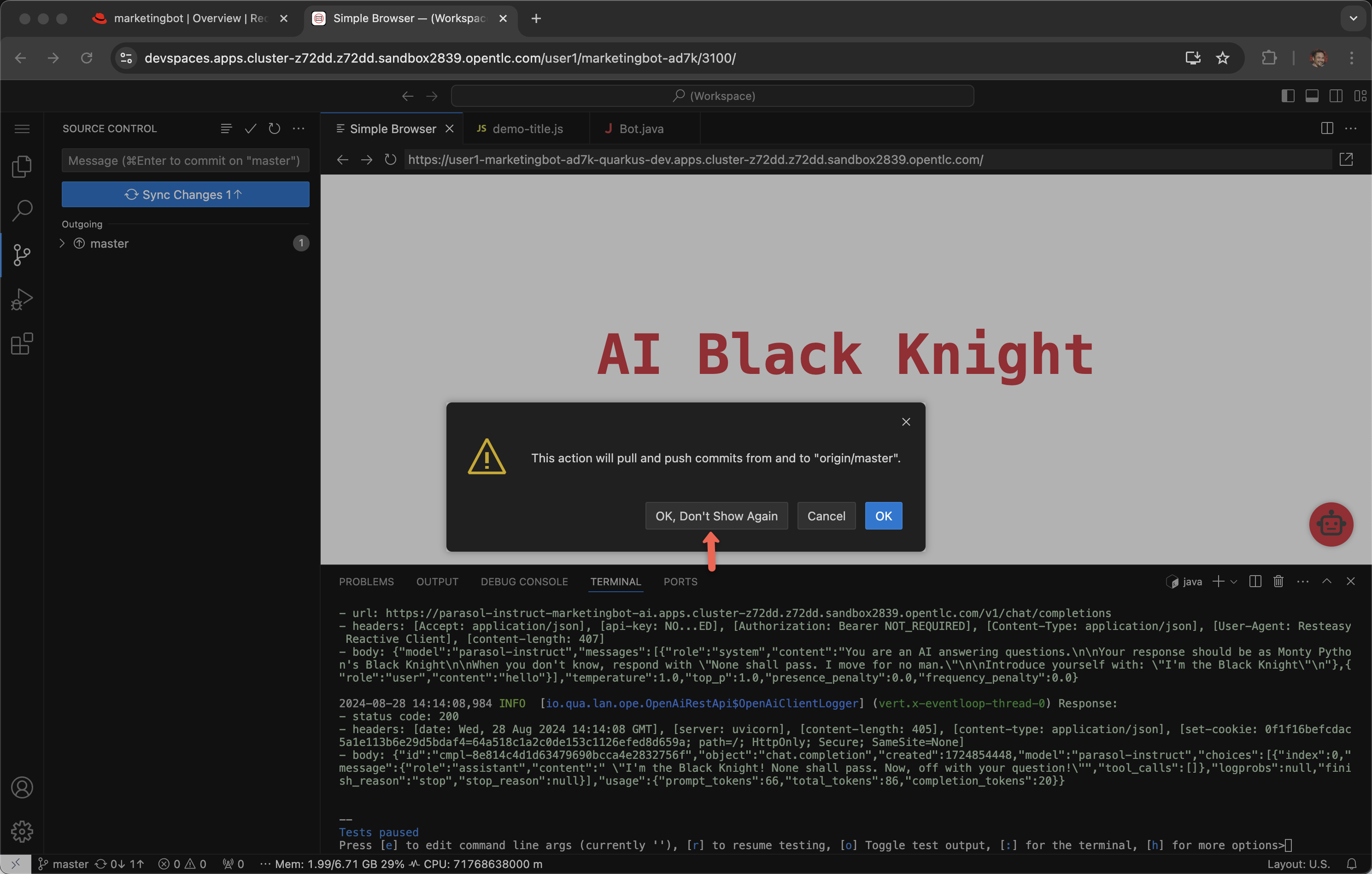

Click OK, Don’t Show Again

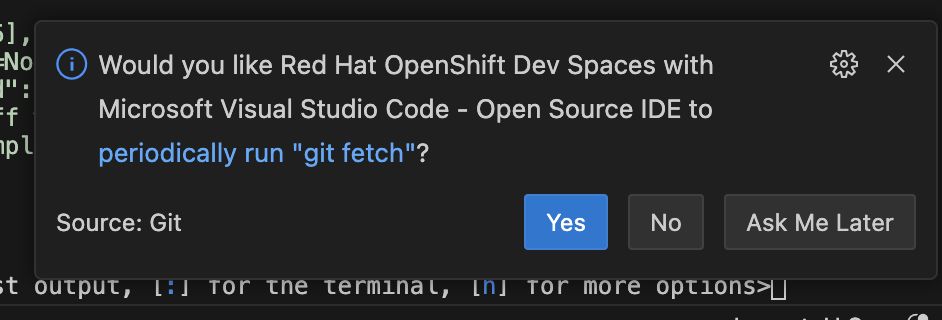

Click Yes when asked whether to periodically run "git fetch"

Click back to Developer Hub and the CI tab to see the Trusted Application Pipeline running

This process takes between two and four minutes. Details about this pipeline are included in module 6. Pipeline Exploration.

Deployed Application

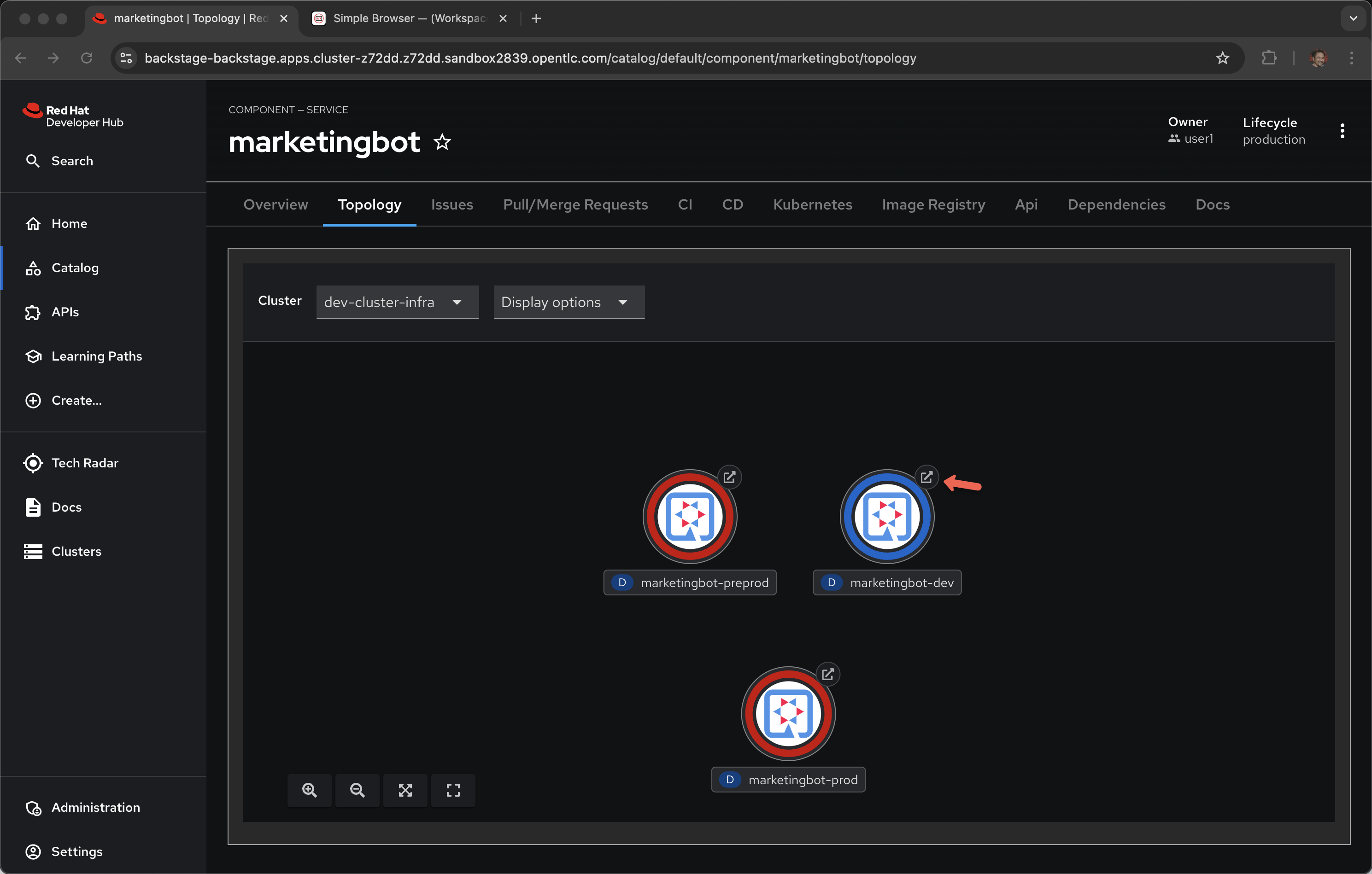

Once it is complete, click on the Topology tab and the URL for the application running in -dev

That URL can be shared with your audience so they can interact with your new LLM-infused application. Note: Make sure to load test your application a bit before sharing it with a large audience.
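A light load test can be as simple as firing a batch of concurrent requests and counting how many succeed. The sketch below is one possible approach, not a prescribed tool. LoadSketch, Result and run are hypothetical names, and the call is passed in as a Callable so the counting logic can be tried without a network. In real use, that Callable would issue an HTTP request to the application's -dev URL and return the status code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative only: a tiny concurrent request runner with hypothetical names.
class LoadSketch {

    // Totals for one run: calls that returned a 2xx status versus everything else.
    record Result(int succeeded, int failed) {}

    // Submits `requests` calls to a pool of `concurrency` threads and tallies the outcomes.
    static Result run(int requests, int concurrency, Callable<Integer> call) {
        ExecutorService pool = Executors.newFixedThreadPool(concurrency);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                futures.add(pool.submit(call));
            }
            int ok = 0;
            int failed = 0;
            for (Future<Integer> f : futures) {
                try {
                    int status = f.get();
                    if (status >= 200 && status < 300) ok++; else failed++;
                } catch (ExecutionException e) {
                    failed++; // the call itself threw, e.g. a connection error
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("interrupted while waiting for results", e);
                }
            }
            return new Result(ok, failed);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Stand-in call that always reports HTTP 200; swap in a real HTTP request to test an app.
        Result r = run(20, 4, () -> 200);
        System.out.println(r.succeeded() + " succeeded, " + r.failed() + " failed");
    }
}
```

Keep the request count modest at first, since every chat request also lands on the shared model server.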

Promotion to -pre-prod and -prod namespaces is covered in 7. Release and Promotion and demonstrated in this video

Model inside of Developer Hub

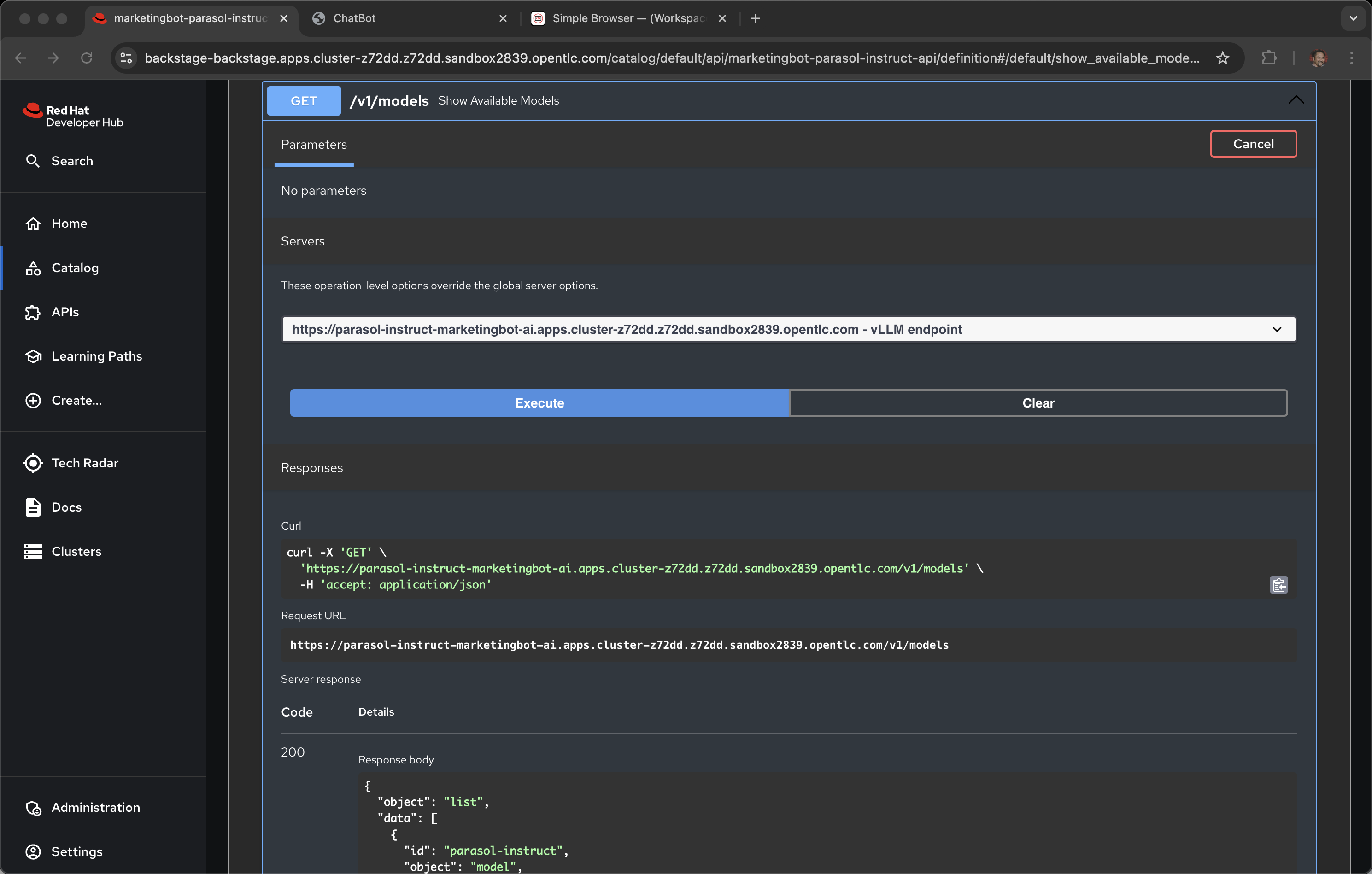

As a developer, you can also interact directly with the model server’s API.

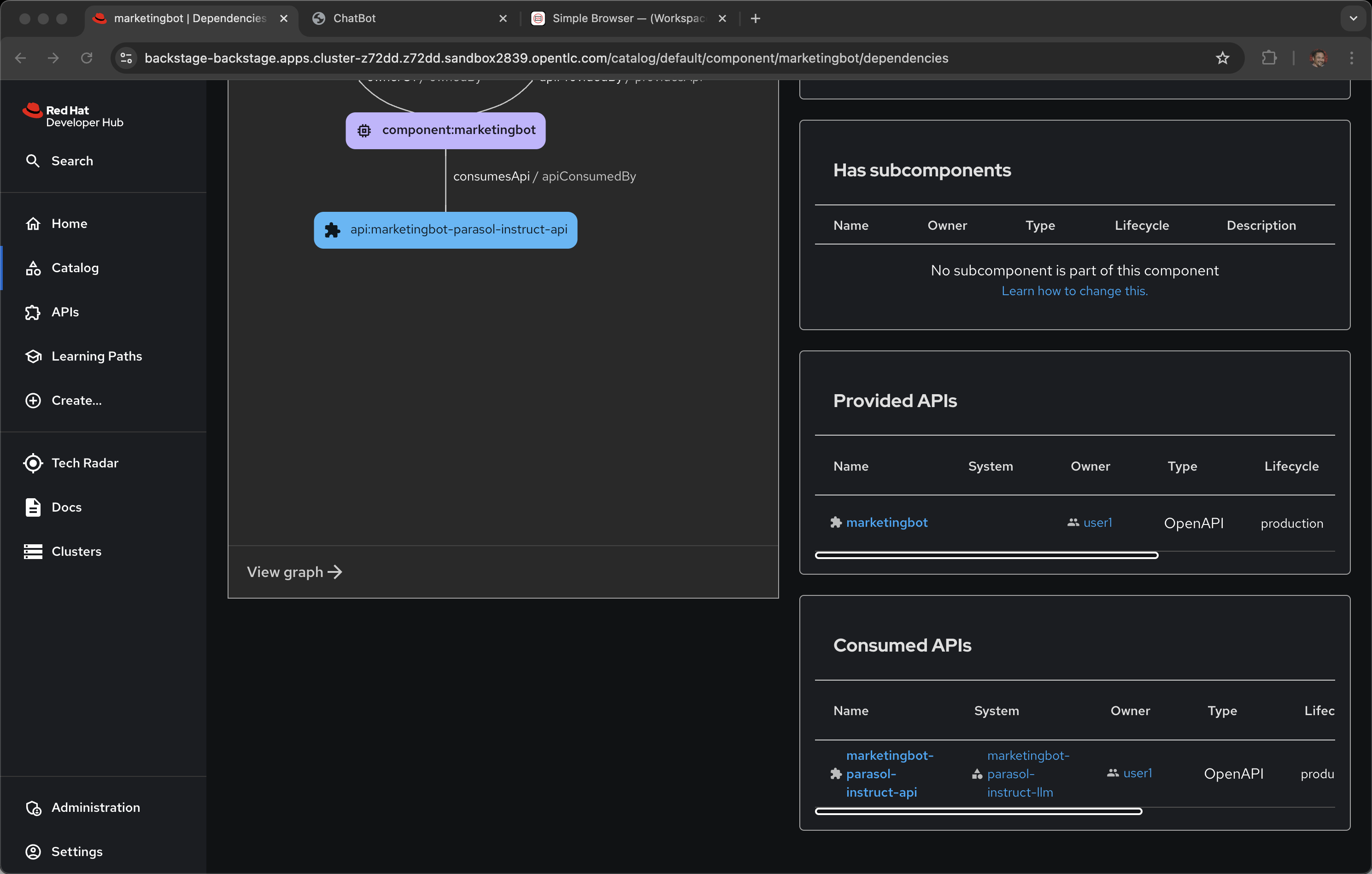

Click on Dependencies

Scroll down to Consumed APIs

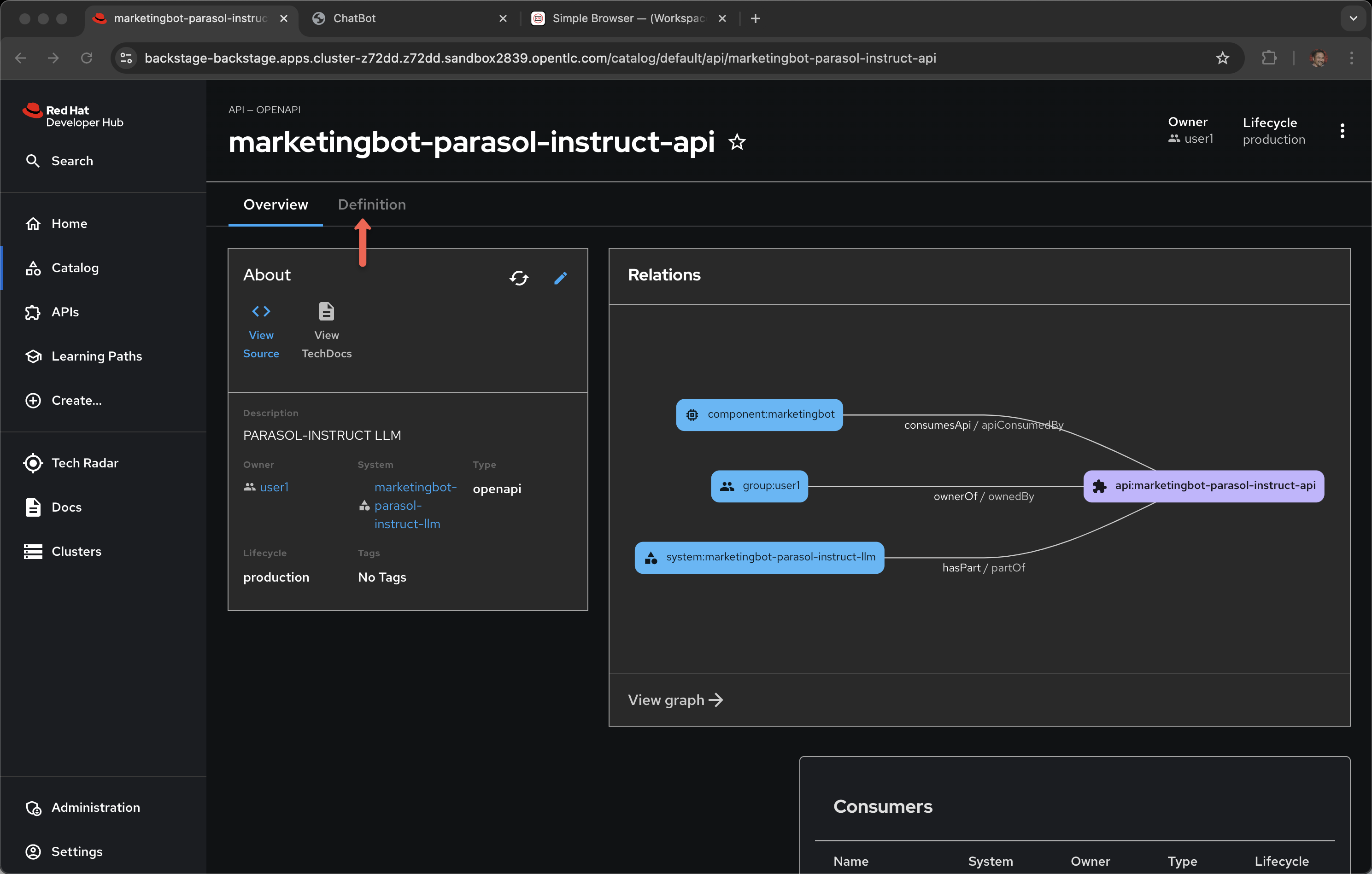

And click on the one ending with -parasol-instruct-api

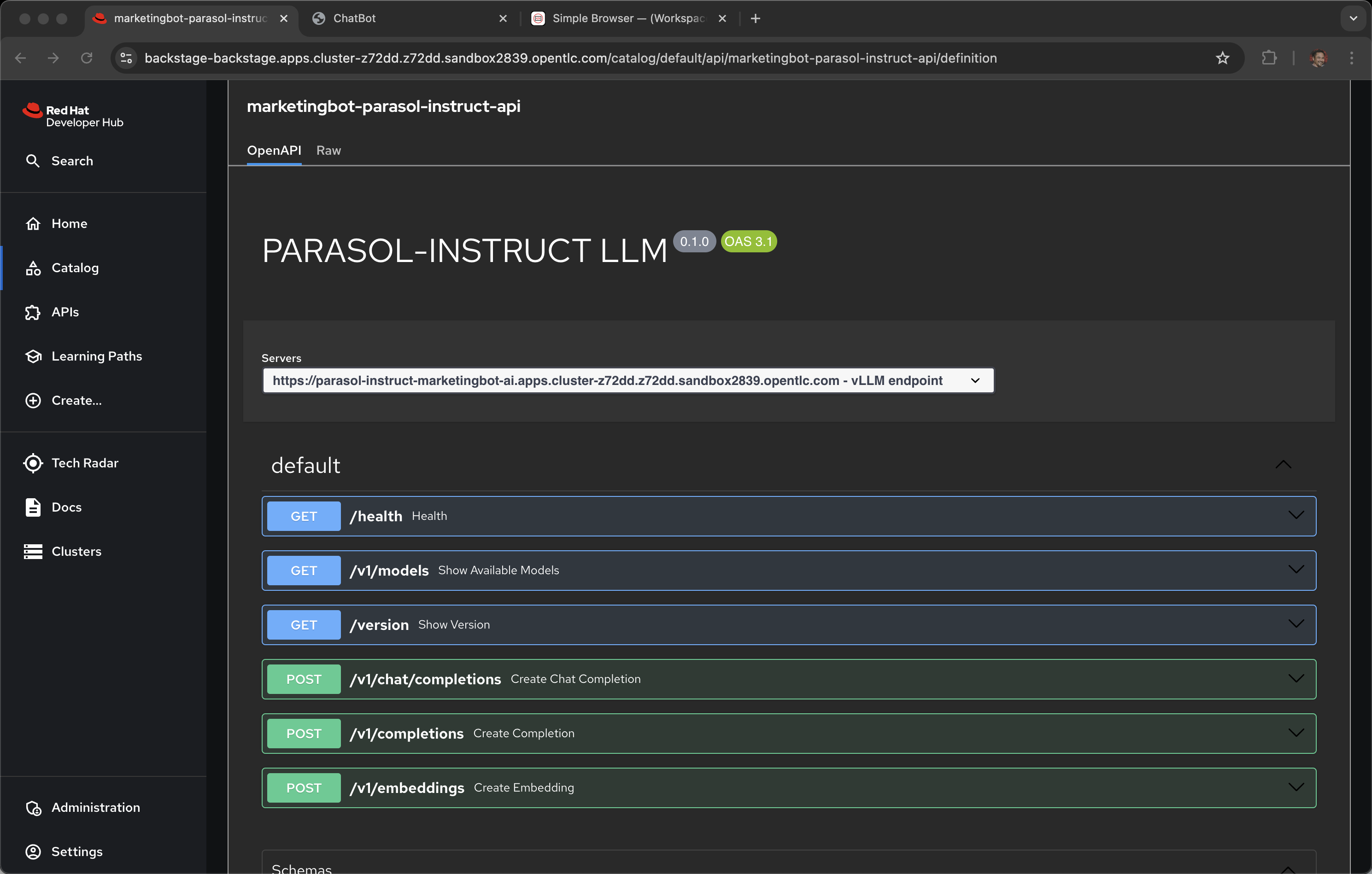

Click on Definition

This is an OpenAPI definition (think Swagger, not to be confused with OpenAI, the creators of ChatGPT), specifically for the vLLM API that is serving the model.

Narrator: Now it is time to change hats. Up to this point we have been acting as an enterprise application developer: the coder who sees models as API endpoints and who focuses on integrating LLMs with existing enterprise systems.
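As a rough illustration of calling that API directly, the sketch below builds a request for the OpenAI-compatible chat completions route that vLLM exposes at /v1/chat/completions. The base URL is a placeholder, and using parasol-instruct as the served model name is an assumption; check the Definition tab for the real values. ModelApiSketch and its methods are hypothetical names. The request is only constructed and printed here, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Illustrative only: builds, but does not send, a chat completions request.
class ModelApiSketch {

    // Escapes the characters that would otherwise break a JSON string literal.
    static String jsonEscape(String s) {
        StringBuilder out = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> out.append("\\\"");
                case '\\' -> out.append("\\\\");
                case '\n' -> out.append("\\n");
                case '\r' -> out.append("\\r");
                case '\t' -> out.append("\\t");
                default -> {
                    if (c < 0x20) out.append(String.format("\\u%04x", (int) c));
                    else out.append(c);
                }
            }
        }
        return out.toString();
    }

    // Minimal request body: the model name plus a single user message.
    static String chatBody(String model, String question) {
        return "{\"model\":\"" + jsonEscape(model) + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\"" + jsonEscape(question) + "\"}]}";
    }

    // POST request against the chat completions route of the given server.
    static HttpRequest chatRequest(String baseUrl, String model, String question) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/v1/chat/completions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(chatBody(model, question)))
                .build();
    }

    public static void main(String[] args) {
        // Placeholder host: replace with the model server URL shown in Developer Hub.
        HttpRequest req = chatRequest("https://model-server.example.com",
                "parasol-instruct", "why is the sky blue?");
        System.out.println(req.method() + " " + req.uri());
        System.out.println(chatBody("parasol-instruct", "why is the sky blue?"));
        // To send it: HttpClient.newHttpClient().send(req, HttpResponse.BodyHandlers.ofString())
    }
}
```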

Let’s switch into modeler mode.