AI with LLMs Demo Setup

In this module we show you the setup steps, the things you do BEFORE going on stage, and introduce a new template that provisions both the SDLC (Software Development Lifecycle) and the MDLC (Model Development Lifecycle). The SDLC is implemented as a Tekton-based Trusted Application Pipeline, as seen in previous modules, and the MDLC is implemented as a Red Hat OpenShift AI (RHOAI) pipeline based on Kubeflow.

RHOAI is responsible for LLM serving, management, and monitoring.

RHTAP + RHDH are responsible for the application code pipeline and lifecycle.

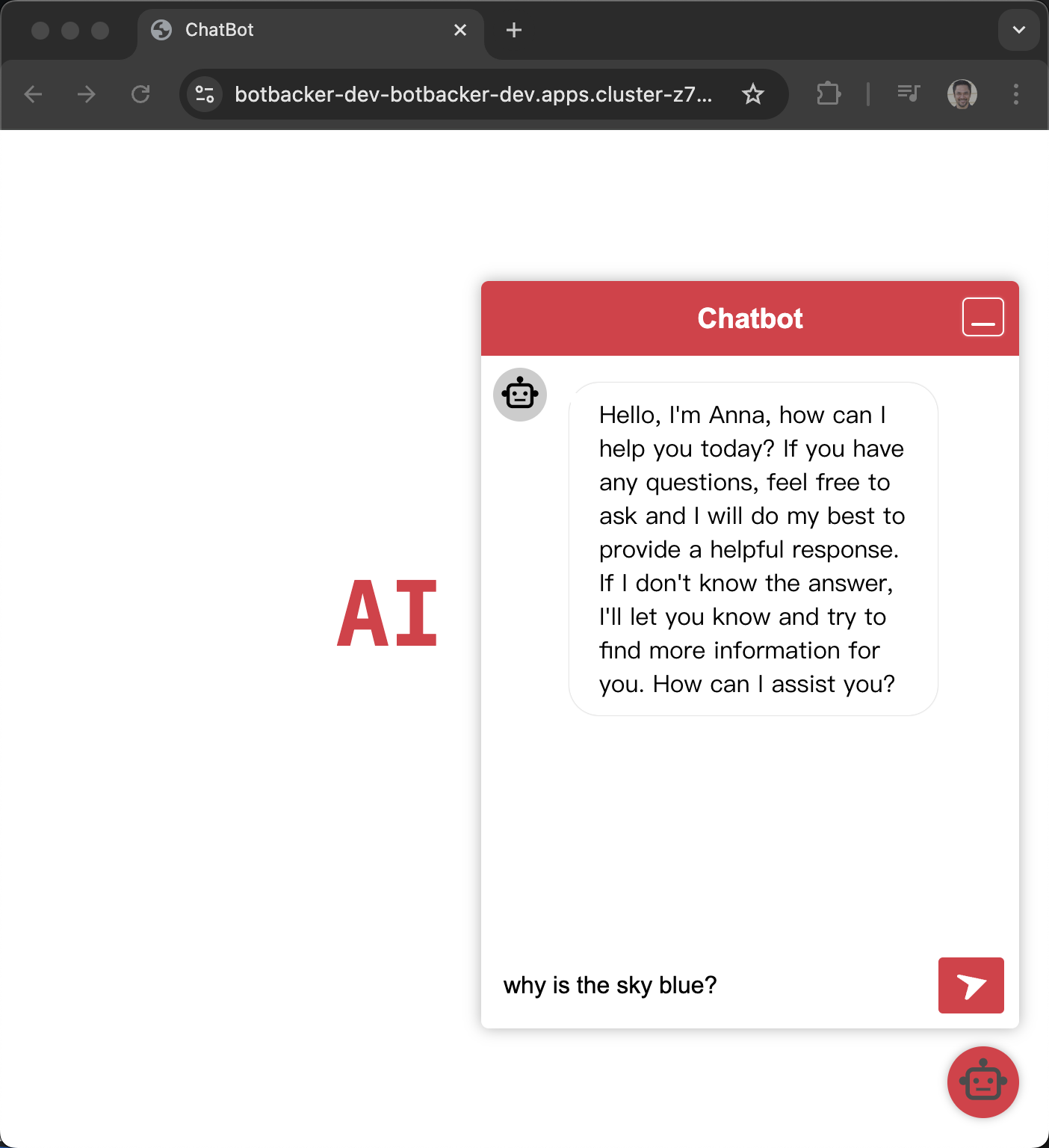

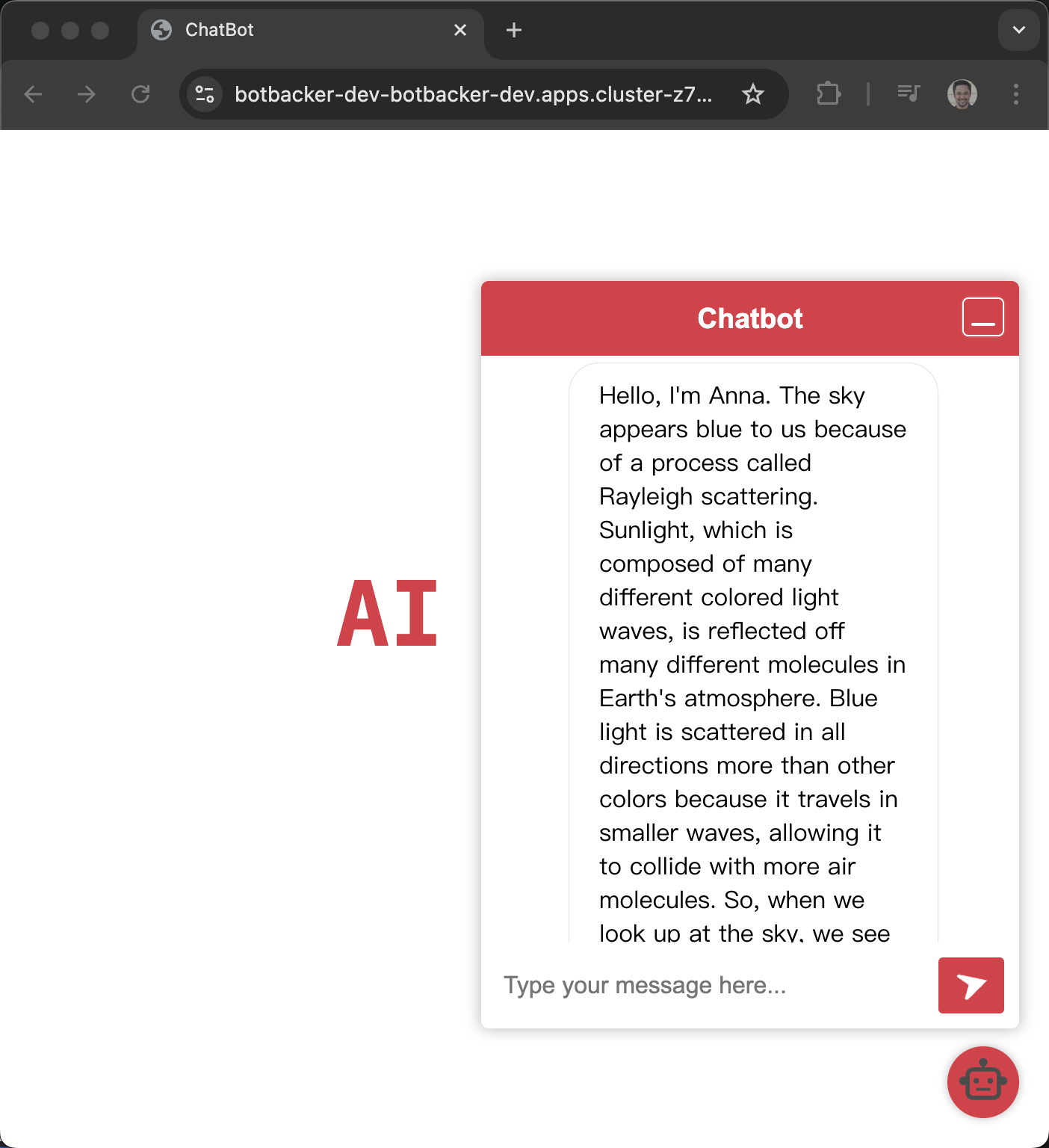

A 10-minute video that walks through an AI/ML template for building out an LLM-powered chatbot.

A behind-the-scenes tour and deeper-dive video.

And a video that describes some of the cleanup process.

Setup

LLMs take a fair bit of time to "spawn" within their pod. Use the cooking show technique: run the template once BEFORE taking the stage and before sharing your screen.

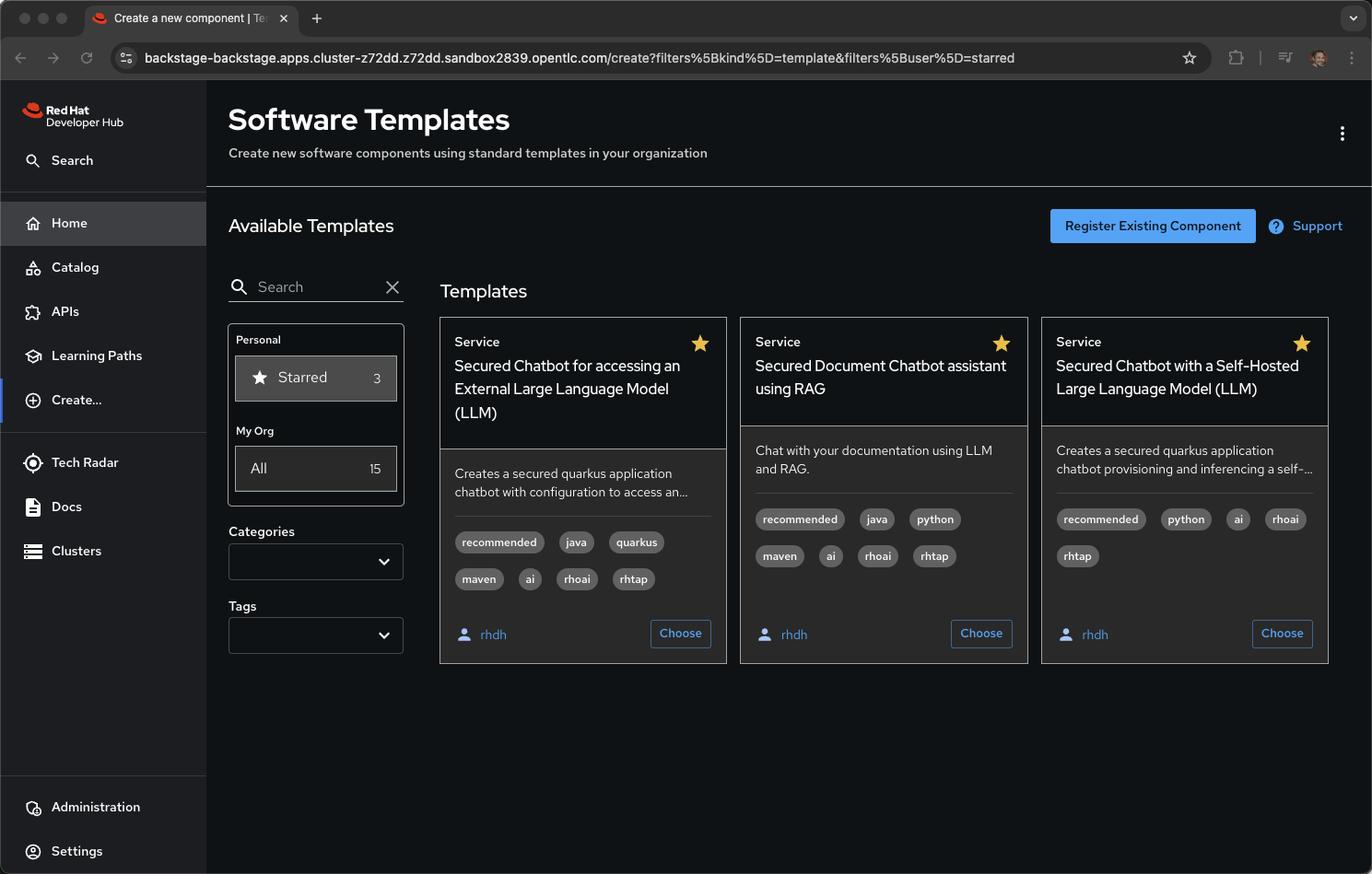

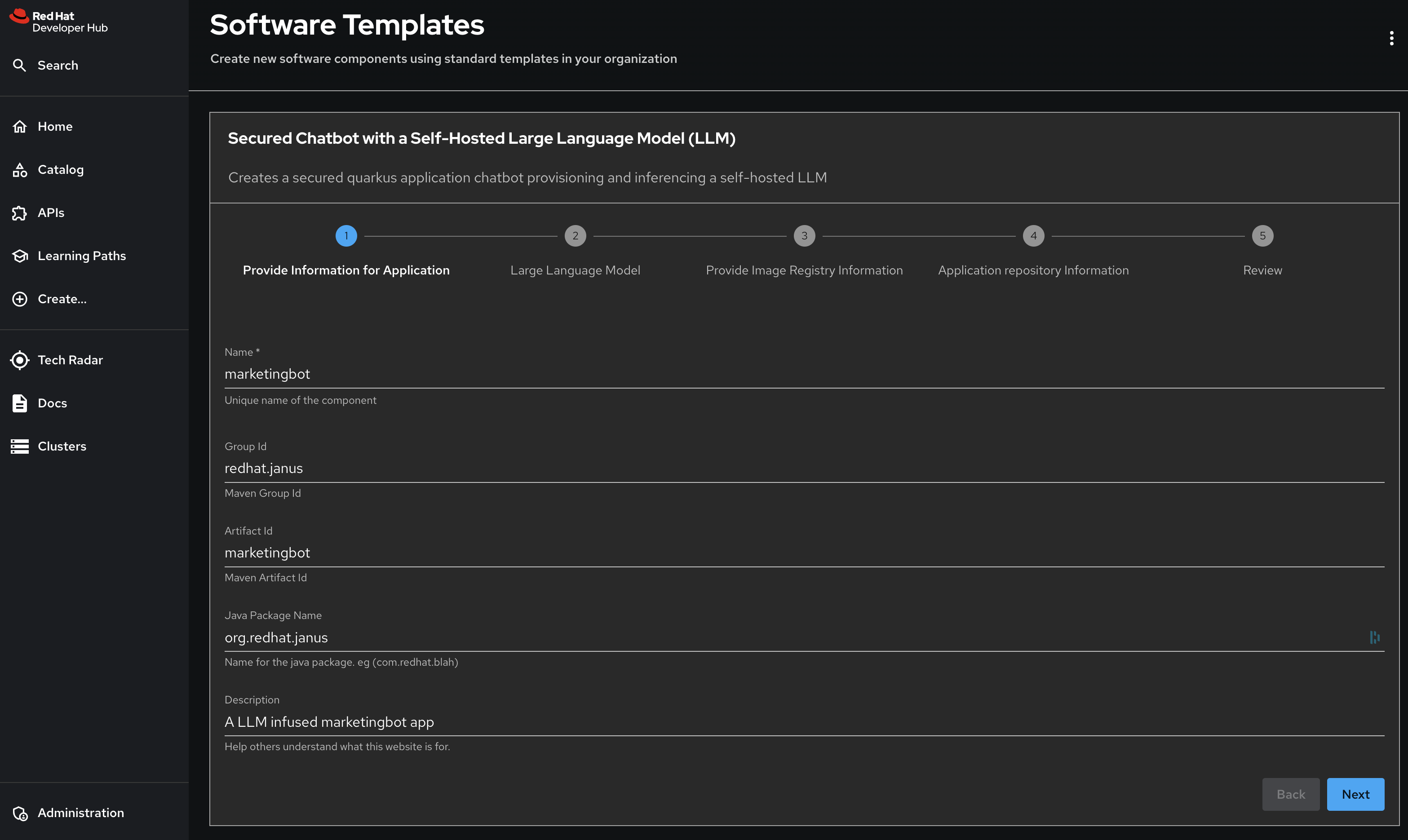

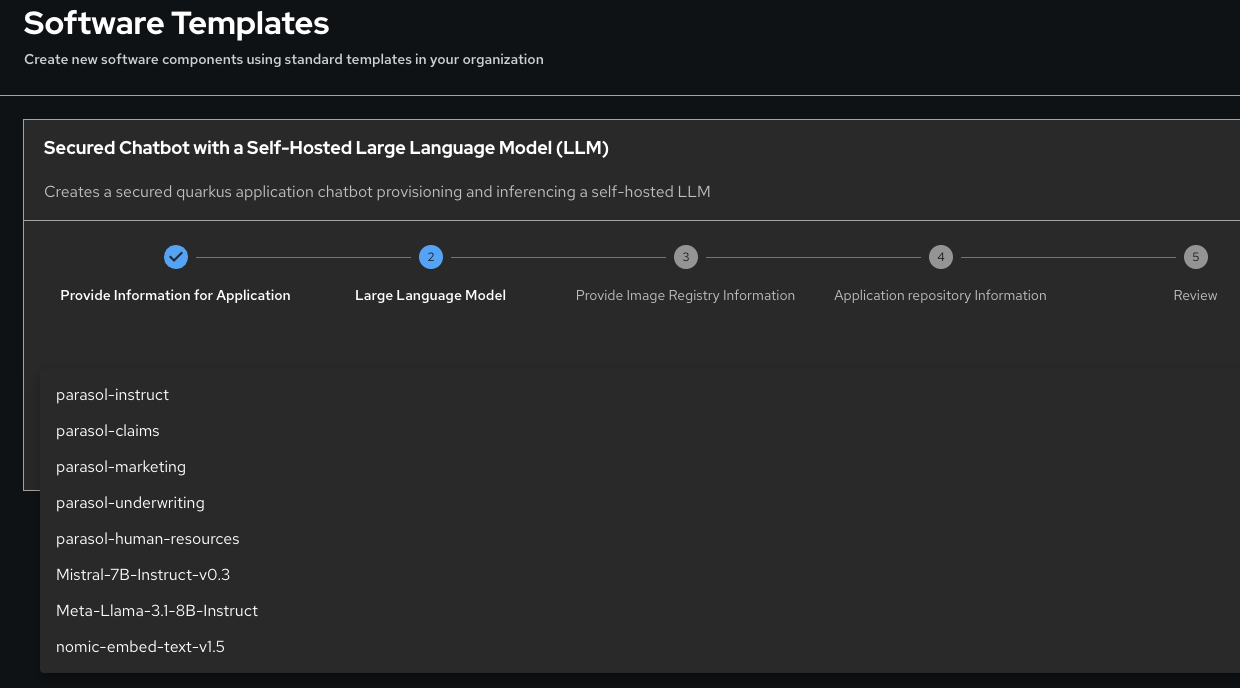

The primary template to run is called Secured Chatbot with a Self-Hosted Large Language Model (LLM). The best way to learn about this template is to execute it.

Start a new project, an application that leverages an LLM for Natural Language Processing (NLP). Creating a net-new LLM-infused microservice is as simple as clicking Choose on the Secured Chatbot with a Self-Hosted Large Language Model (LLM) template.

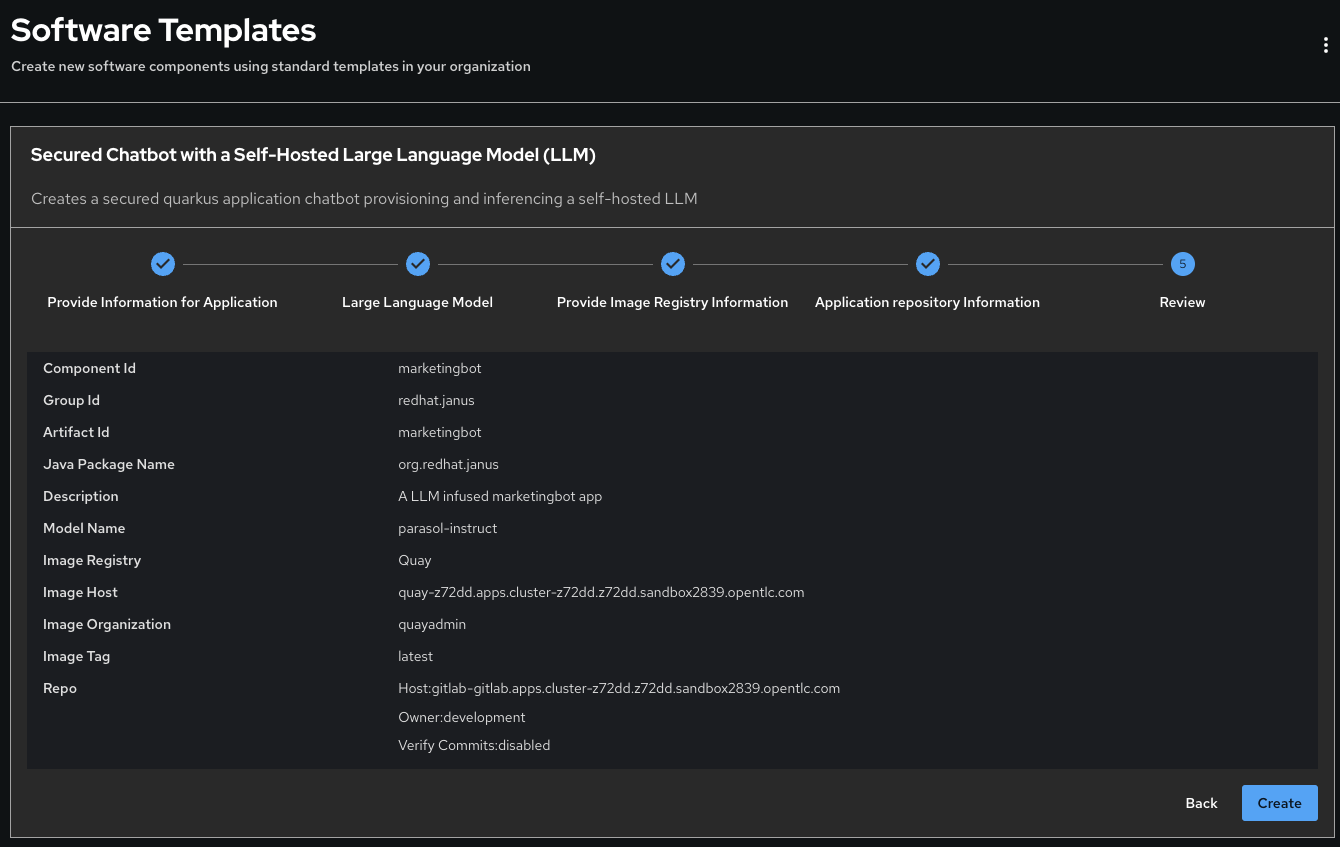

Fill in some fields and follow the wizard:

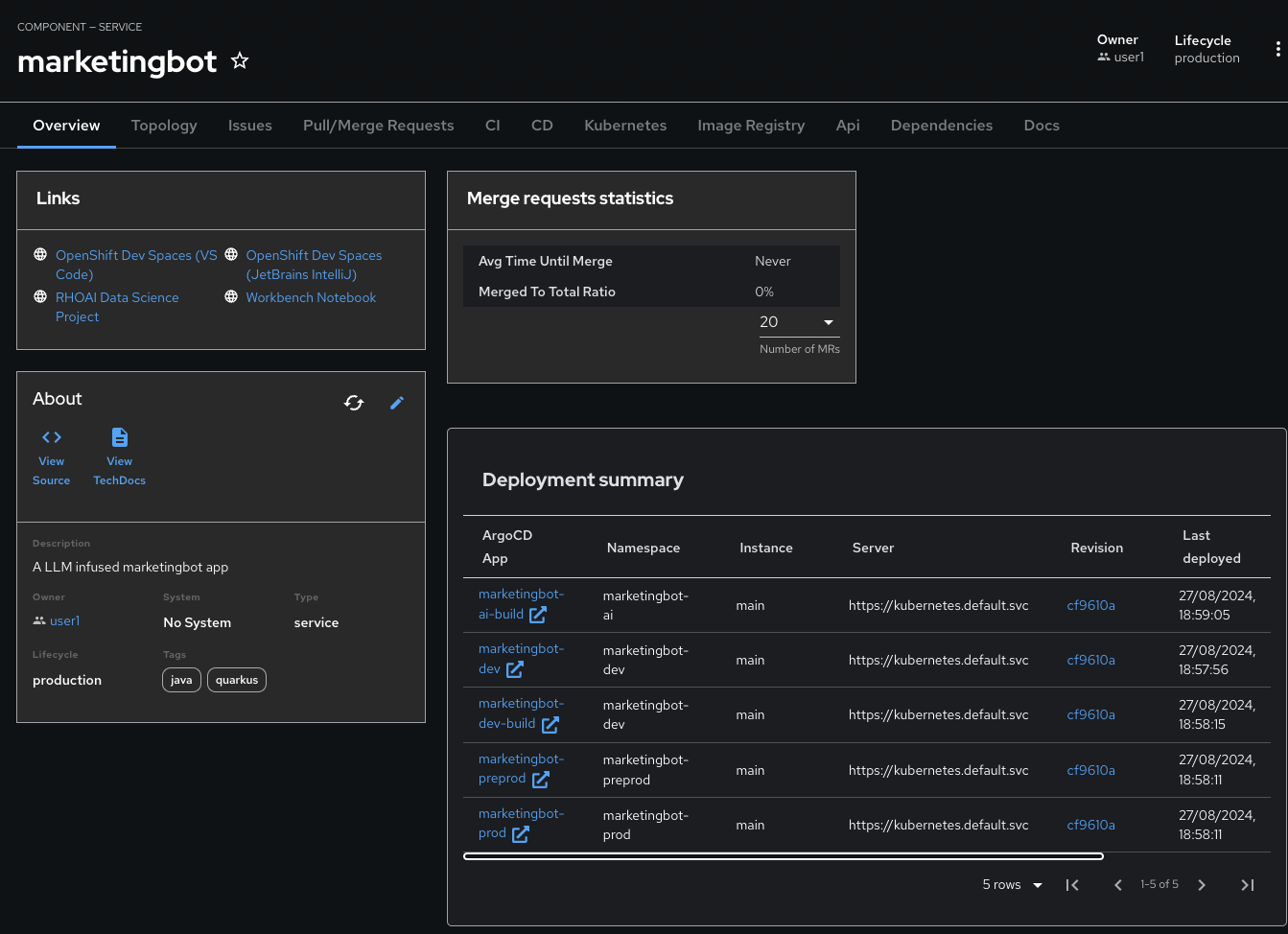

Name: marketingbot

Group Id: redhat.janus

Artifact Id: marketingbot

Java Package Name: org.redhat.janus

Description: A LLM infused marketingbot app

Click Next

Model Name: parasol-instruct

Note: Expanding the list of model names in the screenshot will be covered later. For now, just pick the one you have access to out of the box, which is parasol-instruct.

Click Next

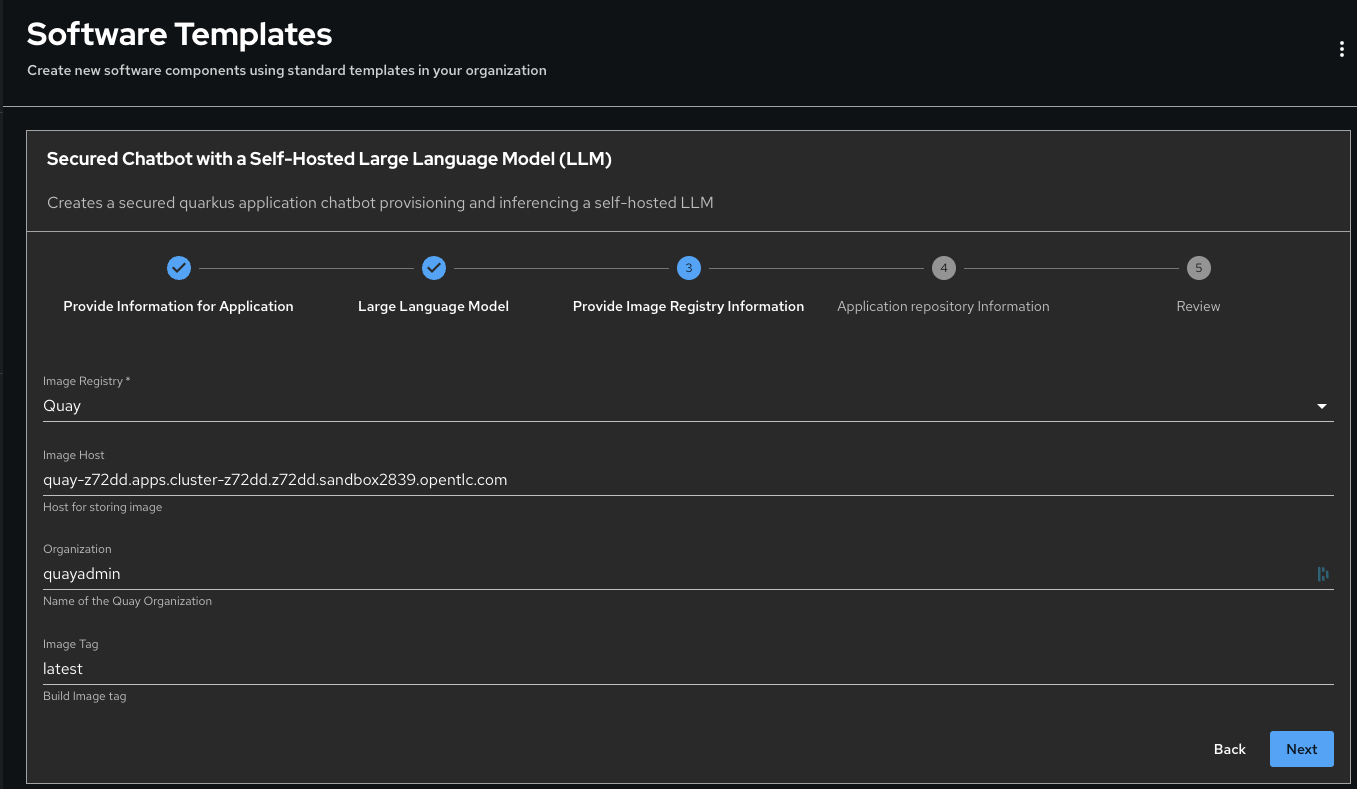

For Image Registry, keep all the defaults

Click Next

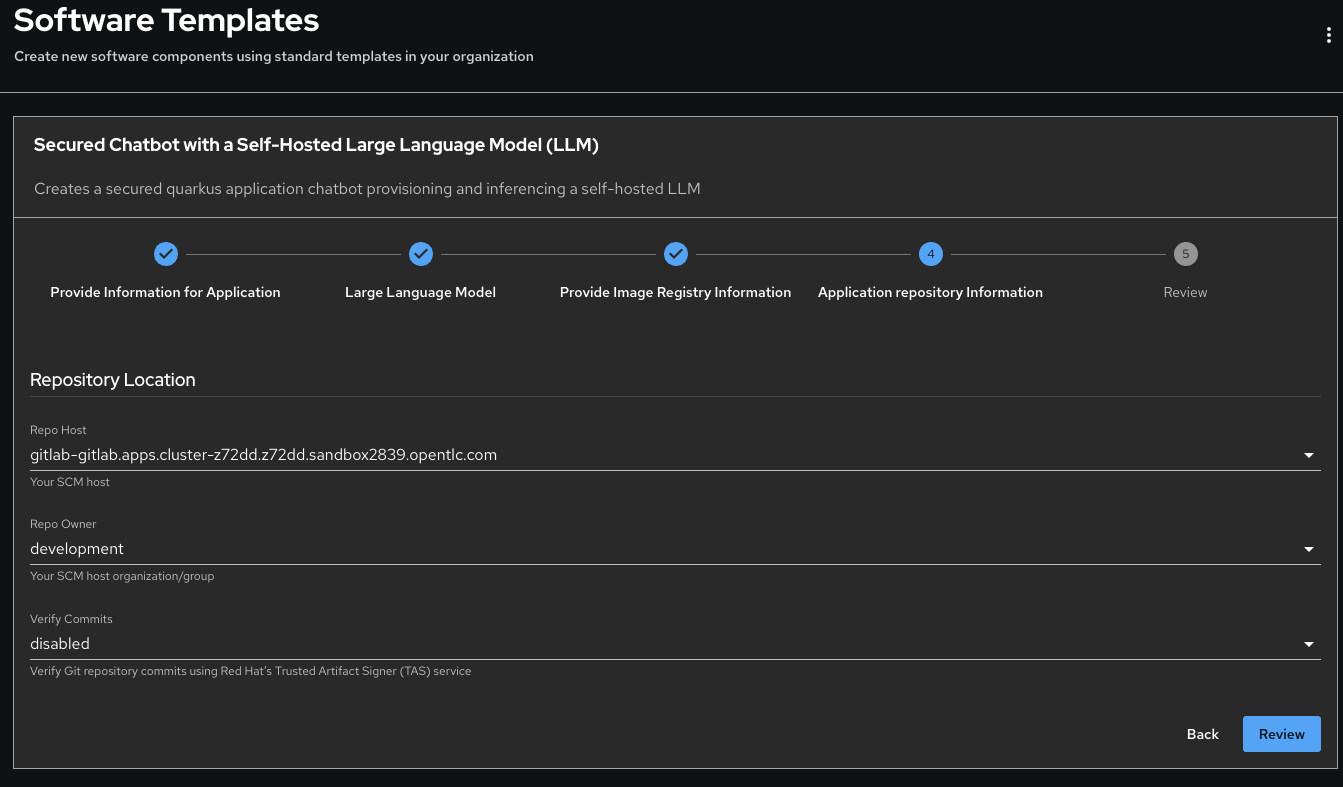

For Repository Location, keep all the defaults

Click Review

Click Create

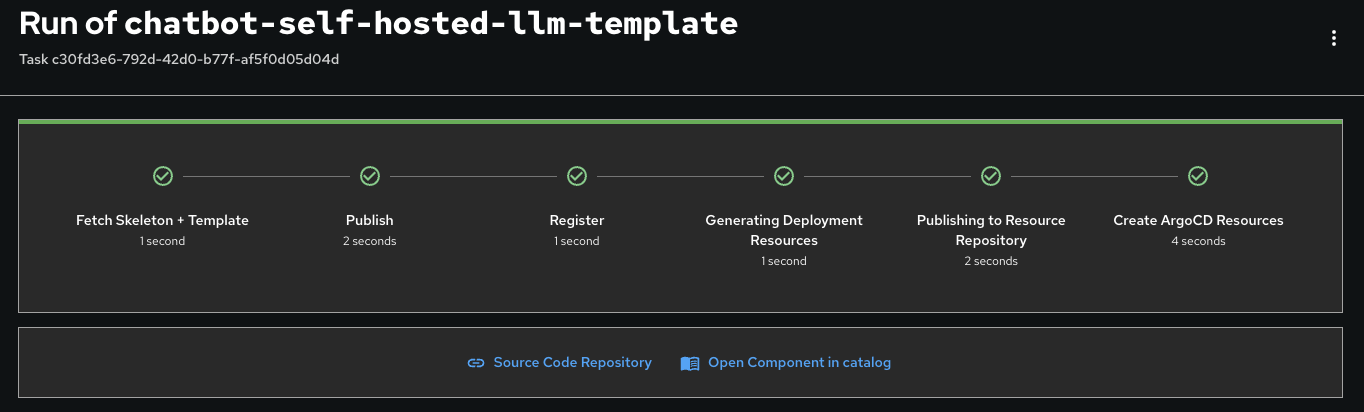

The animation takes a few seconds; however, it hides the heavy lifting happening under the covers.

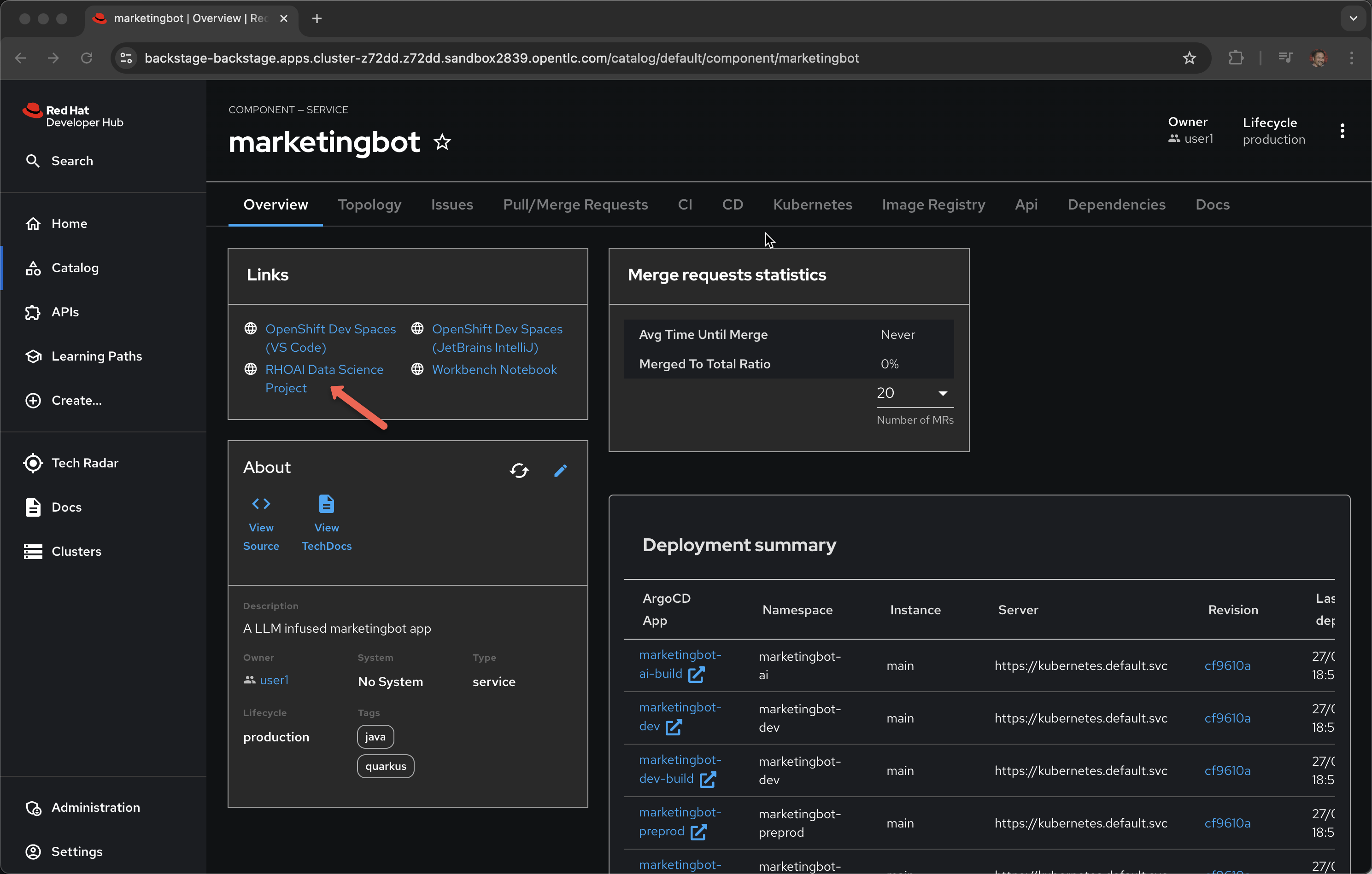

Click on Open Component in catalog

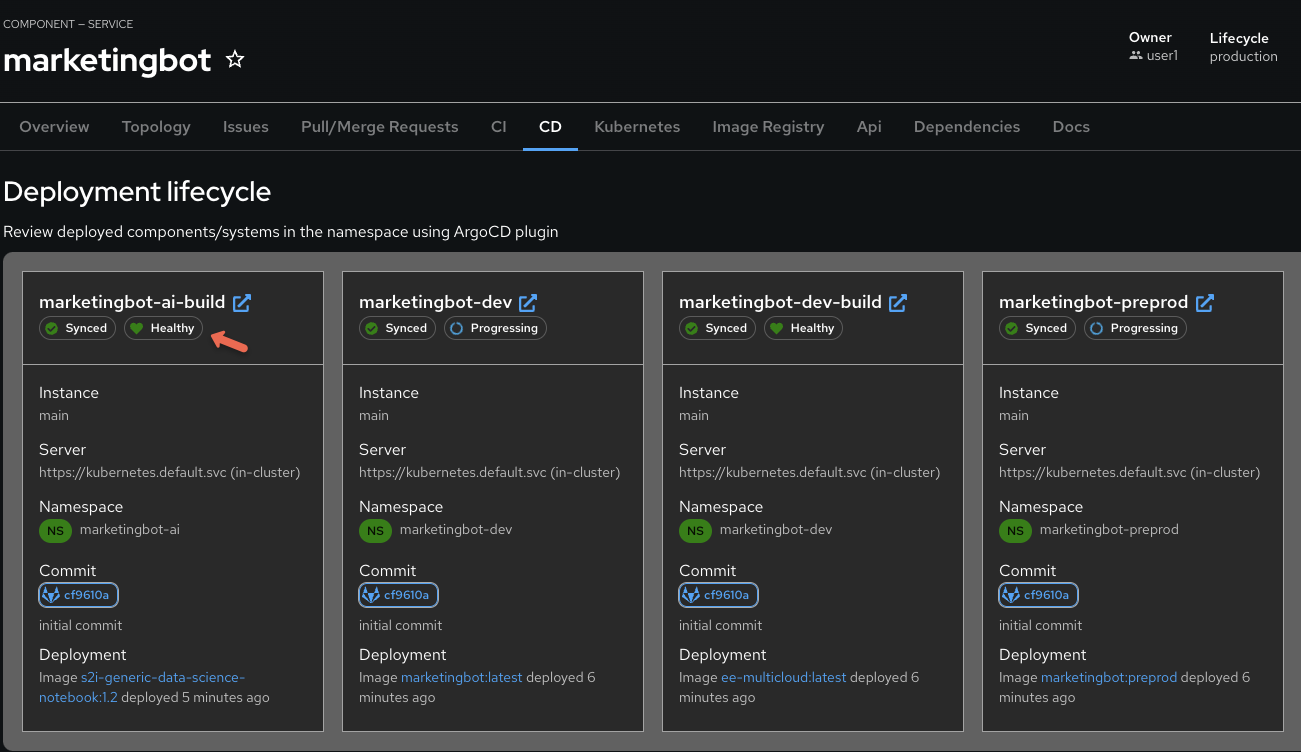

Click on the CD tab

Look for Healthy under the -ai-build application

Click on the Overview tab and then RHOAI Data Science Project

Log in via rhsso with the provided password

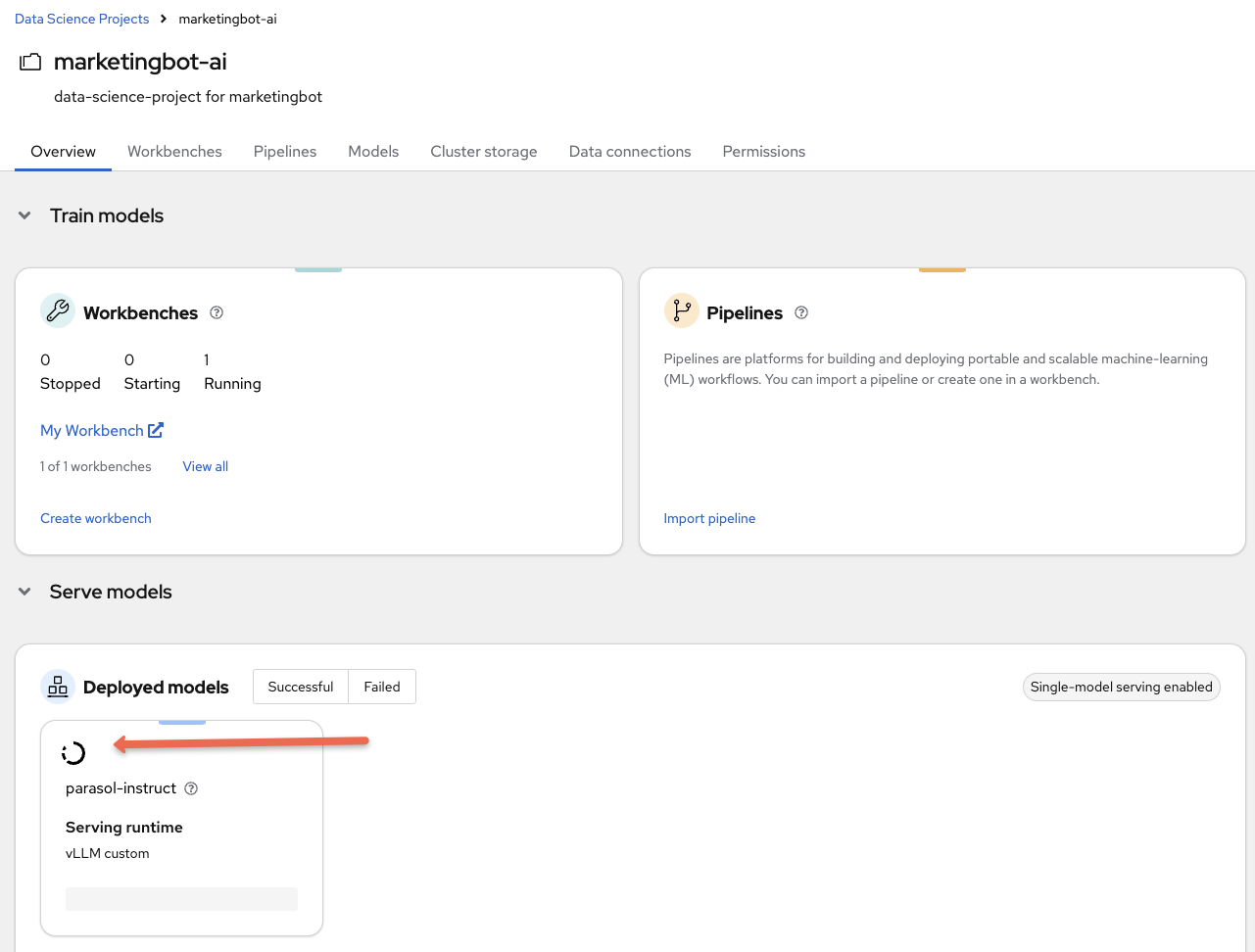

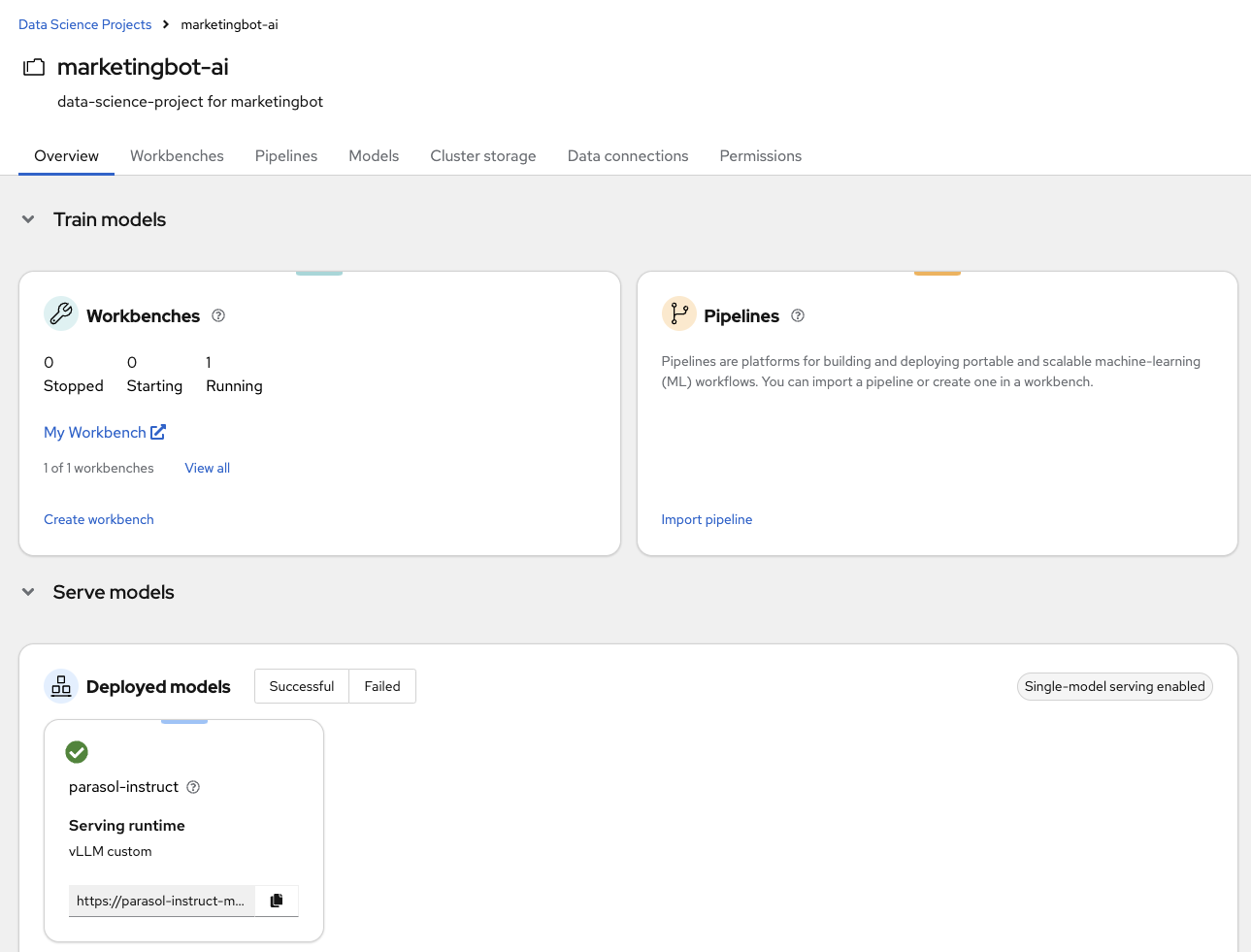

Look at the Deployed Models section. It is very likely that you do not yet have a green check mark indicating that the model server is up. It can take several minutes for the model server to be ready.

The green check mark is important. Again, use the cooking show technique and "pull the baked cake out of the oven".
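If you would rather not keep refreshing the dashboard while you wait for the green check mark, the wait can be scripted. The following is a minimal sketch, not part of the template. It assumes the model is exposed as a KServe InferenceService resource with a standard Ready condition, and that the oc CLI is installed and already logged in to the cluster. The function names, the timeout values, and the placeholder model name and namespace are all hypothetical; substitute the values from your own Data Science Project.

```python
import json
import subprocess
import time
from typing import Callable


def is_ready(resource: dict) -> bool:
    """Return True if the resource has a condition of type Ready with status "True"."""
    conditions = (resource.get("status") or {}).get("conditions") or []
    return any(
        c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
    )


def fetch_inference_service(name: str, namespace: str) -> dict:
    """Fetch the resource as JSON through the oc CLI.

    Assumption: the model server is represented by an InferenceService
    resource and you are already logged in with oc.
    """
    result = subprocess.run(
        ["oc", "get", "inferenceservice", name, "-n", namespace, "-o", "json"],
        capture_output=True,
        text=True,
        check=True,
    )
    return json.loads(result.stdout)


def wait_until_ready(
    fetch: Callable[[], dict],
    timeout_s: float = 900.0,
    interval_s: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll fetch() until it reports Ready, or give up after timeout_s seconds."""
    deadline = clock() + timeout_s
    while True:
        try:
            if is_ready(fetch()):
                return True
        except (subprocess.CalledProcessError, json.JSONDecodeError):
            # The resource may not exist yet, or the output was not JSON; keep polling.
            pass
        if clock() >= deadline:
            return False
        sleep(interval_s)


# Hypothetical usage; replace both placeholders with your own values:
# ready = wait_until_ready(
#     lambda: fetch_inference_service("<model-name>", "<namespace>")
# )
# print("model server ready" if ready else "timed out waiting for model server")
```

The fetch function is passed in as a parameter so the polling loop can be exercised without a cluster. Whatever the script reports, confirm the green check mark in the RHOAI dashboard before going on stage.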

Now, you are ready to begin the basic demo flow.